AI and Data Analytics: Tools, Platforms, and Trends

AI-Driven Data Analytics: An Executive Overview

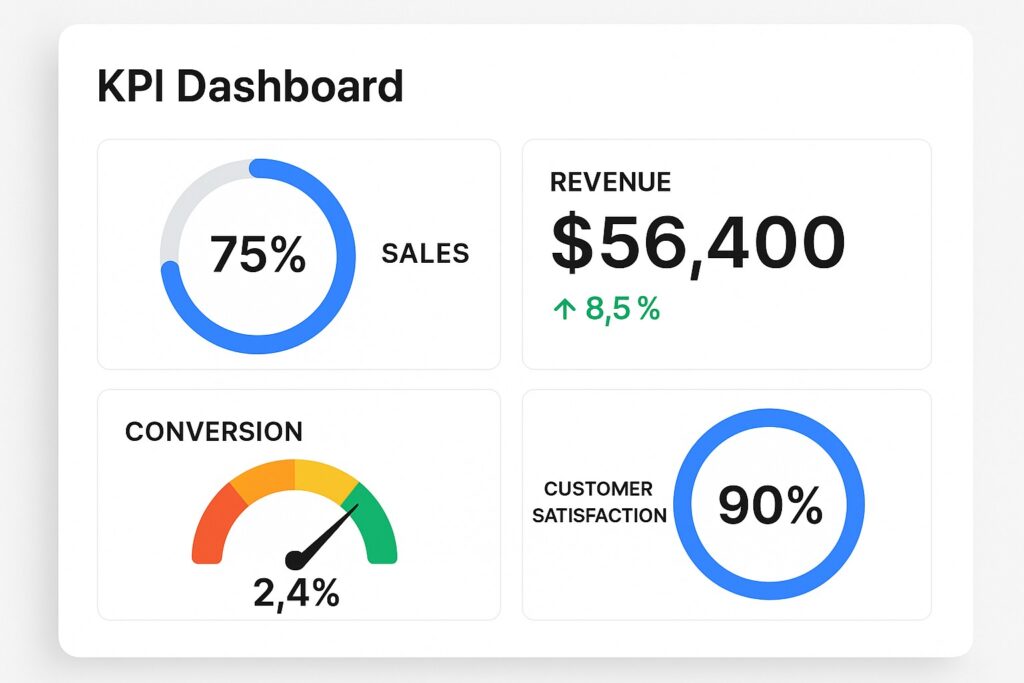

Across industries, AI-driven data analytics is redefining how leaders interpret signals from data, accelerate decision cycles, and scale insights across departments. Organizations are moving from descriptive reports to predictive and prescriptive analytics, leveraging machine learning and natural language interfaces to turn data into actionable guidance. The result is not only faster dashboards but a new operating rhythm where data-driven insight informs strategy, product development, customer experience, and risk management in near real time.

Success hinges on aligning data assets with business outcomes. AI analytics enables models that forecast demand, detect anomalies, optimize pricing, and automate routine analysis. Yet that value is realized only when data is accessible to the right people, governed for quality and trust, and integrated into day-to-day processes. In practice, that means blending data engineering, data science, and domain expertise to produce interpretable, auditable results that executives can rely on.

To realize durable outcomes, organizations must design for data quality, governance, and risk management from the start. This includes cataloging data assets, defining the metrics used to measure success, and establishing guardrails around model deployment and monitoring. Adoption also requires change management, with governance councils, clear ownership, and training so analysts and business users can collaborate with AI-enabled tools and interpret model outputs responsibly.

- Real-time, AI-powered insights that scale across teams

- Cloud-native scalability and cost-efficiency through pay-as-you-go compute

- Democratization of analytics through self-service and natural language interfaces

- Proactive anomaly detection and automated alerting

- Operational efficiency gained from automated data preparation and insight delivery
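
To make the anomaly-detection and alerting item above more concrete, here is a minimal sketch that flags values deviating sharply from a trailing window. The function name `find_anomalies`, the window size, the threshold, and the sample data are illustrative assumptions; production systems would usually rely on more robust statistical or learned detectors.

```python
from statistics import mean, stdev

def find_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value is more than `threshold` standard
    deviations away from the mean of the preceding `window` values."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: treat any change at all as an anomaly.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical daily order counts with one obvious spike at index 7.
daily_orders = [100, 102, 98, 101, 99, 103, 100, 250, 101, 97]
for index in find_anomalies(daily_orders):
    print(f"ALERT: day {index} value {daily_orders[index]} looks anomalous")
```

A trailing window keeps the check cheap enough to run on every new data point, at the cost of being slow to recover after a spike enters the window.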

Data-Centric AI: Data Strategy and Governance

In data-centric AI, the quality and management of data determine model performance more than the complexity of models themselves. The shift emphasizes building data products: curated datasets with documented lineage, versioning, and clear ownership. Organizations invest in data catalogs, lineage tracing, and quality gates to ensure that datasets used for training and inference meet the required standards. A data-centric stance also aligns incentives across teams, reducing brittle pipelines and enabling faster experimentation with lower risk.
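
A quality gate of the kind described above can be sketched as a function that inspects a dataset and returns a list of rule violations, so a pipeline can refuse to publish data that fails. The rules chosen here (null-rate limit and key uniqueness), the function name `run_quality_gate`, and the sample rows are assumptions for illustration, not a prescribed standard.

```python
from collections import Counter

def run_quality_gate(rows, required_fields, key_field, max_null_rate=0.05):
    """Check a dataset (a list of dicts) and return human-readable failures.
    An empty list means the dataset passed the gate."""
    if not rows:
        return ["dataset is empty"]
    failures = []
    # Completeness: each required field may be null only up to max_null_rate.
    for field in required_fields:
        nulls = sum(1 for row in rows if row.get(field) is None)
        rate = nulls / len(rows)
        if rate > max_null_rate:
            failures.append(
                f"{field}: null rate {rate:.0%} exceeds {max_null_rate:.0%}"
            )
    # Uniqueness: the key field should identify exactly one row.
    key_counts = Counter(row.get(key_field) for row in rows)
    duplicates = sum(1 for count in key_counts.values() if count > 1)
    if duplicates:
        failures.append(f"{key_field}: {duplicates} duplicated key value(s)")
    return failures

# Hypothetical sample with one missing region and one repeated customer_id.
sample = [
    {"customer_id": 1, "region": "EU"},
    {"customer_id": 2, "region": None},
    {"customer_id": 2, "region": "US"},
    {"customer_id": 4, "region": "US"},
]
failures = run_quality_gate(
    sample, ["customer_id", "region"], "customer_id", max_null_rate=0.10
)
for failure in failures:
    print("GATE FAILED:", failure)
```

Returning descriptive failures rather than a bare boolean gives data owners something actionable to fix.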

Governance and privacy become enablers rather than impediments when designed into the data platform. This means rigorous data lineage, policy enforcement, access controls, and audit trails that allow regulators and internal stakeholders to understand how insights were produced. Model governance and risk assessment complement data governance, ensuring that models operate within defined bounds and that drift, bias, and fairness are monitored continuously.
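
As a rough illustration of policy enforcement combined with an audit trail, the sketch below checks a role against a policy table and records every decision, allowed or denied. The roles, dataset names, and the in-memory `AUDIT_LOG` are hypothetical; a real platform would delegate this to its access-control and logging services.

```python
from datetime import datetime, timezone

# Hypothetical policy table: which roles may read which datasets.
POLICIES = {
    "analyst": {"sales_summary"},
    "data_scientist": {"sales_summary", "customer_features"},
}

# In-memory stand-in for a durable, append-only audit store.
AUDIT_LOG = []

def request_access(user, role, dataset):
    """Return True if the role may read the dataset; log the decision either way."""
    allowed = dataset in POLICIES.get(role, set())
    AUDIT_LOG.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "dataset": dataset,
        "allowed": allowed,
    })
    return allowed

print(request_access("ana", "analyst", "customer_features"))        # denied
print(request_access("raj", "data_scientist", "customer_features"))  # allowed
print(len(AUDIT_LOG), "decisions recorded")
```

Logging denials as well as grants is the design choice that matters here: reviewers can then see attempted access, not only successful access.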

Architecture decisions shape how data flows into AI analytics. Patterns such as data lakehouses, data meshes, data virtualization, and semantic layers strike different balances between autonomy and control. The table below outlines common patterns, their core principles, main benefits, and example tools to help decision-makers select the right fit for their organization.

| Pattern | Core Principle | Benefit | Example Tool |
|---|---|---|---|
| Data Lakehouse | Unifies storage and analytics across data types | Simplified data access, lower latency, unified governance | Databricks Delta Lake; Azure Synapse |
| Data Mesh | Domain-oriented data ownership and product thinking | Scalable data democratization with clear accountability | Amundsen; Collibra |
| Data Virtualization | Virtualized access to distributed sources | Faster cross-source analytics without physically copying data | Denodo; TIBCO |
| Semantic Layer | Business-facing abstraction for metrics and semantics | Consistent metrics and easy reporting | Looker semantic layer; dbt with semantic definitions |
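
To illustrate the semantic-layer idea of defining a metric once and reusing it everywhere, here is a minimal sketch that compiles named metrics into SQL text. The `Metric` class, the `METRICS` registry, and the table and column names are assumptions made for this example; they do not reflect the configuration format of Looker, dbt, or any other product.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Metric:
    name: str        # business-facing metric name
    expression: str  # SQL aggregate that defines the metric
    table: str       # source table the metric is computed from

# Hypothetical central registry: the single place a metric is defined.
METRICS = {
    "net_revenue": Metric("net_revenue", "SUM(amount - discount)", "orders"),
    "order_count": Metric("order_count", "COUNT(*)", "orders"),
}

def compile_query(metric_name, group_by=()):
    """Build a SQL string for a registered metric, optionally grouped.
    `group_by` must come from a trusted list of dimension names."""
    metric = METRICS[metric_name]
    dims = ", ".join(group_by)
    select_dims = f"{dims}, " if dims else ""
    sql = (
        f"SELECT {select_dims}{metric.expression} AS {metric.name} "
        f"FROM {metric.table}"
    )
    if dims:
        sql += f" GROUP BY {dims}"
    return sql

print(compile_query("net_revenue", ["region"]))
print(compile_query("order_count"))
```

Because every report calls `compile_query` rather than hand-writing the aggregate, a change to the definition of net revenue propagates to all consumers at once.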

Core Tools and Platforms Shaping Analytics

The tool landscape for AI-infused analytics spans data integration, data quality and governance, analytics and ML platforms, and visualization capabilities. Enterprise buyers increasingly require tools that not only perform well in raw benchmarks but also integrate seamlessly with governance frameworks, security controls, and data catalogs. A holistic stack supports both traditional BI needs and emerging AI workloads, enabling teams to move from descriptive insights to autonomous decision support.

Key categories to consider include data ingestion and orchestration, data quality and lineage, machine learning model development and deployment, and AI-enabled business intelligence. These domains must work in concert, with clear ownership, standardized metadata, and robust monitoring. In practice, organizations often curate a portfolio of tools that share common data models, security policies, and access controls to minimize friction and risk as analytics maturity evolves.

Selecting the right combination of tools requires alignment with data governance, security, and regulatory requirements, as well as the specific business use cases. Organizations should evaluate interoperability with existing data sources, scalability to handle growing data volumes, and the ease with which business users can create and modify analyses. The aim is to establish reliable, auditable analytics that teams trust and rely on for timely decisions.

- Interoperability across data sources and platforms

- Scalability for growing data volumes and user bases

- Usability and enablement for business users and analysts

- Security and compliance, including data privacy controls and audit trails

Cloud Platforms and AI Integration: Azure Synapse, Snowflake, and Beyond

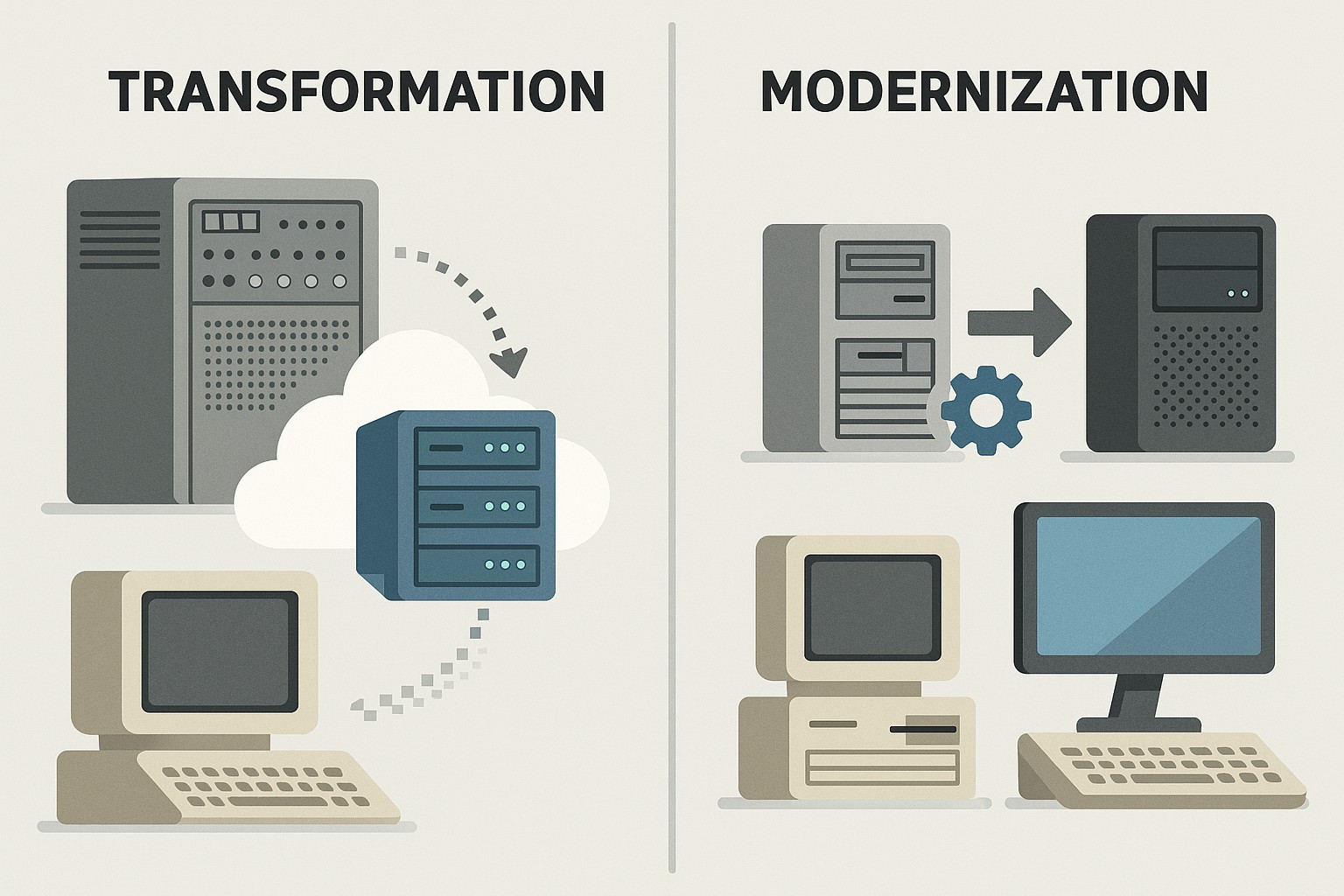

Cloud-native data platforms have become the backbone of modern AI-infused analytics, delivering scalable storage, powerful compute, and integrated AI services. These platforms enable teams to run advanced analytics workloads, train and deploy models, and serve insights directly within data pipelines. A well-architected cloud stack reduces data movement, accelerates experimentation, and supports governance at scale through policy-driven access and centralized logging.

Leading platforms such as Azure Synapse Analytics, Snowflake, Amazon Redshift, and Google BigQuery offer distinct strengths. Azure Synapse combines data integration, big data processing, and data warehousing with native integration to AI services and notebooks. Snowflake emphasizes separation of storage and compute, cross-region data sharing, and strong data governance. Across ecosystems, organizations leverage feature stores, model hosting, and automated data quality checks to operationalize AI insights within analytics pipelines.

Architectural patterns for AI-enabled analytics emphasize secure data access, reproducible experimentation, and responsible AI. For example, a typical workflow might involve ingesting data into a data lake or lakehouse, applying transformations in a notebook environment, deploying a scoring model in a serverless function, and delivering results through a BI layer. Below is a compact code example illustrating how a simple scoring workflow can be approximated in a cloud-enabled context.

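```python
# Example snippet: score records against a hosted AI model.
# The endpoint URL and model name are placeholders, not a real service.
import requests

def score(record):
    """Send one record's features to the scoring endpoint and return the parsed JSON."""
    payload = {"model": "customer-churn-v1", "features": record}
    r = requests.post("https://ai.example.com/v1/score", json=payload, timeout=10)
    r.raise_for_status()  # surface HTTP errors instead of parsing an error body
    return r.json()

# Example usage with a batch of records (illustrative data)
batch_of_records = [{"tenure_months": 12, "monthly_spend": 49.0}]
results = [score(r) for r in batch_of_records]
```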

Implementation Patterns: From Data Ingestion to Insight

Effective AI analytics rely on end-to-end workflows that move data from raw sources to trusted insights. A typical pattern starts with robust data ingestion from diverse sources—streaming and batch—followed by cleansing, normalization, and enrichment. This prepares data for feature engineering, model training, and scoring, while maintaining lineage and quality signals that auditors and regulators can review.
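
The ingestion-to-insight flow described above can be sketched as a chain of small steps, with the runner recording row counts at each stage as a simple lineage signal. The step functions, field names, and sample records are hypothetical and stand in for whatever cleansing, normalization, and enrichment a real pipeline performs.

```python
def cleanse(rows):
    """Drop records that lack an id."""
    return [r for r in rows if r.get("id") is not None]

def normalize(rows):
    """Trim whitespace and lowercase email addresses."""
    return [{**r, "email": r["email"].strip().lower()} for r in rows]

def enrich(rows):
    """Derive the email domain as an extra feature."""
    return [{**r, "domain": r["email"].split("@")[-1]} for r in rows]

def run_pipeline(rows, steps):
    """Apply each step in order and record rows in/out per step as lineage."""
    lineage = []
    for step in steps:
        rows_in = len(rows)
        rows = step(rows)
        lineage.append(
            {"step": step.__name__, "rows_in": rows_in, "rows_out": len(rows)}
        )
    return rows, lineage

# Hypothetical raw batch with one unusable record.
raw = [
    {"id": 1, "email": " Ana@Example.com "},
    {"id": None, "email": "x@y.org"},
    {"id": 3, "email": "BOB@example.org"},
]
clean, lineage = run_pipeline(raw, [cleanse, normalize, enrich])
for entry in lineage:
    print(entry)
```

Keeping each step a pure function makes it straightforward to test in isolation and to show an auditor exactly where records were dropped.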

Automation and orchestration are essential to scale. Production pipelines require reliable scheduling, dependency tracking, and automated testing to catch regressions. MLOps practices help manage versioning for data, features, and models, while continuous integration and delivery pipelines ensure that improvements reach production with predictable risk. Operational dashboards and anomaly detection keep stakeholders informed about pipeline health and data quality over time.
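
One way to picture versioning for data and models together is to derive a dataset version from its content and store that version alongside each registered model. The sketch below does this in memory; `dataset_version`, `ModelRegistry`, and the sample metrics are illustrative assumptions rather than the interface of any particular MLOps tool.

```python
import hashlib
import json

def dataset_version(rows):
    """Derive a short, content-based version id for a list of dict records.
    Key order inside a record does not matter; row order does."""
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]

class ModelRegistry:
    """In-memory stand-in for a model registry."""

    def __init__(self):
        self._entries = []

    def register(self, name, data_version, metrics):
        """Record a new version of `name` tied to the data it was trained on."""
        version = 1 + sum(1 for e in self._entries if e["name"] == name)
        entry = {
            "name": name,
            "version": version,
            "data_version": data_version,
            "metrics": metrics,
        }
        self._entries.append(entry)
        return entry

    def latest(self, name):
        """Return the most recently registered entry for `name`, or None."""
        matches = [e for e in self._entries if e["name"] == name]
        return matches[-1] if matches else None

# Hypothetical training snapshots and evaluation metrics.
rows_v1 = [{"id": 1, "spend": 10.0}]
rows_v2 = [{"id": 1, "spend": 12.5}]
registry = ModelRegistry()
registry.register("customer-churn", dataset_version(rows_v1), {"auc": 0.81})
registry.register("customer-churn", dataset_version(rows_v2), {"auc": 0.84})
print(registry.latest("customer-churn"))
```

Tying each model version to a data version is what lets a team answer "which data produced this prediction?" during a review or rollback.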

Adoption strategies must emphasize governance, transparency, and collaboration. Teams should establish data contracts, define metrics that matter to the business, and document model assumptions. Regular reviews for bias, drift, and performance ensure that AI analytics remain aligned with corporate values and regulatory expectations. Implementing education and enablement programs accelerates the ability of analysts and business users to interpret AI-driven insights responsibly.

- Data ingestion best practices and quality gates

- Feature store, versioning, and model registry

- Model monitoring, drift detection, and automated alerting

- Cross-functional governance and collaborative workflows
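
For the drift-detection item above, one widely used measure is the Population Stability Index (PSI), which compares how a feature's values are distributed at training time versus in production. The sketch below is a simplified version that assumes non-empty numeric inputs and equal-width bins derived from the baseline; the function name, bin count, and sample data are assumptions. A commonly cited rule of thumb treats values above roughly 0.25 as a significant shift, but thresholds should be validated per use case.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """Compute PSI between a baseline sample and a current sample.
    Bins are equal-width over the baseline's range; out-of-range
    values are clamped into the first or last bin."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # Floor each proportion so empty bins do not cause log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values: training baseline vs. shifted production data.
baseline = list(range(100))
shifted = [v + 30 for v in baseline]
psi_same = population_stability_index(baseline, baseline)
psi_shift = population_stability_index(baseline, shifted)
print(f"PSI unchanged: {psi_same:.3f}, PSI shifted: {psi_shift:.3f}")
if psi_shift > 0.25:
    print("ALERT: feature distribution has drifted")
```

The same function can run on a schedule for each monitored feature, feeding the automated alerting described in the list.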

AI Ethics, Trust, and Responsible Analytics

As AI becomes embedded in decision-making processes, organizations must address ethical considerations, fairness, and explainability. Responsible analytics requires visibility into how a model arrived at a prediction, what data influenced the outcome, and whether biased patterns could skew decisions. Techniques such as interpretable models, post-hoc explanations, and audits help stakeholders trust AI outputs and ensure they align with business and ethical standards.
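
A basic bias audit of the kind mentioned above can start by comparing how often each group receives a positive decision. The sketch below computes per-group selection rates and the gap between them (often called the demographic parity difference). The group labels and decisions are made-up illustration data, and a single metric like this is only one input to a fairness review, not a verdict.

```python
from collections import defaultdict

def selection_rates(decisions, groups):
    """Return the share of positive decisions (1/True) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical model decisions (1 = approved) for two groups.
decisions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates, gap)
```

A large gap does not by itself prove unfair treatment, but it signals where a deeper review of features and training data is warranted.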

Privacy, security, and compliance are foundational to responsible analytics. Data minimization, access controls, anonymization, and secure data sharing practices protect sensitive information. Regular audits, impact assessments, and clear policy frameworks enable regulators and customers to understand how data is collected, stored, and used. Organizations should also implement risk controls that limit the potential for unintended consequences in automated decision systems.
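
Data minimization and pseudonymization can be sketched as a single transformation that keeps only the fields an analysis needs and replaces the direct identifier with a keyed hash. The field names, the `minimize` helper, and the demo key are assumptions for illustration. Note that a keyed hash is pseudonymization rather than full anonymization: anyone holding the key can recompute tokens, so the key must be managed as a secret.

```python
import hashlib
import hmac

def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed SHA-256 token (hex string)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def minimize(record, allowed_fields, id_field, secret_key):
    """Keep only allowed fields and swap the identifier for a stable token."""
    reduced = {k: v for k, v in record.items() if k in allowed_fields}
    reduced[id_field] = pseudonymize(str(record[id_field]), secret_key)
    return reduced

# Hypothetical key and record; a real key would come from a secrets manager.
SECRET = b"demo-key-not-for-production"
record = {
    "customer_id": 42,
    "email": "ana@example.com",
    "region": "EU",
    "monthly_spend": 49.0,
}
safe = minimize(
    record,
    allowed_fields={"region", "monthly_spend"},
    id_field="customer_id",
    secret_key=SECRET,
)
print(sorted(safe))
```

Because the token is stable for a given key, records for the same customer can still be joined across datasets without exposing the raw identifier.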

Beyond technology, workforce readiness and governance shape the success of AI analytics programs. Clear ownership, ongoing training, and cross-functional collaboration between data engineers, data scientists, and business units ensure that analytics capabilities evolve in step with business needs. A culture that values transparency, continuous improvement, and measurable outcomes helps sustain momentum and ROI over time.

What is AI-driven data analytics?

AI-driven data analytics combines machine learning, statistical methods, and advanced analytics with data management to produce predictive, prescriptive, and actionable insights. It extends traditional reporting by automating pattern discovery, enabling real-time scoring, and delivering decision-support signals that are interpretable and auditable for business users and leaders.

How do AI and analytics platforms differ?

Analytics platforms primarily organize, visualize, and summarize data for human interpretation, while AI-enabled analytics adds automated pattern recognition, ML model orchestration, and inference at scale. AI platforms typically include components for model development, deployment, monitoring, and governance, enabling continuous experimentation and deployment alongside traditional BI capabilities.

What are critical considerations when choosing a cloud analytics platform?

Important factors include data gravity (where data resides), interoperability with existing tools, scalability of compute and storage, security and compliance controls, data governance capabilities, support for AI workflows, and total cost of ownership. Organizations should also assess support for data sharing across boundaries, ease of integration with their ML pipelines, and the availability of managed services to reduce operational overhead.

How can organizations ensure responsible AI in analytics?

Organizations should implement governance frameworks that cover data lineage, model risk management, bias detection, explainability, and impact assessment. Regular audits, transparent instrumentation of features and decisions, and collaboration between data science, legal, and business units help ensure AI-driven insights align with ethical, regulatory, and strategic objectives. Ongoing training and clear accountability further strengthen responsible AI practices.