AI in Robotics: Smarter Robots for Industry and Home

Overview: AI-Driven Robotics in Industry and Home

The convergence of artificial intelligence with robotics is reshaping how organizations design, deploy, and maintain autonomous systems. Robots are shifting from rigid, pre-programmed routines to adaptive behavior that learns from real-world data, tunes performance at run time, and supports collaboration with humans in dynamic environments. In industrial settings, this shift drives higher throughput, shorter cycle times, and greater resilience to variability in materials, processes, and demand. In home and service contexts, AI-enabled robots are moving from novelty devices toward dependable assistants capable of personalized, context-aware interaction and safe operation around people and pets. This transition is accelerating investment in perception, planning, and robust safety mechanisms that make smarter robots both profitable and trustworthy.

At a practical level, modern robotic systems rely on a layered architecture where perception, decision-making, and control operate in concert with domain knowledge, safety constraints, and governance policies. Perception systems translate sensor streams into actionable world models; planning and decision modules choose actions that balance goals such as throughput, quality, safety, and energy efficiency; and low-level controllers execute motions with precision and stability. Across both industry and household applications, the ability to close the loop quickly—from sensing to action to verification—has become a core differentiator for AI-powered robotics. This article explores how vision, planning, and learning are being integrated to produce smarter robots capable of operating with reduced human supervision while maintaining rigorous safety and compliance standards.
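The sensing-to-action-to-verification loop described above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: a one-dimensional robot, a noiseless position sensor, and a step-limited controller. The names `Robot1D`, `sense`, `plan`, `act`, and `run_loop` are hypothetical and do not come from any particular robotics framework.

```python
from dataclasses import dataclass


@dataclass
class Robot1D:
    """Toy robot that moves along a single axis (illustrative only)."""
    position: float = 0.0


def sense(robot: Robot1D) -> float:
    """Perception: read the current position (assumed noiseless here)."""
    return robot.position


def plan(position: float, goal: float, max_step: float = 0.5) -> float:
    """Decision: choose a bounded step toward the goal."""
    error = goal - position
    return max(-max_step, min(max_step, error))


def act(robot: Robot1D, step: float) -> None:
    """Control: apply the commanded step."""
    robot.position += step


def run_loop(robot: Robot1D, goal: float, tolerance: float = 1e-3,
             max_iterations: int = 100) -> int:
    """Repeat sense -> plan -> act until the goal is verified or we give up.

    Returns the number of iterations used.
    """
    for iteration in range(max_iterations):
        position = sense(robot)
        if abs(goal - position) <= tolerance:  # verification step
            return iteration
        act(robot, plan(position, goal))
    return max_iterations


robot = Robot1D()
steps = run_loop(robot, goal=2.0)
print(f"reached {robot.position:.2f} in {steps} iterations")
```

In a real system each function would be a separate module with its own latency budget; the point of the sketch is only that verification sits inside the loop and is not a separate offline step.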

AI Vision and Sensing in Modern Robots

Advances in computer vision, sensor fusion, and scene understanding are enabling robots to interpret complex environments with a level of nuance once reserved for humans. Modern perception stacks combine camera data with depth sensing, tactile signals, LiDAR, and acoustic information to detect objects, identify hazards, estimate material properties, and track the state of a workspace. Robust perception is foundational for reliable manipulation, safe navigation, and adaptive collaboration with human workers. As robots encounter variations in lighting, occlusions, or clutter, probabilistic reasoning and self-learning approaches help maintain performance without frequent reprogramming.

Beyond raw sensing, perception systems benefit from simulation, domain adaptation, and continual learning. Engineers use synthetic data and photo-realistic environments to train models that generalize to real-world variations, while online learning allows a system to adjust to new product variants or seasonal changes in demand. Model-based reasoning, combined with data-driven inference, provides a practical path to explainable decisions and traceable failure modes. Together, these technologies enable industrial robots to recognize skewed or misfed parts, cobots to anticipate human intent, and autonomous agents to understand the broader context of a task before acting.

- Advanced object recognition and pose estimation for complex parts

- Real-time sensor fusion to form coherent environmental maps

- Robust object tracking under occlusion and motion blur

- Adaptive calibration and self-diagnostics for sensing hardware

- Semantic scene understanding to support task planning

- Edge inference for low-latency decision making

- Resilience to changing lighting indoors and to changing lighting and weather outdoors
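As one small illustration of sensor fusion, the sketch below combines two independent estimates of the same distance by inverse-variance weighting, a standard way to let the more precise sensor dominate. It is not a full perception stack. The function name `fuse_measurements` and the sample readings are assumptions made for this example.

```python
def fuse_measurements(readings):
    """Fuse independent estimates of the same quantity.

    `readings` is a list of (value, variance) pairs. Each reading is weighted
    by the inverse of its variance, so more precise sensors count for more.
    Returns (fused_value, fused_variance).
    """
    if not readings:
        raise ValueError("need at least one reading")
    if any(variance <= 0 for _, variance in readings):
        raise ValueError("variances must be positive")

    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_value = sum(
        w * value for w, (value, _) in zip(weights, readings)
    ) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical distance-to-object readings in metres: (value, variance).
camera_depth = (2.10, 0.04)  # noisier sensor
lidar_range = (2.00, 0.01)   # more precise sensor
distance, variance = fuse_measurements([camera_depth, lidar_range])
print(f"fused distance: {distance:.3f} m, variance: {variance:.4f}")
```

The fused variance is smaller than either input variance, which is why adding even a noisy second sensor can tighten the estimate, provided the sensor errors really are independent.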

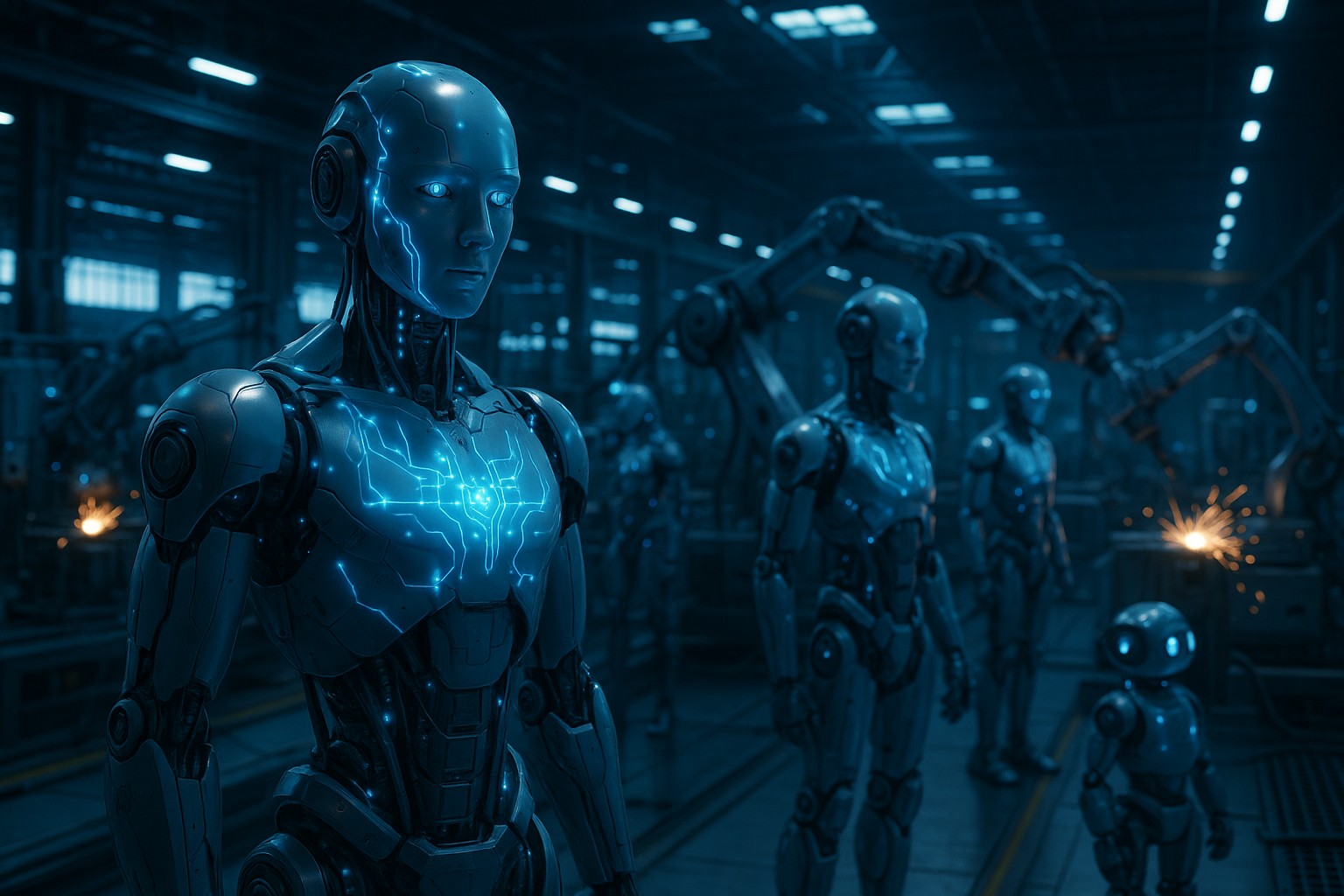

Industrial Robots and Collaborative Cobots: Safe, Flexible Manufacturing

Industrial robots have evolved from isolated, hard-coded machines into components of collaborative workcells that share tasks with human operators. Cobots are designed to adapt to a range of workloads and to operate safely in shared spaces through force sensing and speed and separation monitoring, and they can be programmed intuitively by demonstration. This shift reduces downtime, expands the range of tasks that can be automated, and lowers the barrier to entry for small and mid-size manufacturers seeking agile, reconfigurable production lines. The most successful deployments blend robust perception, reliable control, and clear governance around safety margins, qualifications, and change management.
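To make speed and separation monitoring more concrete, the sketch below computes a simplified protective distance and checks a measured separation against it. The structure loosely follows the protective separation distance idea in ISO/TS 15066, but the terms, the function names, and every number here are simplifying assumptions for illustration, not a compliant safety calculation.

```python
def protective_separation_distance(human_speed, robot_speed, reaction_time,
                                   stopping_time, stopping_distance,
                                   margin=0.1):
    """Minimum human-robot distance (m) below which the robot should stop.

    Simplified model: distance the human covers while the robot reacts and
    stops, plus distance the robot covers while reacting, plus the robot's
    stopping distance, plus a fixed margin for sensing uncertainty.
    """
    human_travel = human_speed * (reaction_time + stopping_time)
    robot_travel = robot_speed * reaction_time
    return human_travel + robot_travel + stopping_distance + margin


def allowed_to_move(measured_distance, **kwargs):
    """True if the measured separation exceeds the protective distance."""
    return measured_distance > protective_separation_distance(**kwargs)


# Assumed example values: speeds in m/s, times in s, distances in m.
params = dict(human_speed=1.6, robot_speed=0.5, reaction_time=0.1,
              stopping_time=0.3, stopping_distance=0.15)
required = protective_separation_distance(**params)
print(f"required separation: {required:.2f} m")
print("move at 1.5 m:", allowed_to_move(1.5, **params))
print("move at 0.8 m:", allowed_to_move(0.8, **params))
```

A production system would run this check in safety-rated hardware and would typically slow the robot as the person approaches instead of switching directly between moving and stopped.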

Deployment in manufacturing now emphasizes modularity and reuse. Off-the-shelf perception suites, standard robot arms, and reusable task modules enable rapid scale-up across lines and facilities. Companies are increasingly implementing digital twins of their production processes, which allow scenario planning, virtual commissioning, and predictive maintenance to reduce risk before physical changes are made. The net effect is a more resilient operation: higher repeatability, faster changeovers, and the ability to re-task lines to shifting demand without the need for large capital projects.

- Design for safe human-robot collaboration, with clear demarcations of shared workspaces and safety-rated controls

- Implement modular, transferable task programs to accelerate reconfiguration for new products

- Use real-time monitoring and analytics to optimize throughput and detect anomalies early

- Apply digital twins and simulation to validate changes before physical implementation
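The monitoring point in the list above can be illustrated with a small rolling z-score check on cycle times. This is a deliberately simple sketch: the function name `find_anomalies`, the window size, the threshold, and the sample data are all assumptions, and real deployments often use more robust statistics.

```python
from statistics import mean, stdev


def find_anomalies(samples, window=5, threshold=3.0):
    """Return indices of samples that deviate strongly from recent history.

    Each sample is compared with the mean and standard deviation of the
    `window` samples before it; a z-score above `threshold` flags it.
    """
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: any change at all is a deviation.
            if samples[i] != mu:
                anomalies.append(i)
            continue
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical cycle times in seconds; the spike at index 7 mimics a jam.
cycle_times = [12.1, 12.0, 12.2, 11.9, 12.1, 12.0, 12.2, 15.5, 12.1, 12.0]
print(find_anomalies(cycle_times))
```

One limitation worth noting: once a spike enters the history window it inflates the standard deviation, so a second anomaly shortly afterward is harder to detect. Median-based statistics are a common way to reduce that effect.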

Autonomy in Drones and Mobile Robots: Fleet-Level Intelligence

Autonomous aerial and ground platforms bring scale and reach to logistics, inspection, agriculture, and public safety. Drone and mobile-robot systems rely on robust localization, mapping, and path planning to operate in uncertain environments, while onboard intelligence supports decision-making even when connectivity is intermittent. In industrial ecosystems, autonomous platforms complement fixed automation by performing routine tasks—such as inventory checks, warehouse transport, or asset inspection—without direct human supervision. The capability to operate safely near people and equipment hinges on reliable perception, stringent safety constraints, and transparent behavior that can be audited by operators and regulators alike.

To realize practical autonomy, organizations combine onboard computation with cloud- or edge-enabled analytics. Edge devices deliver low-latency inference for critical tasks, while centralized systems coordinate fleets, optimize routes, and maintain up-to-date maps and mission libraries. This approach improves utilization of assets, reduces downtime, and enables more flexible responses to supply-chain disruptions or remote inspection requirements. As with other AI-enabled robotics, continuous learning from field data helps these platforms refine navigation strategies, perception models, and fault-detection capabilities over time.

- Autonomous navigation with dynamic obstacle avoidance

- Vision-based localization and precise landing or docking

- Fault-tolerant operation in degraded sensory conditions

- Mission planning and fleet coordination for optimized routing

- Compliance with airspace, safety, and privacy regulations
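Fleet coordination can be approximated, at its simplest, by assigning each task to the nearest free robot. The sketch below does exactly that with straight-line distances. The names `assign_tasks`, `amr-1`, `drone-1`, and the coordinates are hypothetical, and greedy matching is used here only because it is short; it is not guaranteed to minimize total travel.

```python
import math


def assign_tasks(robots, tasks):
    """Greedily assign each task to the nearest robot that is still free.

    `robots` and `tasks` map names to (x, y) positions in metres. Returns a
    dict of task name -> robot name. Tasks left over when robots run out
    are not assigned.
    """
    free = dict(robots)  # copy so the caller's dict is not modified
    assignment = {}
    for task_name, task_pos in tasks.items():
        if not free:
            break
        nearest = min(free, key=lambda name: math.dist(free[name], task_pos))
        assignment[task_name] = nearest
        del free[nearest]
    return assignment


robots = {"amr-1": (0, 0), "amr-2": (10, 0), "drone-1": (5, 5)}
tasks = {"inspect-dock": (9, 1), "scan-aisle-3": (1, 1), "check-roof": (5, 6)}
for task, robot in assign_tasks(robots, tasks).items():
    print(f"{task} -> {robot}")
```

A centralized coordinator would usually replace the straight-line distance with path cost from the shared map and would account for battery level and platform type before committing an assignment.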

AI Planning, Control, and Safety in Robotic Systems

Planning and control form the core logic that translates perception into action. Modern robotic systems use hierarchical planners that reconcile long-term objectives with short-term constraints, ensuring that actions not only accomplish tasks efficiently but also maintain safety and reliability. These planners often combine optimization-based approaches with rule-based policies to handle both routine and exceptional scenarios. The result is a framework capable of adapting to changes in goals, environment, or resource availability without extensive reprogramming.

Safety, governance, and risk management are integral to deploying AI-enabled robotics at scale. Companies implement layered safety models, including sensor-level redundancy, runtime verification, and fail-safe mechanisms that gracefully degrade performance rather than producing unsafe behavior. Transparent decision logs, model versioning, and auditable validation pipelines are increasingly required to satisfy regulatory expectations and customer trust. As robots become more autonomous, the emphasis on traceability, explainability, and robust testing grows correspondingly, ensuring that automated actions can be understood, validated, and trusted by human operators and stakeholders alike.

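// Pseudo-code: action planning loop (JavaScript-style; the helper functions are assumed to be defined elsewhere)
function planAction(state, goals, constraints) {
  // Propose actions that could advance the goals from the current state.
  const candidates = generateCandidateActions(state, goals);

  // Discard anything that violates a safety or feasibility constraint.
  const feasible = filterBySafetyAndFeasibility(candidates, constraints);

  // Nothing safe to do: return null so the caller can fall back to a safe stop.
  if (feasible.length === 0) {
    return null;
  }

  // Score the remaining actions against the goals (scores are assumed to align with feasible by index).
  const scores = evaluateFeasibilityAndImpact(feasible, state, goals);

  // Return the highest-scoring feasible action.
  return selectHighestScore(feasible, scores);
}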
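For readers who want to run the planning loop, here is a small Python version with toy stand-ins for each helper. Everything in it is an assumption made for illustration: actions are candidate speeds, the only constraint is a maximum speed, and the score trades progress against a quadratic energy penalty.

```python
def generate_candidate_actions(state, goals):
    """Toy generator: candidate speeds (m/s) for moving toward the goal."""
    return [0.25, 0.5, 1.0, 1.5]


def filter_by_safety_and_feasibility(candidates, constraints):
    """Keep only actions at or below the allowed speed."""
    return [a for a in candidates if a <= constraints["max_speed"]]


def score_action(action, state, goals):
    """Toy score: reward progress, penalize energy use (quadratic in speed)."""
    progress = min(action, goals["target"] - state["position"])
    return progress - 0.2 * action ** 2


def plan_action(state, goals, constraints):
    """Return the best feasible action, or None if nothing is safe."""
    candidates = generate_candidate_actions(state, goals)
    feasible = filter_by_safety_and_feasibility(candidates, constraints)
    if not feasible:
        return None  # caller should fall back to a safe stop
    return max(feasible, key=lambda a: score_action(a, state, goals))


state = {"position": 0.0}
goals = {"target": 5.0}
print(plan_action(state, goals, {"max_speed": 2.0}))  # open floor
print(plan_action(state, goals, {"max_speed": 1.0}))  # person nearby
print(plan_action(state, goals, {"max_speed": 0.1}))  # nothing feasible
```

Filtering before scoring keeps safety as a hard constraint: an unsafe action can never win on score, however attractive it looks.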

Data Governance, Privacy, and Ethics in Robotics

As robots collect and process increasingly rich data from their environment and users, data governance becomes essential. Organizations must define who owns data, how it is stored, who can access it, and how long it is retained. Privacy considerations are particularly salient in consumer devices and service robots operating in home environments, where sensitive information about routines, preferences, and personal schedules may be captured. Robust anonymization, access controls, and consent mechanisms help protect user privacy while ensuring that data can be leveraged to improve performance and safety.
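A retention limit and identifier protection can be combined in a small preprocessing step before data leaves the device. The sketch below is an illustration only: it uses keyed hashing (pseudonymization), which is a weaker guarantee than full anonymization, and the record fields, the 30-day window, and the function names are assumptions.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed hash so records stay linkable
    without exposing the original identifier."""
    return hmac.new(secret_key, user_id.encode("utf-8"),
                    hashlib.sha256).hexdigest()


def apply_retention(records, now, max_age):
    """Drop records older than `max_age`."""
    return [r for r in records if now - r["timestamp"] <= max_age]


def prepare_for_analytics(records, secret_key, now,
                          max_age=timedelta(days=30)):
    """Apply retention, then pseudonymize IDs and drop free-text fields."""
    kept = apply_retention(records, now, max_age)
    return [
        {
            "user": pseudonymize(r["user_id"], secret_key),
            "timestamp": r["timestamp"],
            "event": r["event"],
        }
        for r in kept
    ]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"user_id": "alice", "timestamp": now - timedelta(days=2),
     "event": "vacuum_started", "note": "guest room"},
    {"user_id": "alice", "timestamp": now - timedelta(days=90),
     "event": "vacuum_started", "note": "kitchen"},
]
# The key is a placeholder; a real key must be generated and stored securely.
cleaned = prepare_for_analytics(records, b"demo-key-not-for-production", now)
print(len(cleaned), "record(s) kept; fields:", sorted(cleaned[0]))
```

Building the output from an explicit list of allowed fields means that any new field added upstream is excluded by default until someone decides it is safe to share.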

Ethics and accountability considerations extend to algorithmic bias, fairness in automated decision-making, and the potential impacts on workers and communities. Establishing governance frameworks, model auditing practices, and clear escalation paths for safety incidents builds trust and reduces risk. Standards and interoperability across vendors—such as common data schemas, communication protocols, and validation methodologies—facilitate safer deployments at scale and help prevent vendor lock-in that can impede long-term strategic planning.

Applications and Roadmap: Industry and Home Adoption

Across industries, AI-driven robotics is enabling new business models and operational capabilities. In manufacturing, smart cobots and autonomous inspection systems reduce cycle times and improve quality by providing consistent, data-driven feedback to operators. In logistics and agriculture, autonomous platforms take over precise, repetitive tasks at scale, freeing human workers for other duties and enabling organizations to respond more quickly to demand fluctuations. In consumer sectors, service robots are becoming more capable at navigating real-world environments, interpreting user intent, and delivering personalized experiences while maintaining safety and privacy standards. The roadmap for robotics in this context emphasizes modular software architectures, scalable cloud-edge orchestration, and continuous learning pipelines that can propagate improvements across fleets of devices.

To realize these benefits, organizations must address change management, system integration, and workforce upskilling. Successful programs align technology adoption with business objectives, establish clear success metrics, and invest in training for operators and maintenance staff. Interoperability standards and vendor collaborations accelerate scaling by reducing integration friction and enabling reusable components, models, and workflows. As AI-enabled robotics mature, disruption will increasingly come from the ability to orchestrate diverse robotic assets—industrial arms, cobots, drones, and household robots—into cohesive, intelligent ecosystems that deliver end-to-end value across processes, products, and services.

FAQ

What distinguishes cobots from traditional industrial robots?

Collaborative robots, or cobots, are designed to work alongside humans in shared spaces with safety features such as power and force limiting (PFL), speed and separation monitoring, and hand guiding. Unlike traditional industrial robots that operate in guarded cells with heavy safety interlocks, cobots emphasize safer human-robot interaction, quicker programming by demonstration, and more adaptable collaboration across tasks. Their software and hardware are typically modular, enabling rapid reconfiguration for new processes without extensive retooling or specialized programming expertise.

How is AI vision maintained under changing lighting or occlusions?

Robust AI vision relies on multi-sensor fusion, data augmentation, and continual learning to handle variability in environments. By combining cameras with depth sensing, LiDAR, tactile signals, and proprioceptive feedback, vision systems maintain situational awareness even when one sensor underperforms. Techniques such as domain adaptation, synthetic data generation, and running lightweight models at the edge help ensure reliable perception with low latency, while confidence estimation and fallback behaviors reduce the impact of uncertain observations on decision-making.
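Confidence estimation with a fallback behavior can be as simple as a threshold on the best available detection. The following sketch assumes each sensor reports a label with a confidence between 0 and 1, or nothing at all. The function name `choose_behavior`, the behavior names, and the 0.8 threshold are hypothetical choices for this example.

```python
def choose_behavior(detections, min_confidence=0.8):
    """Pick a behavior from per-sensor detections.

    `detections` maps a sensor name to (label, confidence in [0, 1]), or to
    None if that sensor produced nothing. The most confident detection is
    used if it clears `min_confidence`; otherwise the robot falls back to a
    cautious behavior.
    """
    valid = {name: d for name, d in detections.items() if d is not None}
    if not valid:
        return ("stop", None)  # no usable perception at all
    _, (label, confidence) = max(valid.items(), key=lambda item: item[1][1])
    if confidence >= min_confidence:
        return ("proceed", label)
    return ("slow_and_rescan", label)


# Camera degraded by glare, LiDAR still confident.
print(choose_behavior({"camera": ("pallet", 0.55), "lidar": ("pallet", 0.91)}))
# Only a weak camera detection is available.
print(choose_behavior({"camera": ("pallet", 0.40), "lidar": None}))
# Every sensor has dropped out.
print(choose_behavior({"camera": None, "lidar": None}))
```

Taking the single most confident sensor is the crudest possible policy; it is shown here only to make the fallback path explicit, and a fused estimate would normally replace it.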

What are the primary safety concerns when deploying autonomous robots in workplace settings?

Key concerns include ensuring safe interaction with humans and equipment, maintaining reliable fault detection and recovery, and providing auditable decision logs. Safety strategies typically involve layered control architectures, redundant sensing, formal verification of critical behaviors, and rigorous training and validation cycles. Compliance with applicable standards and ongoing monitoring are essential to sustain safe operation as tasks, environments, and regulatory requirements evolve.