Challenges of Microservices Adoption and How to Overcome Them

Introduction: Why organizations consider microservices and what success looks like

Organizations increasingly pursue microservices to align technology with fast-moving business needs. The promise of independent deployments, technology diversity, and scalable teams can unlock faster delivery, better resilience, and clearer ownership of service boundaries. Yet the same architecture that enables these benefits also introduces new failure modes and operational complexity. In debates about microservices vs monolith, the trade-offs become apparent: you gain agility and fault containment at the cost of increased coordination, more sophisticated testing, and a shift in how you think about data, security, and governance.

To set realistic expectations, it helps to define success in concrete terms: measurable improvements in release cadence, reliability, and time-to-detect issues; the ability to scale specific business capabilities without reworking the entire system; and a clear path for teams to own the services they build. This article explores common challenges organizations encounter as they adopt microservices, and offers practical strategies to overcome them while keeping a strong business focus and a disciplined engineering mindset.

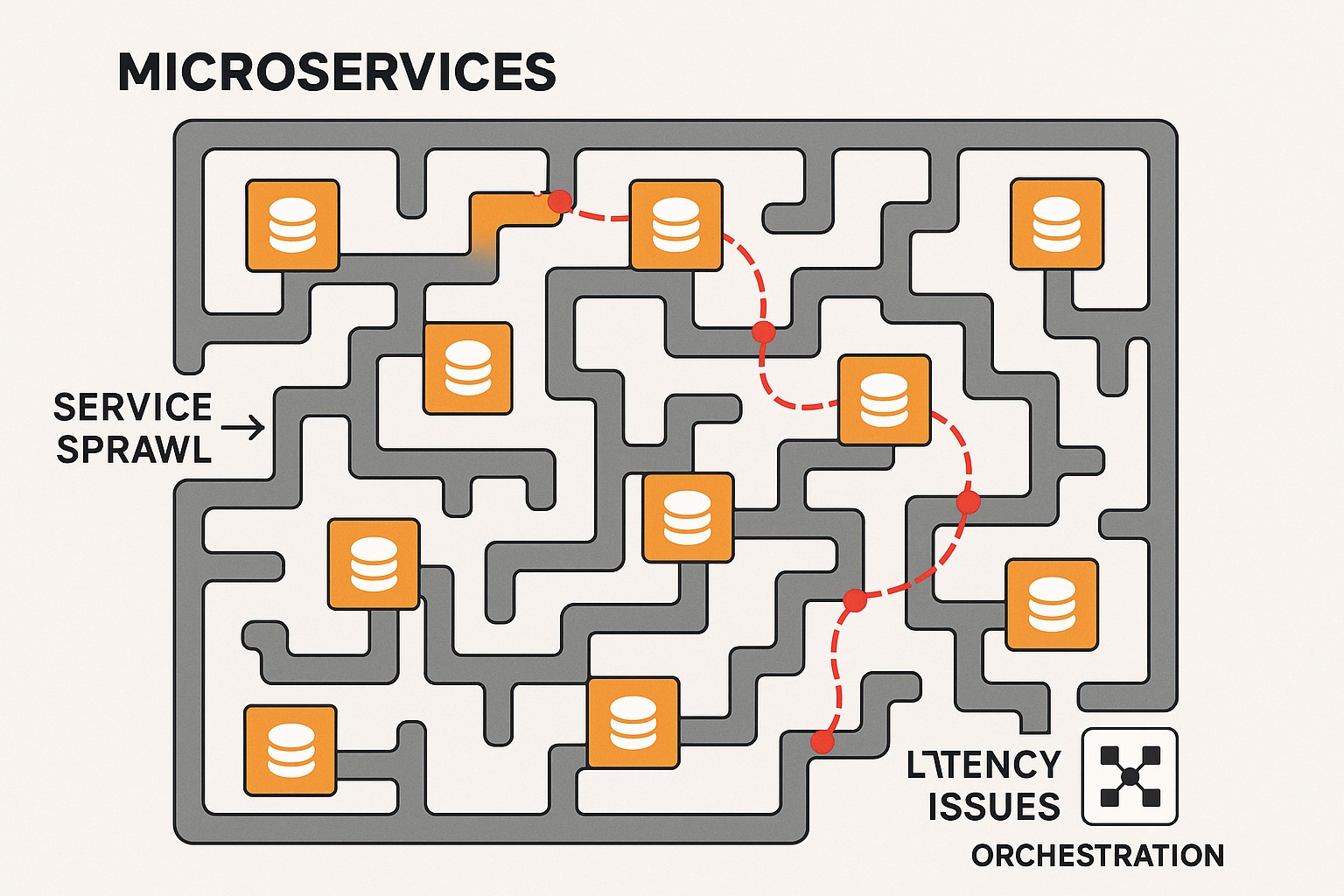

Common challenges organizations face during adoption

Many of the challenges stem from the fundamental shift from a centralized, tightly coupled architecture to a distributed, autonomous set of services. The same distributed nature that enables scalability and independent evolution also expands the surface area for failure, introduces coordination overhead, and complicates data governance. When teams start with a few services, the benefits can be compelling, but as the system grows, complexity compounds quickly.

To illustrate the landscape, consider the core challenges that frequently arise during the transition. Aligning around clear boundaries, ensuring reliable inter-service communication, and maintaining visibility across a distributed system all require deliberate design and ongoing discipline. In microservices vs monolith decisions, the right balance is often achieved not by chasing technical gains alone, but by aligning organizational structure, development processes, and platform capabilities with strategic business goals.

- Increased system complexity and distributed topology that complicates reasoning about end-to-end behavior.

- Data management and consistency across services, which can lead to eventual consistency requirements and additional coordination mechanisms.

- Operational overhead, including deployment, monitoring, tracing, and incident response for a broader set of services.

- Distributed debugging and root-cause analysis across multiple services, environments, and data stores.

- Security and compliance considerations in a landscape with more network boundaries, service contracts, and access controls.

- Organizational alignment and governance, ensuring teams own clear service boundaries and avoid fragmentation.
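One way to make the distributed debugging challenge in the list above more tractable is to tag every log line with an identifier that travels with the request from service to service. The sketch below is a minimal illustration, not a full tracing setup: the `X-Correlation-ID` header name, the `build_logger` helper, and the `orders` logger name are assumptions chosen for the example.

```python
import contextvars
import logging
import uuid

# Holds the correlation ID of the request currently being handled.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationFilter(logging.Filter):
    """Attach the current correlation ID to every log record."""

    def filter(self, record):
        record.correlation_id = correlation_id.get()
        return True


def build_logger(name):
    """Create a logger whose output includes the correlation ID (hypothetical helper)."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    handler.setFormatter(
        logging.Formatter("%(name)s [%(correlation_id)s] %(message)s")
    )
    handler.addFilter(CorrelationFilter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


def incoming_request(headers):
    """Reuse the caller's ID if present, otherwise start a new one."""
    cid = headers.get("X-Correlation-ID") or uuid.uuid4().hex
    correlation_id.set(cid)
    return cid


def outgoing_headers():
    """Headers to attach to any downstream call made while handling the request."""
    return {"X-Correlation-ID": correlation_id.get()}


log = build_logger("orders")
incoming_request({"X-Correlation-ID": "abc123"})
log.info("reserving stock")  # logs: orders [abc123] reserving stock
```

With every service following the same convention, a single search for one ID in centralized logs returns the full path of a request across services.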

Technical challenges in depth

From a technical standpoint, the move to microservices changes how you approach deployment, observability, and resilience. You must design for partial failures, ensure reliable inter-service communication, and implement robust data access patterns that respect service boundaries. Without the right foundations, teams can spend more time firefighting infrastructure than delivering business value.
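Designing for partial failure usually means a caller should stop waiting on a dependency that is clearly unhealthy. A circuit breaker is one common way to do that. The following is a simplified, single-threaded sketch; the class name, thresholds, and the injectable `clock` parameter are illustrative assumptions, and a production version would also need thread safety and metrics.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is short-circuited instead of reaching the dependency."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock  # injectable so the behavior can be tested without sleeping
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("dependency unavailable, failing fast")
            # Timeout elapsed: let one trial call through (half-open state).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures, (re)opens the circuit.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

A caller would wrap each remote call, for example `breaker.call(fetch_inventory, sku)` with a hypothetical `fetch_inventory` function, and treat `CircuitOpenError` as a signal to fall back to a cached or default response.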

Key technical considerations include the need for consistent service contracts, standardized communication protocols, and a unified approach to observability. Building a framework that supports end-to-end tracing, centralized logging, and correlated metrics across services helps diagnose issues quickly and reduces mean time to recovery. The following command probes a health endpoint through the gateway, a basic building block for health-based routing and deployment decisions:

curl -s http://gateway.example.com/api/health -H "Accept: application/json"

Organizational and process challenges

Beyond technology, successful microservices adoption relies on aligning people, teams, and processes with architectural intent. Organizational silos can impede the independence that services require, and governance mechanisms that are too heavy-handed can slow down delivery. Conversely, too little governance risks inconsistent design choices and duplicated efforts. Establishing the right balance—clear ownership, measurable expectations, and lightweight, repeatable processes—helps teams move faster while maintaining quality at scale.

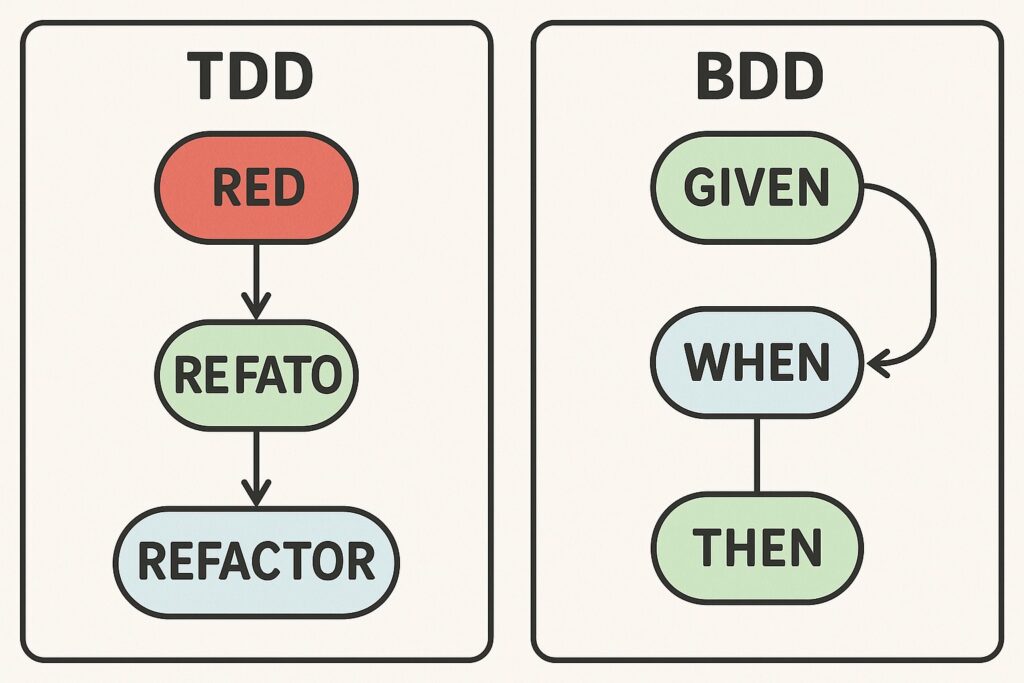

Process changes often accompany the technical transition. Techniques such as domain-driven design, bounded contexts, contract testing, and automation of build, test, and deployment pipelines help create a reproducible rhythm for teams. It is important to invest in onboarding, playbooks for incident response, and forums for cross-team collaboration so everyone can align on contracts, versioning, and interoperability.

Strategies to overcome challenges

Overcoming these pain points requires a combination of architectural guidance, disciplined practices, and platform capabilities. A strategic focus on well-bounded service ownership, robust testing, and automation reduces risk and accelerates learning. The following approach emphasizes both design and execution to create a path toward reliable, scalable microservices without sacrificing business outcomes.

Key strategies include establishing bounded contexts and explicit service contracts, investing in automated CI/CD and testing infrastructure, deploying a capable observability stack, and ensuring leadership sponsorship and alignment across business and technology teams. The goal is to create an environment where teams can iterate quickly while maintaining confidence in system behavior and data integrity.

- Adopt domain-driven design with clearly defined bounded contexts to minimize cross-service coupling and clarify ownership.

- Implement contract testing and consumer-driven contracts to ensure service contracts remain stable as teams evolve.

- Invest in automation for build, test, deployment, and rollback to shorten cycle times and reduce human error.
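To make the contract testing item above concrete, here is a minimal sketch of a consumer-driven check: the consumer publishes the fields and types it relies on, and the provider verifies its responses against that expectation in its own test suite. The `BILLING_CONTRACT` name and the order fields are hypothetical, and dedicated contract testing tools cover far more (HTTP status codes, headers, provider states) than this type check does.

```python
# Hypothetical contract published by a billing team that consumes an orders API:
# only the fields it actually reads, with the types it expects.
BILLING_CONTRACT = {
    "order_id": str,
    "total_cents": int,
    "currency": str,
}


def contract_violations(response, contract):
    """Return human-readable violations; an empty list means the response is compatible."""
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(response[field]).__name__}"
            )
    return problems


# Extra fields are allowed, so the provider can add data without breaking consumers.
compatible = {"order_id": "o-1", "total_cents": 1250, "currency": "EUR", "note": "gift"}
print(contract_violations(compatible, BILLING_CONTRACT))  # []

# Changing a type or dropping a field is caught before deployment.
breaking = {"order_id": "o-1", "total_cents": "12.50"}
print(contract_violations(breaking, BILLING_CONTRACT))
```

Running such a check in the provider's pipeline, once per consumer contract, is what lets teams change a service independently while knowing which consumers a change would break.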

Architectural patterns and platform considerations

A successful microservices implementation often relies on patterns that address inter-service communication, data governance, and deployment strategy. An API gateway can provide a single entry point, while a service mesh can manage policy, routing, and telemetry at the network level. Event-driven patterns help decouple producers and consumers, improving resilience and scalability, but they require careful handling of event schemas, versioning, and eventual consistency guarantees. Choosing the right mix of patterns depends on business requirements, team capabilities, and risk tolerance.

Platform decisions—such as containerization, orchestration, and the ecosystem of tooling—shape both reliability and speed. A consistent platform layer reduces cognitive load on engineering teams and accelerates adoption. It is important to establish standards for packaging, environment parity, secret management, and access controls to avoid drift and fragmentation across services.

Data management and consistency in microservices

Data ownership and consistency are among the most persistent challenges in distributed architectures. Each microservice typically manages its own data store, which improves autonomy but complicates cross-service queries and transactions. Employing patterns such as eventual consistency, sagas, and publish/subscribe event channels can help maintain data coherence without distributed two-phase commits. Teams should design for idempotent operations and establish clear data governance policies to prevent conflict and data loss in failure scenarios.
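The saga pattern mentioned above can be sketched as a list of steps, each paired with a compensating action that undoes it. The example below is an in-process illustration only: the step names are hypothetical, and a real saga would persist its progress and call other services over the network, which is why compensations should be idempotent and safe to retry.

```python
class SagaFailed(Exception):
    """Raised after a step fails and completed steps have been compensated."""


def run_saga(steps, context):
    """Run (action, compensation) pairs in order; on failure, undo completed steps in reverse."""
    completed = []
    for action, compensation in steps:
        try:
            action(context)
        except Exception as exc:
            for undo in reversed(completed):
                undo(context)
            raise SagaFailed(f"{action.__name__} failed: {exc}") from exc
        completed.append(compensation)


# Hypothetical steps for placing an order across two services.
def reserve_stock(ctx):
    ctx["log"].append("stock reserved")


def release_stock(ctx):
    ctx["log"].append("stock released")


def charge_payment(ctx):
    raise RuntimeError("card declined")


def refund_payment(ctx):
    ctx["log"].append("payment refunded")


order = {"log": []}
try:
    run_saga([(reserve_stock, release_stock), (charge_payment, refund_payment)], order)
except SagaFailed as err:
    print(err)  # charge_payment failed: card declined
print(order["log"])  # ['stock reserved', 'stock released']
```

Note that the payment is never refunded here, because the charge itself never succeeded; only steps that completed are compensated.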

Change data capture, event streaming, and standardized data contracts provide a foundation for reliable data exchange across services. Teams must also plan for migrations and schema evolution in a way that minimizes impact on consumers. The result is a data landscape that supports independent service development while preserving the integrity of business processes that span multiple services.
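One way to evolve a schema without forcing every consumer to change at once is to normalize older event versions at the point of consumption, sometimes called upcasting. The sketch below assumes a hypothetical `CustomerCreated` event whose first version carried a single `name` field and whose second version splits it; the field names and the `schema_version` key are illustrative, not taken from any specific system.

```python
def upcast_customer_event(event):
    """Normalize any known version of a hypothetical CustomerCreated event to version 2."""
    # Events published before versioning was introduced are treated as version 1.
    version = event.get("schema_version", 1)
    if version == 1:
        # v1 carried a single "name" field; v2 splits it at the first space.
        first, _, last = event["name"].partition(" ")
        return {
            "schema_version": 2,
            "customer_id": event["customer_id"],
            "first_name": first,
            "last_name": last,
        }
    if version == 2:
        return event
    # Fail loudly on versions this consumer has not been taught to read.
    raise ValueError(f"unsupported schema_version: {version}")


old_event = {"customer_id": "c-7", "name": "Ada Lovelace"}
print(upcast_customer_event(old_event))
```

The rest of the consumer then only ever handles the latest shape, and producers can migrate on their own schedule.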

Measuring success and avoiding common pitfalls

Organizations should define a pragmatic set of metrics that reflect both business outcomes and engineering health. Metrics such as deployment frequency, lead time for changes, error rates, and mean time to recovery help quantify progress and highlight areas for improvement. Equally important are architecture and process indicators—service boundary clarity, contract stability, and the effectiveness of your observability and incident response practices.
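The delivery metrics named above are simple to compute once deployment and incident timestamps are recorded. The following sketch shows one possible set of definitions; the function names and the sample timestamps are made up for illustration, and teams often differ on details such as whether lead time starts at commit or at merge.

```python
from datetime import datetime, timedelta
from statistics import median


def deployment_frequency(deploy_times, window_days):
    """Average number of deployments per day over the observation window."""
    return len(deploy_times) / window_days


def median_lead_time(changes):
    """Median time from commit to production; changes is a list of (committed_at, deployed_at)."""
    return median(deployed - committed for committed, deployed in changes)


def mean_time_to_recovery(incidents):
    """Mean time from detection to resolution; incidents is a list of (detected_at, resolved_at)."""
    total = sum((resolved - detected for detected, resolved in incidents), timedelta())
    return total / len(incidents)


# Illustrative sample data, not real measurements.
start = datetime(2024, 1, 1, 9, 0)
deploys = [start + timedelta(days=d) for d in (0, 1, 3, 6)]
changes = [
    (start, start + timedelta(hours=2)),
    (start, start + timedelta(hours=6)),
    (start, start + timedelta(hours=4)),
]
incidents = [
    (start, start + timedelta(minutes=30)),
    (start, start + timedelta(minutes=90)),
]

print(deployment_frequency(deploys, window_days=7))
print(median_lead_time(changes))         # 4:00:00
print(mean_time_to_recovery(incidents))  # 1:00:00
```

Tracking these per service rather than only in aggregate helps show which teams benefit from the new architecture and which are still blocked by shared dependencies.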

Common pitfalls include underestimating the need for standardized tooling, neglecting data governance, and failing to invest in people and culture alongside technology. Regular reviews, blameless postmortems, and ongoing training help teams learn from incidents and continuously improve. The right balance of speed and discipline helps organizations avoid the cost of churn and ensures long-term stability as the system grows.

FAQ

What is the biggest challenge when migrating from a monolith to microservices?

The most significant challenge is achieving clear service boundaries and building the organizational discipline to own those boundaries. Without well-defined domains, teams may create overlapping services, duplicate functionality, or introduce tight coupling that undermines independence. Establishing bounded contexts, agreement on contracts, and governance that enables teams to move fast without stepping on each other is essential.

How can organizations manage data consistency across services?

Managing data consistency requires embracing eventual consistency where appropriate, implementing reliable event streams, and using sagas, whether orchestrated centrally or choreographed through events, to coordinate multi-service workflows. Each service should own its data model, with explicit contracts and backward-compatible changes. Automating data migrations and ensuring observability into data flows help keep cross-service consistency manageable while maintaining autonomy.

What role does organizational structure play in adoption?

Organizational structure should support autonomous teams aligned around bounded contexts. This means cross-functional teams with clear ownership of individual services, supported by lightweight governance, shared standards, and a platform team that provides common tooling and capabilities. When teams are organized to minimize handoffs and dependencies, delivery cadence improves and the likelihood of design drift decreases.

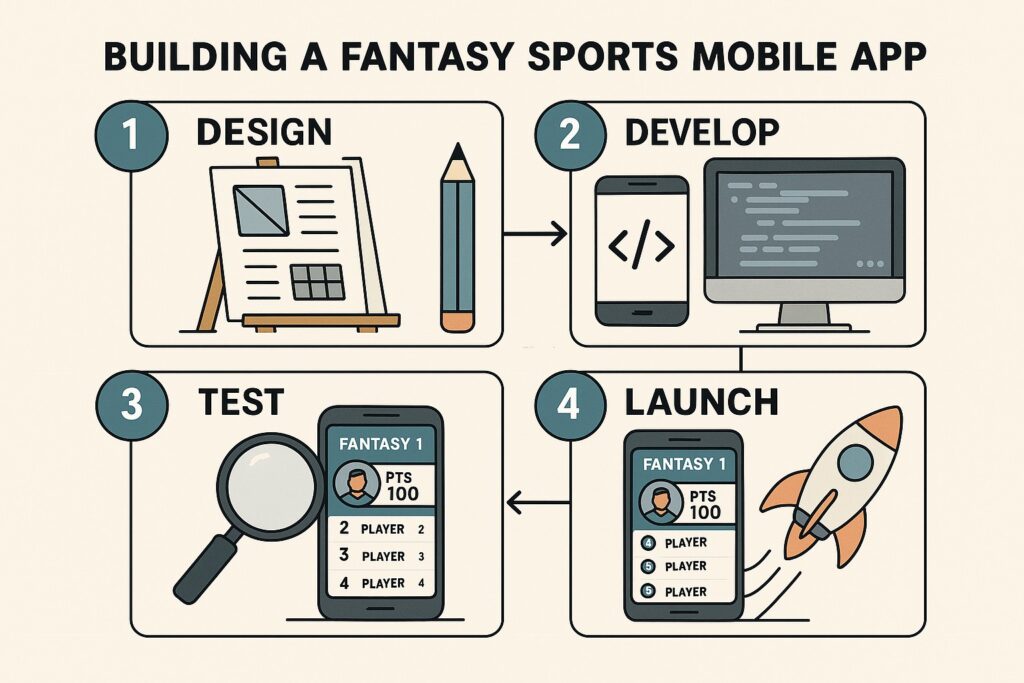

What should be included in a successful pilot project?

A successful pilot states its learning goals up front, demonstrates end-to-end value, and produces measurable outcomes. Include a small but representative set of services with clear boundaries, automated pipelines, comprehensive monitoring, and a plan for data migration. Focus on delivering a specific business capability end-to-end, and capture lessons to guide broader rollout and platform enhancements.

How do you measure success with microservices?

Success is best measured through a combination of business and technical metrics. Track release velocity, time-to-market for features, and reliability indicators like service availability and incident resolution time. Pair these with architecture health indicators—contract stability, observable traces, and consistent deployment patterns—to ensure that speed does not come at the expense of quality or maintainability.