Data Processing vs Data Analysis vs Data Science: Differences Explained

What is Data Processing?

Data processing refers to the end-to-end set of operations that turn raw data into a structured, usable form. It encompasses collecting data from source systems, validating quality, cleaning noise, transforming formats and units, and loading it into a repository where it can be reliably queried. In modern organizations, data processing is often implemented as ETL or ELT pipelines and sits at the core of data governance and operational reporting. The emphasis is on making data accessible, consistent, and timely so downstream activities can proceed with confidence.

While data processing is sometimes described as a technical backbone, its value is measured in speed, reliability, and governance. A well-designed processing layer reduces duplication, minimizes errors, and ensures that downstream analysis and modeling are not bottlenecked by messy data. Common tasks include deduplicating records, standardizing date formats, reconciling schemas across systems, and enforcing data quality checks before data lands in a data warehouse or data lake.

- Data extraction from source systems (ETL/ELT)

- Data cleansing and quality checks

- Data transformation and normalization

- Data loading and orchestration into storage and marts
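The tasks listed above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not a production pipeline: the records, field names (`customer_id`, `signup_date`, `email`), and the two accepted date formats are all assumptions made up for the example.

```python
from datetime import datetime

# Hypothetical raw records, as they might arrive from two source systems.
RAW_ROWS = [
    {"customer_id": "17", "signup_date": "2024-03-01", "email": "a@example.com"},
    {"customer_id": "17", "signup_date": "01/03/2024", "email": "a@example.com"},
    {"customer_id": "42", "signup_date": "15/04/2024", "email": ""},
    {"customer_id": "", "signup_date": "2024-05-09", "email": "c@example.com"},
]

# Assumed source formats; a real pipeline would document these per system.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def standardize_date(value):
    """Return the date as ISO 8601 (YYYY-MM-DD), or None if no format matches."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def process(rows):
    """Clean, validate, and deduplicate rows; return (clean_rows, rejected_rows)."""
    clean, rejected, seen = [], [], set()
    for row in rows:
        record = {
            "customer_id": row["customer_id"].strip(),
            "signup_date": standardize_date(row["signup_date"].strip()),
            "email": row["email"].strip().lower() or None,
        }
        # Quality check: a record needs a key and a parseable date.
        if not record["customer_id"] or record["signup_date"] is None:
            rejected.append(row)
            continue
        # Deduplicate on the normalized key and standardized date.
        key = (record["customer_id"], record["signup_date"])
        if key in seen:
            continue
        seen.add(key)
        clean.append(record)
    return clean, rejected


clean_rows, rejected_rows = process(RAW_ROWS)
```

Keeping rejected rows in a separate list, rather than silently dropping them, is one simple way to make quality failures visible before data lands in a warehouse or lake.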

What is Data Analysis?

Data analysis is the practice of inspecting processed data to answer business questions and guide decisions. It sits closer to decision-making than raw processing, translating numbers into stories through statistics, summaries, visualizations, and structured inquiry. Analysts seek to understand what happened, when it happened, and why, using a mix of quantitative methods and domain knowledge to interpret patterns.

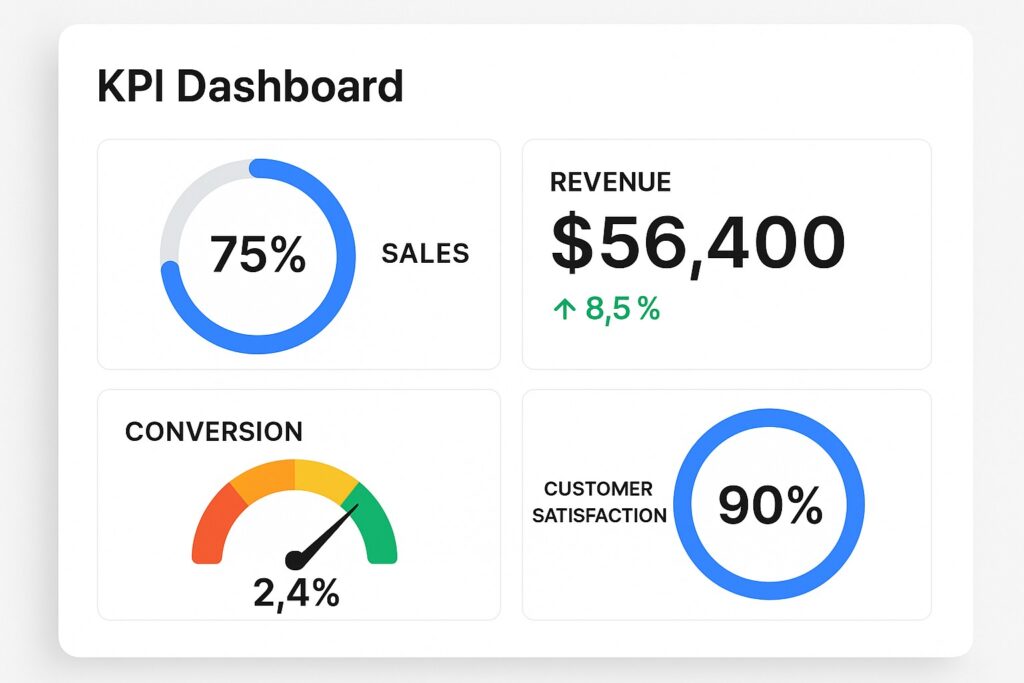

Practically, data analysis often produces descriptive and diagnostic outputs that stakeholders can act on. It relies on repeatable queries and dashboards, but it also invites exploratory work to uncover surprising relationships or anomalies. Typical activities include calculating key performance indicators, segmenting customers, testing whether observed differences are statistically meaningful, and communicating findings in a way that business leaders can act on.
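As a rough illustration of KPI calculation and customer segmentation, the sketch below aggregates order records per segment using only the Python standard library. The records, the `segment` labels, and the chosen KPIs (order count, revenue, average order value, distinct customers) are assumptions for the example.

```python
from collections import defaultdict

# Hypothetical processed order records; field names are illustrative.
orders = [
    {"customer_id": "c1", "segment": "new", "amount": 40.0},
    {"customer_id": "c2", "segment": "new", "amount": 60.0},
    {"customer_id": "c3", "segment": "returning", "amount": 120.0},
    {"customer_id": "c3", "segment": "returning", "amount": 80.0},
    {"customer_id": "c4", "segment": "returning", "amount": 100.0},
]


def kpis_by_segment(rows):
    """Return order count, revenue, average order value, and customers per segment."""
    totals = defaultdict(lambda: {"orders": 0, "revenue": 0.0, "customers": set()})
    for row in rows:
        bucket = totals[row["segment"]]
        bucket["orders"] += 1
        bucket["revenue"] += row["amount"]
        bucket["customers"].add(row["customer_id"])
    return {
        segment: {
            "orders": b["orders"],
            "revenue": b["revenue"],
            "avg_order_value": b["revenue"] / b["orders"],
            "customers": len(b["customers"]),
        }
        for segment, b in totals.items()
    }


report = kpis_by_segment(orders)
```

In practice the same aggregation is often written as a SQL `GROUP BY` query behind a dashboard; the Python version simply makes the logic explicit.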

The techniques most commonly used in data analysis fall into four areas: descriptive statistics, data visualization, hypothesis testing, and simple predictive relationships that gauge the likely impact of different decisions. Applied consistently, they keep insights both actionable and reproducible.

- Descriptive statistics and summaries

- Data visualization and dashboards

- Hypothesis testing and inference

- Correlation and simple predictive relationships
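Three of these techniques can be sketched with the standard library alone: a descriptive summary, a Pearson correlation, and a permutation test for a difference in means. The segment values and the spend/orders series are hypothetical, and the permutation test is just one reasonable choice of significance test, picked here because it needs no external packages.

```python
import random
import statistics

# Hypothetical order values for two customer segments.
segment_a = [20.0, 22.0, 19.0, 24.0, 21.0, 23.0]
segment_b = [25.0, 27.0, 24.0, 29.0, 26.0, 28.0]


def describe(values):
    """Descriptive summary: count, mean, median, sample standard deviation."""
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
    }


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def permutation_p_value(a, b, trials=5000, seed=0):
    """Two-sided permutation test for a difference in means."""
    rng = random.Random(seed)
    observed = abs(statistics.mean(a) - statistics.mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(trials):
        # Reassign group labels at random and recompute the difference.
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:len(a)]) - statistics.mean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    return hits / trials


summary_a = describe(segment_a)
summary_b = describe(segment_b)
p_value = permutation_p_value(segment_a, segment_b)

# Hypothetical weekly ad spend versus orders, to illustrate correlation.
r = pearson([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
```

A small p-value suggests the gap between segments is unlikely to be chance alone; it does not by itself explain why the gap exists, which is where domain knowledge comes in.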

What is Data Science?

Data science extends beyond analysis by combining domain knowledge, advanced modeling, and experimentation to produce predictive and prescriptive insights. It encompasses the design and evaluation of models, feature engineering, and systematic experimentation to understand what could happen under different scenarios. The goal is to turn data-driven insights into deployable answers that influence decisions and, in some cases, automate actions within business processes.

Data science is characterized by an emphasis on modeling, validation, and iteration. It often requires collaboration with product, engineering, and domain experts to ensure models align with reality, scale appropriately, and remain auditable. Deliverables commonly include predictive scores, segmentation models, recommendations, and, when appropriate, end-to-end data products that integrate into interactive applications or services.
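The modeling and validation loop can be illustrated with a deliberately small example: fit a model on one part of the data and measure its error on a held-out part. The sketch below uses synthetic data and a one-variable least-squares line purely for illustration; the 80/20 split, the noise level, and the choice of mean absolute error are assumptions, and real projects would typically use a dedicated library.

```python
import random

# Synthetic data for illustration only: y is roughly 3*x + 2 plus noise.
rng = random.Random(42)
data = [(x, 3.0 * x + 2.0 + rng.gauss(0, 1.0)) for x in range(100)]

# Hold out 20% of the rows so the model is evaluated on data it has not seen.
rng.shuffle(data)
split = int(len(data) * 0.8)
train, test = data[:split], data[split:]


def fit_line(points):
    """Ordinary least squares fit for y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_absolute_error(points, slope, intercept):
    """Average absolute gap between observed and predicted y values."""
    return sum(abs(y - (slope * x + intercept)) for x, y in points) / len(points)


slope, intercept = fit_line(train)
train_mae = mean_absolute_error(train, slope, intercept)
test_mae = mean_absolute_error(test, slope, intercept)
```

Comparing `train_mae` with `test_mae` is the iteration signal: a test error far above the training error hints that the model has fit noise rather than structure.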

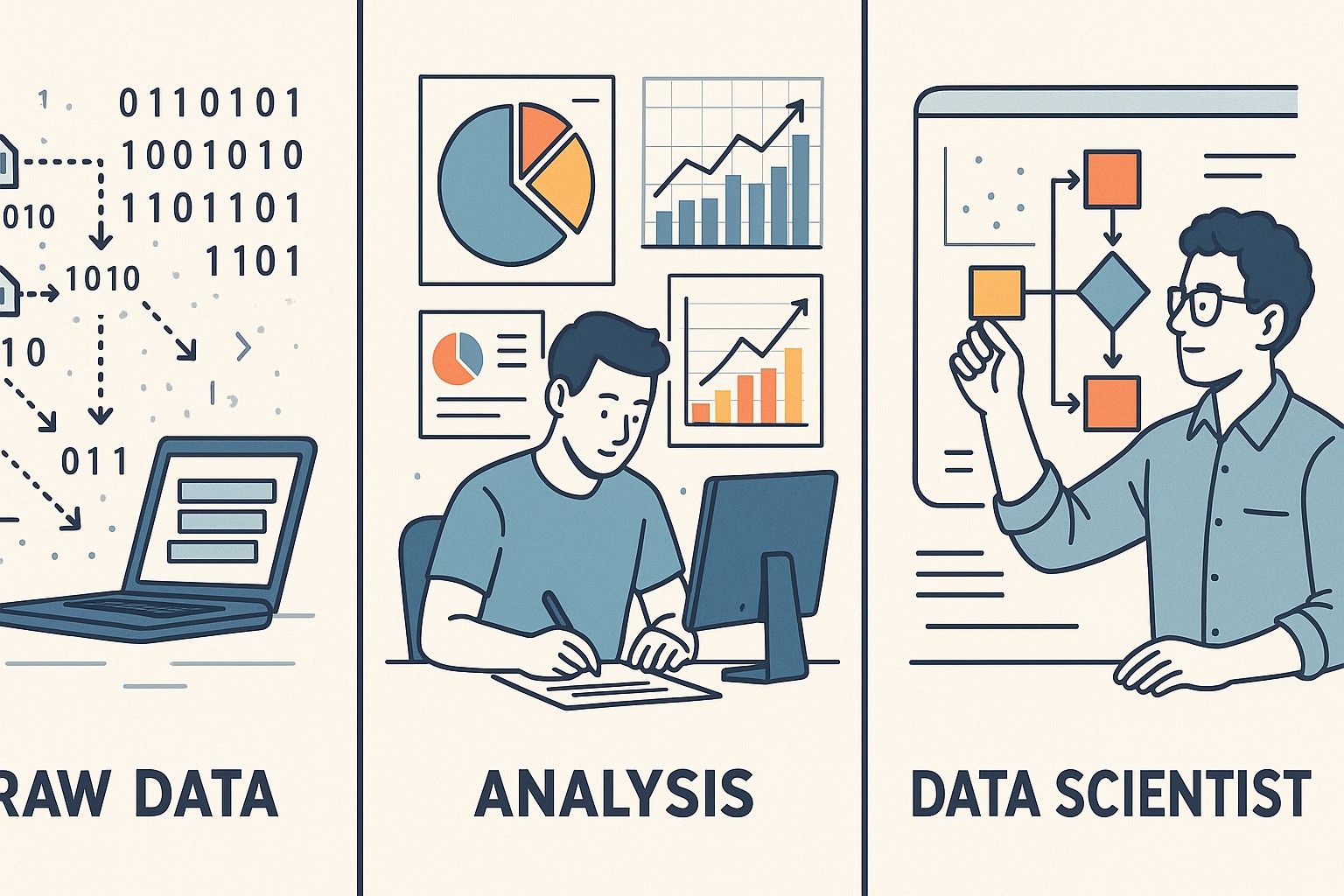

How these domains relate in practice

In practice, data processing provides the reliable foundation on which analysis and data science build. Clean, well-governed data enables analysts to generate consistent insights, and it also supports the more complex experiments and model-building that data scientists perform. The boundaries between these domains are not always rigid: teams often share data, tooling, and even people who contribute across stages of a workflow. The most successful organizations design clear interfaces and governance so that data products can move from raw collection to analysis to production without rework or added risk.

From a workflow perspective, a typical lifecycle begins with a focus on data processing to ensure data quality and availability, followed by data analysis to uncover what the data reveals about current performance, and then data science to explore predictive or automated capabilities that can scale decisions. The business value emerges when insights are translated into repeatable data products and processes, with monitoring, governance, and feedback loops that guide ongoing improvement.

Roles, skills, and toolsets

Organizations that succeed in using data across processing, analysis, and science tend to organize around capability rather than a single job title. The data engineer often focuses on the plumbing: building robust data pipelines, ensuring data quality, and maintaining scalable storage. The data analyst concentrates on translating data into actionable insights through statistics, visualization, and narrative storytelling. The data scientist experiments with advanced modeling, validates results, and collaborates with product teams to deploy models into production. Each role requires a mix of domain knowledge, technical proficiency, and governance awareness to ensure data remains trustworthy and usable at scale.

To support these roles, teams typically rely on a common set of capabilities and tools that bridge disciplines. The following skill areas are central across the three domains:

- Data engineering: SQL, data modeling, ETL/ELT design, data quality, orchestration tools

- Data analysis: statistics, exploratory data analysis, data visualization, storytelling

- Data science: machine learning, experimentation design, model evaluation, deployment concepts

- Data governance and security: lineage, provenance, access controls, compliance considerations

- Domain knowledge: business context, processes, and decision workflows

- Collaboration and project management: cross-functional teamwork, stakeholder communication

A practical decision framework for your organization

Choosing the right mix of processing, analysis, and science depends on factors such as data maturity, scale, business risk, and the desired speed of decision-making. A practical framework starts with clarity on business goals, followed by an assessment of data quality and availability, and then a plan for governance and ownership. Organizations with more mature data capabilities typically separate concerns more cleanly, creating dedicated pipelines, analytics teams, and data science units, while smaller teams may combine functions and rely on strong data governance to avoid silos. The following framework can help guide prioritization and resourcing decisions.

| Domain | Primary focus | Typical outputs | Key skills/tools |
|---|---|---|---|
| Data Processing | Data preparation and governance | Cleaned data sets, ready for analysis | SQL, ETL/ELT, data modeling, data quality |
| Data Analysis | Insight generation and interpretation | Reports, dashboards, statistical summaries | Statistics, data visualization, SQL |
| Data Science | Modeling and automation | Predictions, recommendations, deployed models | Machine learning, experimentation, Python/R, cloud ML |

What is the primary difference between data processing and data analysis?

Data processing is the engineering work of turning raw data into a clean, consistent, and accessible form, focusing on pipelines, quality, and governance. Data analysis, by contrast, concentrates on extracting meaning from that processed data through statistics, visualization, and interpretation, with the aim of supporting decision-making.

How does data science relate to data analysis?

Data science builds on data analysis by incorporating advanced modeling, experimentation, and production-grade deployment. It seeks to forecast outcomes, automate decisions, and provide prescriptive insights, which often requires closer collaboration with engineering and product teams to bring models into live use.

When should an organization separate these functions rather than combine them in a single team?

The decision depends on data maturity, governance needs, and business scale. Larger organizations with complex data ecosystems typically benefit from dedicated roles and pipelines for processing, analysis, and science to maintain accountability and quality. Smaller teams may combine roles if they implement strong standards, clear interfaces, and shared tools to avoid bottlenecks and misalignment.

What are common pitfalls to avoid when building data pipelines and models?

Common pitfalls include neglecting data quality and lineage, allowing data leakage between training and testing sets, overfitting models to historical data, and failing to monitor models after deployment. Security, privacy, and governance gaps can also undermine trust and compliance, so explicit ownership and auditability are essential.
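Data leakage is easy to introduce in preprocessing. As a hedged sketch, the example below contrasts fitting a standardization step on all rows (leaky) with fitting it on the training rows only. The feature values and the time-based split are hypothetical, and standardization stands in for any preprocessing step that learns parameters from data.

```python
import statistics

# Hypothetical feature values, split by time: older rows train, newer rows test.
train_values = [10.0, 12.0, 11.0, 13.0, 14.0]
test_values = [30.0, 32.0]


def fit_scaler(values):
    """Learn standardization parameters (mean, sample stdev) from these values only."""
    return statistics.mean(values), statistics.stdev(values)


def transform(values, mean, stdev):
    """Apply previously learned parameters without refitting."""
    return [(v - mean) / stdev for v in values]


# Leaky: the scaler sees the test rows, so test information shapes training features.
leaky_mean, leaky_stdev = fit_scaler(train_values + test_values)

# Safer: fit on the training rows only, then reuse those parameters for the test rows.
mean, stdev = fit_scaler(train_values)
train_scaled = transform(train_values, mean, stdev)
test_scaled = transform(test_values, mean, stdev)
```

The same rule applies to imputation, encoding, and feature selection: anything learned from data should be learned from the training split and then applied, unchanged, to the test split.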