Ethical AI: Mitigating Bias and Ensuring Transparency

Introduction: The ethical AI imperative

The rapid adoption of artificial intelligence in enterprise settings has created immense opportunities to optimize operations, uncover new insights, and deliver differentiated products and services. Yet with these opportunities comes a responsibility to manage risk, protect users, and uphold trust. Ethics in AI is not an abstract ideal but a practical framework that guides design choices, deployment decisions, and ongoing governance. When ethics are embedded into development and operating practices, organizations reduce exposure to regulatory penalties, reputational harm, and unintended social consequences, while also accelerating user adoption and long-term value creation.

This article lays out a holistic view of how to mitigate bias, ensure transparency, and align AI initiatives with robust governance and regulatory expectations. It speaks to leaders in product, engineering, data science, compliance, and risk management who are responsible for the end-to-end lifecycle of AI systems. Along the way, we will discuss concrete techniques, real-world challenges, and a path to implement responsible AI across technology stacks, including practical references for teams focused on AI programming.

Bias in AI: Types, Causes, and Risks

Bias in AI emerges when models reflect systematic errors in data, design choices, or deployment contexts, leading to uneven outcomes across groups or individuals. Because automated decisions increasingly affect hiring, lending, healthcare, law enforcement, and customer experience, biased AI can translate into unfair treatment, regulatory scrutiny, and lost trust. Even well-intentioned models can produce biased results if the data reflect historical inequities or if the evaluation criteria overlook important contexts. Recognizing bias as a multifaceted problem, not merely a technical glitch, helps organizations design comprehensive responses that combine data governance, model testing, and human oversight.

Several core dimensions of bias warrant attention. Sampling bias occurs when the data used to train a model do not represent the target population. Representation bias arises when the features or labels do not capture the relevant attributes across demographic groups. Historical bias reflects past inequities embedded in the data, while measurement bias stems from flawed data collection or labeling processes. Algorithmic bias can emerge from modeling choices, such as objective functions that emphasize overall accuracy at the expense of minority performance, or from feedback loops that reinforce existing disparities. Finally, contextual bias emerges when a model’s performance varies with changes in user behavior, market conditions, or policy environments. Each form of bias requires targeted detection and mitigation strategies that operate across data, model, and governance layers.
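As an illustration of how the first of these forms might be detected, the sketch below compares each group's share of a training set with its share of the target population. The function name `representation_gaps`, the group labels, and the population shares are hypothetical and chosen only for this example; a real audit would draw the reference shares from a trusted source such as census or customer-base data.

```python
from collections import Counter


def representation_gaps(train_groups, population_shares):
    """Return (training share - population share) for each reference group.

    A positive gap means the group is over-represented in the training data,
    a negative gap means it is under-represented.
    """
    if not train_groups:
        raise ValueError("train_groups must not be empty")
    counts = Counter(train_groups)
    total = len(train_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }


# Hypothetical data: 10 training rows and assumed population shares.
train_groups = ["a"] * 8 + ["b"] * 2
population_shares = {"a": 0.5, "b": 0.5}

gaps = representation_gaps(train_groups, population_shares)
for group, gap in sorted(gaps.items()):
    print(f"group {group}: gap {gap:+.2f}")
```

In this toy data, group "a" is over-represented by about 30 percentage points and group "b" is under-represented by the same amount. What gap size should trigger action is a policy decision, not something the code can settle.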

Techniques to Mitigate Bias in AI

Mitigating bias is a continuous, multi-faceted effort. It starts with ensuring high-quality, representative data and is reinforced by governance structures, transparent evaluation, and diverse perspectives throughout the product lifecycle. Organizations should implement measurement frameworks that quantify fairness, accuracy, and robustness across groups and scenarios. They should also establish decision rights, escalation paths, and stakeholder involvement to balance competing objectives, such as fairness, accuracy, speed, and cost. Finally, ongoing monitoring after deployment helps catch drift and adapt to changing conditions, ensuring that responsible AI remains effective over time.

- Data quality and representation audits

- Algorithmic auditing and testing on diverse datasets

- Debiasing techniques: reweighting, resampling, fairness constraints

- Human-in-the-loop and consent mechanisms

- Model monitoring and post-deployment evaluation

- Documentation and governance across the AI lifecycle
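To make the reweighting item above concrete, here is a minimal sketch of one common scheme, often called reweighing: each training example receives the weight P(group) × P(label) / P(group, label), so that group membership and label become statistically independent in the weighted data. The function name `reweighing_weights` and the toy data are assumptions for illustration, and this is only one of several possible debiasing approaches.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights that decorrelate group membership from the label.

    weight(g, y) = P(g) * P(y) / P(g, y), estimated from observed counts.
    """
    n = len(groups)
    if n == 0 or n != len(labels):
        raise ValueError("groups and labels must be non-empty and equal length")
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    rows = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for y, w in rows if y == 1) / sum(w for _, w in rows)


# Hypothetical data: group "a" is mostly labeled 1, group "b" mostly 0.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
print(round(rate_a, 3), round(rate_b, 3))
```

After weighting, both groups show the same weighted positive rate in this toy data. The resulting weights could be passed to any learner that accepts per-sample weights; whether that improves fairness on held-out data still has to be measured.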

Transparency, Explainability, and Accountability

Transparency and explainability are essential for enabling stakeholders to understand how AI decisions are made, why certain inferences were drawn, and under what conditions a model may fail. Explainability supports responsible disclosure to customers, regulators, and internal decision-makers, while accountability ensures clear ownership of outcomes, model performance, and governance processes. The goal is not to reveal every line of code, but to provide meaningful explanations, auditable records, and governance artifacts that enable informed decision-making, robust risk management, and continuous improvement.

- Model cards that summarize purpose, data, performance, and limitations

- Data sheets for datasets detailing provenance, quality, and known biases

- Transparent logging and lineage to trace decisions from input to outcome
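One lightweight way to start on the first artifact is to keep the model card as structured data next to the model so it can be versioned and reviewed like code. The sketch below is an assumption about how that might look: the `ModelCard` class, its field names, and the example values are hypothetical and do not follow any particular published schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Minimal, hypothetical model card covering the items listed above."""

    name: str
    purpose: str
    training_data: str
    metrics: dict = field(default_factory=dict)
    limitations: list = field(default_factory=list)

    def to_json(self) -> str:
        # Sorted keys keep diffs stable when the card is stored in version control.
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Illustrative values only; none of these describe a real system.
card = ModelCard(
    name="loan-screening-v1",
    purpose="Rank applications for manual review; not for automated denial.",
    training_data="Internal applications dataset (see its data sheet).",
    metrics={"accuracy": 0.91, "selection_rate_gap": 0.04},
    limitations=["Not evaluated on applicants under 21."],
)

print(card.to_json())
```

Storing the card as JSON makes it straightforward to render for different audiences or to check in a release pipeline that required fields are filled in.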

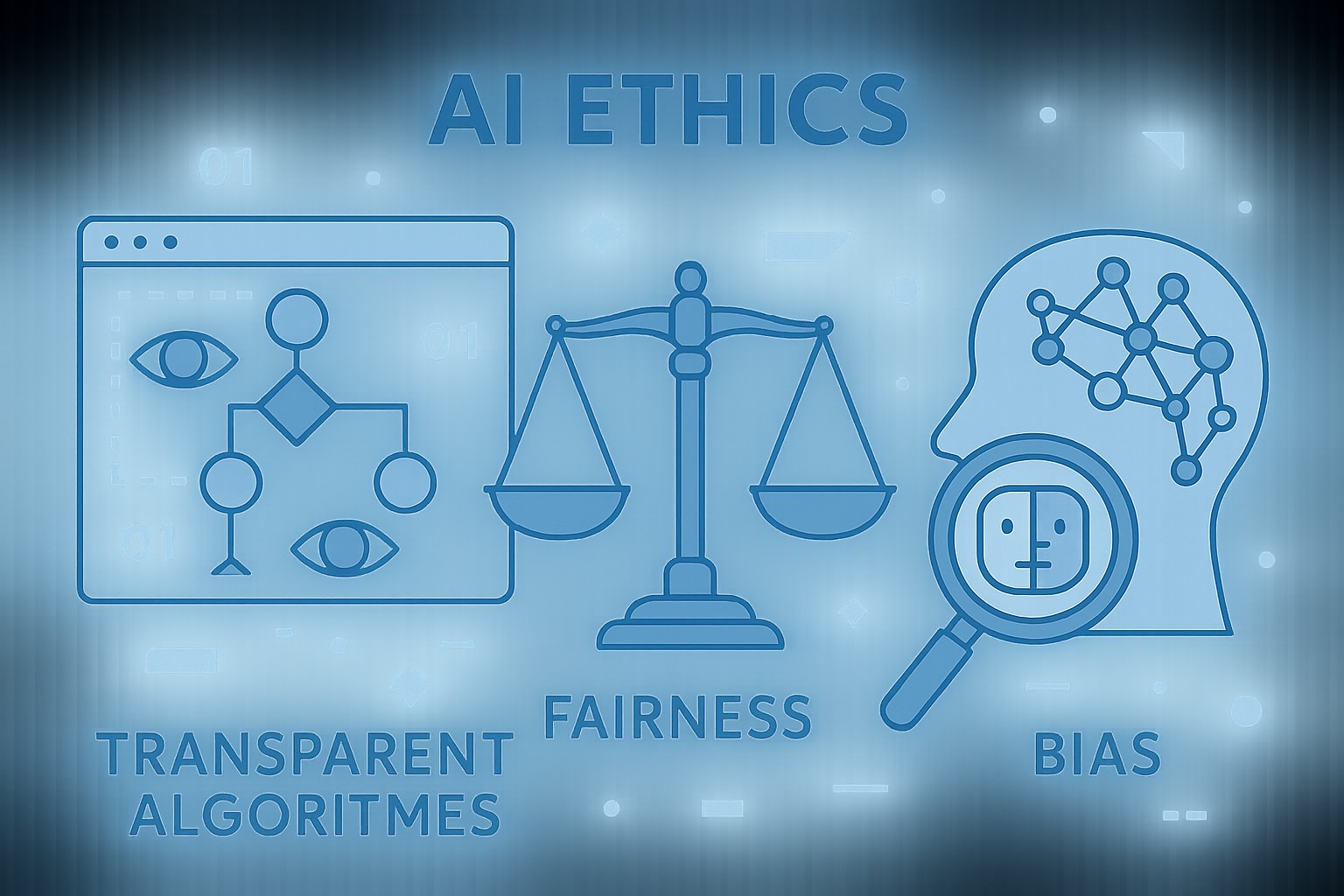

Governance, Regulation, and Frameworks

Effective governance combines policy, process, and technology to ensure AI systems stay aligned with organizational values and societal norms. It includes roles and responsibilities, risk assessment, and ongoing assurance activities such as third-party audits and independent reviews. Regulation and standards provide a common language for measuring risk, communicating expectations, and harmonizing practices across industries and jurisdictions. A disciplined governance posture helps accelerate responsible AI adoption while reducing the likelihood of costly missteps.

Organizations should track a core set of frameworks and standards to guide their efforts. They help translate abstract ethical principles into concrete requirements for data handling, model development, deployment, and post-deployment monitoring. Adopting recognized frameworks can also facilitate regulatory conversations and demonstrate a commitment to accountability and continuous improvement.

- EU AI Act

- NIST AI Risk Management Framework (AI RMF)

- OECD AI Principles

- IEEE Ethically Aligned Design (EAD) and related standards

Operationalizing Responsible AI in the Enterprise

Turning ethics from a checkbox into a lived practice requires embedding responsible AI into product strategy, software development lifecycle, vendor management, and organizational culture. This means defining and communicating clear policy requirements, integrating risk assessments into planning and design reviews, and establishing measurable targets for fairness, transparency, and governance. It also involves investing in people, processes, and tooling that support ongoing evaluation, incident response, and remediation. By aligning incentive structures with responsible outcomes, organizations can sustain momentum and build resilience against emerging risks in a dynamic AI landscape.

Practical deployment involves cross-functional collaboration among product managers, data scientists, software engineers, privacy and legal teams, and executive leadership. It also demands appropriate training, governance dashboards, and repeatable playbooks that guide incident response, documentation, and stakeholder communication. For teams focused on AI programming, this means not only delivering technically sound models but also ensuring that the models behave consistently with declared policies, user expectations, and regulatory constraints, even as data and contexts evolve over time.
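One way teams sometimes watch for evolving data is a drift statistic computed on model inputs or scores. The sketch below uses the population stability index (PSI) as an example; the function name, the bin edges, the `eps` floor for empty bins, and the sample values are all assumptions for illustration, and any alert threshold would need to be set by the team.

```python
import math
from bisect import bisect_right


def population_stability_index(expected, actual, bin_edges, eps=1e-6):
    """PSI between a baseline sample and a current sample.

    bin_edges must be sorted ascending; values are assigned to
    len(bin_edges) + 1 bins. Empty bins are floored at eps to avoid log(0).
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    def shares(values):
        counts = [0] * (len(bin_edges) + 1)
        for value in values:
            counts[bisect_right(bin_edges, value)] += 1
        return [max(count / len(values), eps) for count in counts]

    baseline = shares(expected)
    current = shares(actual)
    return sum((c - b) * math.log(c / b) for b, c in zip(baseline, current))


# Hypothetical model scores at training time and in production.
edges = [0.25, 0.5, 0.75]
training_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
production_scores = [0.6, 0.7, 0.8, 0.9, 0.8, 0.9, 0.7, 0.95]

psi_same = population_stability_index(training_scores, training_scores, edges)
psi_shifted = population_stability_index(training_scores, production_scores, edges)
print(psi_same, round(psi_shifted, 3))
```

Identical samples give a PSI of zero, and the value grows as the production distribution moves away from the baseline. A statistic like this only flags that something changed; deciding whether the change affects fairness or accuracy still requires the audits described above.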

FAQ

What is responsible AI and why does it matter?

Responsible AI refers to the disciplined combination of governance, technical safeguards, and organizational practices that ensure AI systems are fair, transparent, secure, and aligned with human values. It matters because AI decisions increasingly affect people’s lives, and missteps can cause harm, erode trust, invite regulatory scrutiny, and undermine business performance. A responsible AI approach helps organizations anticipate risk, demonstrate accountability, and sustain long-term value while respecting stakeholder interests.

How can organizations measure bias in AI systems?

Measuring bias involves both quantitative and qualitative assessments across data, model, and deployment contexts. Practically, teams use fairness metrics that compare outcomes across demographic groups, perform intersectional analyses to detect compound disparities, and execute counterfactual evaluations to test model behavior under alternative inputs. Regular bias audits, model performance monitoring, and independent reviews complement these measures, enabling early detection of drift and targeted remediation.
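As a minimal sketch of the group-comparison metrics mentioned above, the code below computes per-group selection rates, the demographic parity difference (largest rate minus smallest), and the disparate impact ratio (smallest rate divided by largest). Function names and data are hypothetical, and these are only two of many fairness metrics; which one is appropriate depends on the use case.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Share of positive predictions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        positives[group] += int(prediction == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(rates):
    """Largest selection rate minus smallest; 0 means equal rates."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Smallest selection rate divided by largest; 1 means equal rates."""
    highest = max(rates.values())
    # Convention assumed here: if no group is ever selected, report no disparity.
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical predictions for two groups of five people each.
groups = ["a"] * 5 + ["b"] * 5
predictions = [1, 1, 1, 1, 0, 1, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
print(rates)
print(demographic_parity_difference(rates), disparate_impact_ratio(rates))
```

Because group keys can be any hashable value, passing tuples such as `("female", "over_60")` as group labels gives a simple starting point for the intersectional analysis described above, provided each combined group has enough rows for the rate to be meaningful.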

What are practical steps to improve transparency?

Practical steps include documenting data provenance and labeling guidelines, publishing model cards and data sheets, maintaining transparent decision logs, and establishing user-facing explanations where appropriate. Organizations should also implement governance dashboards that track policy compliance, risk ratings, and remediation actions, and ensure that explainability methods are chosen to match the audience and context without compromising security or performance.
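A decision log of the kind described above can be sketched as a list of records in which each entry stores a hash of its own content plus the hash of the previous entry, so that later edits are detectable. Everything here is an assumption for illustration: the field names, the `make_log_entry` and `verify_chain` helpers, and the choice of SHA-256 hash chaining are not prescribed by any of the frameworks discussed in this article.

```python
import hashlib
import json


def _digest(payload):
    """SHA-256 over a canonical JSON encoding of the payload."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def make_log_entry(model_version, features, decision, prev_hash=""):
    """Build one log record linked to the previous record's hash."""
    payload = {
        "model_version": model_version,
        "features": features,
        "decision": decision,
        "prev_hash": prev_hash,
    }
    return {**payload, "entry_hash": _digest(payload)}


def verify_chain(entries):
    """Return True if every entry is unmodified and correctly linked."""
    prev_hash = ""
    for entry in entries:
        payload = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["prev_hash"] != prev_hash or _digest(payload) != entry["entry_hash"]:
            return False
        prev_hash = entry["entry_hash"]
    return True


# Hypothetical decisions from a hypothetical model version.
first = make_log_entry("v1", {"income": 50000}, "approve")
second = make_log_entry("v1", {"income": 20000}, "refer", prev_hash=first["entry_hash"])
log = [first, second]

print(verify_chain(log))
```

In practice the logged features may contain personal data, so a real log would need to follow the organization's privacy and retention policies, for example by storing a reference or a hash of the input instead of the raw values.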

Which regulatory frameworks should enterprises prioritize?

Enterprises should prioritize frameworks that align with their geography, sector, and risk profile. Core references often include the EU AI Act for governance and accountability, the NIST AI RMF for risk management practices, the OECD AI Principles for high-level normative alignment, and IEEE standards for ethical design. These frameworks help structure risk assessment, documentation, auditing, and governance processes while supporting cross-border collaboration and regulatory dialogue.

How do you sustain governance over time?

Sustaining governance requires embedded processes: continuous risk assessment, ongoing model monitoring, periodic audits, and a clear cadence for policy updates in response to new developments. Organizations should assign accountable owners for each AI system, maintain auditable records of decisions, and invest in training and tooling that support adaptive governance. A mature program treats ethics as a living practice, integrated with product roadmaps, performance metrics, and executive oversight to ensure resilience in a changing AI landscape.