Explainable AI: Making Machine Learning Transparent


What is Explainable AI?

Explainable AI (XAI) describes a set of methods and practices designed to make machine learning models more understandable to humans. It covers the data, the model architecture, the training process, and the results produced by the model. Broadly, XAI can be split into two families: interpretable models whose behavior can be followed directly by humans, and post-hoc explanation techniques that illuminate the decisions of complex, “black-box” systems after training. In a business context, explainability is not a luxury; it is a governance and risk-management capability that helps teams interpret why a model produced a given prediction and which factors carried the most weight. In practice, explainability is increasingly embedded in model development pipelines rather than added as an afterthought, to support safer deployment and continuous improvement.

The goal is to balance accuracy with clarity. Different stakeholders require different forms of explanation: data scientists may want insight into feature interactions, product managers may need a succinct rationale to communicate to customers, and regulators or auditors may demand auditable traces of decisions. XAI therefore emphasizes both faithfulness (explanations that reflect how the model actually arrives at its output) and usability (explanations that are meaningful and accessible to the target audience). In many industries, explainability is a practical requirement for accountability, enabling teams to diagnose model errors, assess bias, and justify actions taken by automated systems. A mature XAI program treats explanations as an ongoing product that is designed, tested, and refined like any other business capability, and ties them to governance, risk, and customer outcomes.

Key methods and techniques

Explainable AI techniques fall into two broad categories: intrinsic interpretability, where the model architecture itself is designed to be easy to understand, and post-hoc explanations, which are applied after a model is trained to reveal the drivers behind a given prediction. Model-agnostic methods treat the model as a black box and need only its inputs and outputs, so they work across model types, while model-specific approaches exploit structure in particular algorithms. In business applications, teams often combine several techniques to address different needs, from high-level feature importance to local explanations for individual decisions. The choice of technique should reflect the audience, the risk profile, and the data domain.

At a high level, practitioners should consider both global explanations that describe overall model behavior and local explanations that illuminate why a single prediction was made. Global explanations help with model selection and governance, while local explanations support case-level debugging and user-facing rationales. Below are representative technique families frequently adopted in industry:

- Feature attribution methods (for example SHAP and LIME) that quantify each feature’s contribution to a particular prediction.

- Surrogate models, such as decision trees or linear models trained to mimic a black-box model in a restricted domain, which offer simple, rule-based interpretations.

- Visualization and saliency techniques (Grad-CAM, attention maps) that highlight influential regions or inputs, especially in image, text, and sequence data.

- Counterfactual explanations that describe the smallest change needed to flip an outcome, helping users understand decision boundaries.

- Model-specific explanations that leverage internal representations, such as rule extraction from tree ensembles or attention weights in transformers.
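To make the feature attribution family concrete, here is a minimal sketch of exact Shapley values, the game-theoretic quantity that SHAP approximates. It is not the SHAP library's API. The function `shapley_values`, the scoring function `toy_score`, and the all-zero reference point are hypothetical names and values chosen for illustration, and "removing" a feature is assumed to mean replacing it with a baseline value.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley attributions for one prediction.

    Features outside a coalition are replaced by their baseline value.
    The cost grows as 2**n, so this only suits a handful of features.
    """
    n = len(instance)
    features = range(n)

    def value(coalition):
        # Coalition features come from the instance, the rest from the baseline.
        mixed = [instance[i] if i in coalition else baseline[i] for i in features]
        return predict(mixed)

    attributions = []
    for i in features:
        others = [j for j in features if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                total += weight * (value(coalition | {i}) - value(coalition))
        attributions.append(total)
    return attributions


# Hypothetical scoring function standing in for a black-box model.
def toy_score(x):
    income, debt, years_employed = x
    return 0.5 * income - 0.8 * debt + 0.2 * years_employed + 0.1 * income * years_employed


applicant = [4.0, 2.0, 3.0]
reference = [0.0, 0.0, 0.0]  # assumed baseline, not taken from any real dataset
phi = shapley_values(toy_score, applicant, reference)
print(dict(zip(["income", "debt", "years_employed"], phi)))
```

The attributions sum to the difference between the applicant's score and the baseline score, and the interaction term is split evenly between the two features involved. Real tooling relies on sampling or model-specific shortcuts because exact enumeration does not scale.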

Practical considerations for organizations

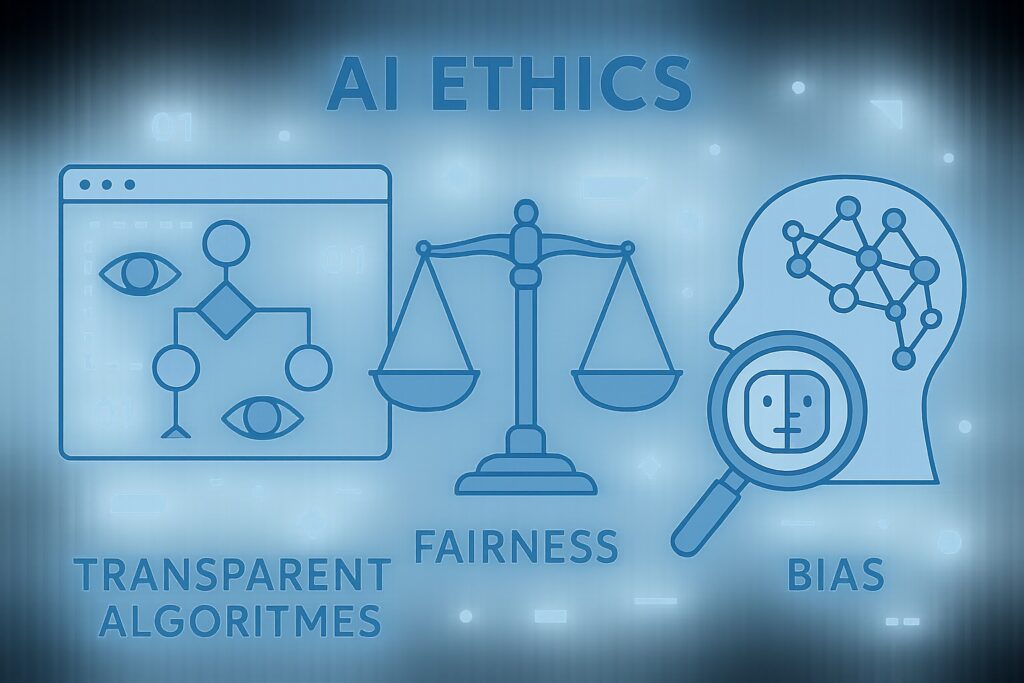

For organizations, explainability is as much a governance practice as a technical capability. Successful XAI programs start with clear objectives tied to business value—risk controls, regulatory compliance, customer trust, or operational efficiency. They require cross-functional governance spanning data science, product, legal, and security to define what explanations are required, who will consume them, and at what cadence explanations must be refreshed. Data lineage, version control for models and explanations, and traceability of training data are essential to demonstrate accountability and to support audits in regulated sectors such as finance or healthcare.

Implementing XAI in production entails choosing the right balance between fidelity and latency, and designing explanations that meet user needs without exposing sensitive information. Early pilots should involve representative users and tasks to validate explainability goals, measure whether explanations improve decision quality, and assess potential biases. Teams should be aware that overly detailed explanations can overwhelm users or leak proprietary strategies; conversely, overly terse explanations may erode trust. A pragmatic approach combines automated explainability with human-in-the-loop review, staged rollouts, and continuous monitoring of explanation quality and model performance. In parallel, organizations should invest in training and change management to ensure product teams, operators, and regulators understand how explanations are produced and used in decision-making processes.

Evaluating explainability and trust

Evaluation of explainability combines quantitative metrics and qualitative feedback. Technical metrics may assess faithfulness (how well the explanation reflects the model’s actual reasoning), completeness, and stability across data perturbations. Human-centered evaluation involves tasks where users judge the usefulness of explanations for decision-making, potential error detection, or compliance. It is important to tailor evaluations to the product context, audience, and risk exposure, rather than relying on a single universal measure. Transparent documentation of evaluation results also supports governance and external audits. Effective evaluation is iterative: it informs model adjustments, interface design, and policy updates, and it demonstrates a measurable impact on business outcomes such as decision quality or user trust.
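As one possible way to quantify stability across data perturbations, the sketch below compares the attribution of an instance with the attributions of slightly noisy copies and reports the mean cosine similarity. The occlusion-based attribution, the noise level, the number of trials, and the model `linear_model` are all assumptions made for illustration; a real evaluation would use the team's own explainer and a perturbation scheme suited to the data.

```python
import math
import random


def occlusion_attribution(predict, instance, baseline):
    """Score each feature by the output change when it is set to its baseline."""
    full = predict(instance)
    scores = []
    for i in range(len(instance)):
        occluded = list(instance)
        occluded[i] = baseline[i]
        scores.append(full - predict(occluded))
    return scores


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def explanation_stability(predict, instance, baseline, noise=0.05, trials=50, seed=0):
    """Mean cosine similarity between the original attribution and the
    attributions of copies perturbed with Gaussian noise."""
    rng = random.Random(seed)
    reference = occlusion_attribution(predict, instance, baseline)
    similarities = []
    for _ in range(trials):
        noisy = [v + rng.gauss(0.0, noise) for v in instance]
        perturbed = occlusion_attribution(predict, noisy, baseline)
        similarities.append(cosine_similarity(reference, perturbed))
    return sum(similarities) / len(similarities)


# Hypothetical model used only to exercise the metric.
def linear_model(x):
    return 2.0 * x[0] - 1.0 * x[1] + 0.5 * x[2]


stability = explanation_stability(linear_model, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0])
print(f"stability: {stability:.4f}")
```

A score close to 1 suggests that small input changes leave the explanation largely intact. What counts as acceptable is a product decision that depends on the audience and risk exposure, not a universal threshold.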

To structure evaluation, teams typically follow a staged process that aligns with the development lifecycle, including planning, measurement, user testing, and iteration. The following steps provide a practical workflow for ongoing assessment:

- Define explainability objectives in collaboration with stakeholders and align them to concrete tasks and user needs.

- Select a set of metrics that capture faithfulness, usefulness, and reliability of explanations, not just accuracy.

- Prototype explanations early and test with domain experts to identify gaps and biases.

- Conduct user studies or roundtables to gather qualitative feedback on clarity and usefulness.

- Monitor explanation stability and model drift as data evolves.

- Iterate the explanation methods or presentation based on findings, and document changes for audits.

Integrating XAI into governance and the product lifecycle

Integrating explainability into the product lifecycle requires architectural and organizational changes. This often means adding an explainability layer that captures feature influence, decision context, and rationale alongside the model’s predictions. Clear documentation, versioning, and auditing of both models and explanations enable traceability from data sources to end-user outcomes. Embedding explainability into design reviews, risk assessments, and compliance checks helps ensure that explanations stay aligned with policy and user expectations as the product evolves. A well-designed architecture also supports reusability: explanations for common features can be standardized and reused across products to reduce duplication and ensure consistency.
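One way to picture such an explainability layer is a versioned record stored next to each prediction. The sketch below is an assumption about what that record could contain. The class `ExplanationRecord`, its field names, and the sample values are hypothetical and not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ExplanationRecord:
    """Hypothetical audit record pairing a prediction with its explanation."""
    model_version: str
    explainer_version: str
    prediction: float
    feature_influence: dict   # feature name -> signed contribution
    decision_context: dict    # e.g. request id, data snapshot, policy in force
    rationale: str            # short text shown to the end user
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def top_factors(self, k=3):
        """Return the k features with the largest absolute influence."""
        ranked = sorted(self.feature_influence.items(),
                        key=lambda item: abs(item[1]), reverse=True)
        return ranked[:k]

    def to_json(self):
        """Serialize with sorted keys so stored records are easy to diff."""
        return json.dumps(asdict(self), sort_keys=True)


# Illustrative values only.
record = ExplanationRecord(
    model_version="credit-risk-1.4.0",
    explainer_version="attribution-0.3.1",
    prediction=0.27,
    feature_influence={"income": 0.42, "debt_ratio": -0.31, "tenure": 0.12},
    decision_context={"request_id": "example-001", "data_snapshot": "2024-01"},
    rationale="Debt ratio lowered the score; income raised it.",
)
print(record.to_json())
```

Recording the model version and the explainer version separately means an audit can tell whether an explanation changed because the model changed or because the explanation method did.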

Practical steps include establishing company-wide standards for explainability across products, making explanations accessible to non-technical audiences, and ensuring that model updates trigger regression tests for explainability quality. Teams should invest in training for data scientists and product teams to interpret and communicate explanations responsibly, and they should implement processes for auditing explanations over time. By pairing technical capabilities with governance and user-centric design, organizations can build durable trust and improve decision-making outcomes.

- Define roles and responsibilities for model explainability, including a dedicated governance owner and cross-disciplinary review boards.

- Standardize formats and interfaces for explanations to enable reusability and interoperability across products.

- Document data lineage, feature definitions, and explanation versioning to support audits and compliance.

- Incorporate user-friendly explanations, including multilingual or accessible formats, to broaden understanding.

- Integrate explainability metrics into product KPIs and continuous integration pipelines.

- Plan for ongoing monitoring, incident response, and remediation when explanations reveal issues.
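A regression test for explainability quality in a continuous integration pipeline could take many forms. The sketch below shows one simple option: it compares the most influential features of the deployed model with those of a candidate model and fails the check when they diverge too much. The function names, the choice of Jaccard overlap, the threshold, and the attribution values are assumptions. The inputs are assumed to be aggregate attributions (for example, mean contribution per feature on a fixed reference dataset).

```python
def top_k_features(attributions, k):
    """Names of the k features with the largest absolute attribution."""
    ranked = sorted(attributions, key=lambda name: abs(attributions[name]), reverse=True)
    return set(ranked[:k])


def top_k_overlap(previous, candidate, k=3):
    """Jaccard overlap between the top-k feature sets of two models."""
    a = top_k_features(previous, k)
    b = top_k_features(candidate, k)
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def check_explanation_regression(previous, candidate, k=3, min_overlap=0.6):
    """Return (passed, overlap); a CI job would fail when passed is False."""
    overlap = top_k_overlap(previous, candidate, k)
    return overlap >= min_overlap, overlap


# Illustrative aggregate attributions for the deployed and the candidate model.
deployed = {"income": 0.42, "debt_ratio": -0.31, "tenure": 0.12, "zip_code": 0.01}
candidate = {"income": 0.40, "debt_ratio": -0.28, "tenure": 0.02, "zip_code": 0.35}

passed, overlap = check_explanation_regression(deployed, candidate)
print(f"passed={passed}, overlap={overlap}")
```

In this example the candidate model suddenly leans on `zip_code`, so the check fails and prompts a human review before release. A failed check is a signal to investigate, not proof that the new model is wrong.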

FAQ

What is Explainable AI and why is it important?

Explainable AI refers to approaches that make the behavior and decisions of machine learning models understandable to humans. It is important because it builds trust, supports accountability, helps uncover bias or errors, and facilitates governance and regulatory compliance. By providing clear rationales for predictions and outcomes, organizations can explain why decisions were made, identify where to improve models, and communicate effectively with customers, regulators, and product teams. The practical value lies in aligning advanced analytics with business goals and risk controls, not merely achieving higher accuracy.

What are common XAI methods?

Common XAI methods include feature attribution techniques like SHAP and LIME that quantify each input’s contribution to a prediction, surrogate models that imitate complex models with simpler interpretable rules, and visualization or saliency methods that highlight influential inputs. Counterfactual explanations describe the minimal changes needed to alter an outcome, while model-specific explanations exploit internal representations such as attention weights or extracted rules. The right mix depends on the data type, the audience, and the regulatory or ethical requirements facing the decision scenario.
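As an illustration of counterfactual explanations, the following sketch searches a small grid of single-feature changes and returns the smallest one that flips a decision. The decision rule `approve`, the candidate grid, and the assumption that features share a comparable scale are all hypothetical. Practical counterfactual methods also handle multi-feature changes and plausibility constraints.

```python
def nearest_counterfactual(predict, instance, candidates):
    """Return (distance, feature_index, new_value) for the smallest
    single-feature change that alters the prediction, or None.

    `candidates` maps a feature index to the alternative values to try.
    Distances are only comparable if features are on a similar scale.
    """
    original = predict(instance)
    best = None
    for index, values in candidates.items():
        for value in values:
            changed = list(instance)
            changed[index] = value
            if predict(changed) != original:
                distance = abs(value - instance[index])
                if best is None or distance < best[0]:
                    best = (distance, index, value)
    return best


# Hypothetical approval rule standing in for a trained classifier.
def approve(x):
    income, debt = x
    return income - 2.0 * debt >= 3.0


applicant = [4.0, 1.0]          # currently rejected: 4.0 - 2.0 = 2.0 < 3.0
grid = {
    0: [4.5, 5.0, 5.5, 6.0],    # higher income levels to try
    1: [0.75, 0.5, 0.25, 0.0],  # lower debt levels to try
}
result = nearest_counterfactual(approve, applicant, grid)
print(result)
```

Here the search reports that lowering debt from 1.0 to 0.5 is the smallest change in the grid that turns the rejection into an approval, which is the kind of actionable statement a customer-facing explanation might use.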

How do you determine which explanations are appropriate for a given audience?

Determining appropriate explanations starts with stakeholder analysis: identify who needs explanations, for what decisions, and at what level of detail. Regulatory demands, risk exposure, and user needs should shape the format and granularity of explanations. For customers, concise, actionable explanations with non-technical language are often preferred, while internal auditors may require traceability and evidence of faithfulness. The design process should include user testing with representative recipients to ensure that explanations are meaningful, unbiased, and do not reveal sensitive information.

How should an organization measure the effectiveness of its explanations?

Measuring the effectiveness of explanations combines quantitative and qualitative methods. Metrics may assess faithfulness, coverage of important factors, stability across data shifts, and the degree to which explanations improve decision accuracy or reduce error rates. Qualitative methods include user testing, interviews, and scenario-based evaluations to gauge usefulness, trust, and comprehension. Organizations should document results, tie findings to governance actions, and monitor changes over time as models and data evolve.

What are typical limitations or pitfalls of XAI?

Typical limitations include a trade-off between explainability and predictive performance, the risk of explanations being misleading or perceived as more definitive than they are, and the potential leakage of sensitive or proprietary information through overly detailed rationales. Other pitfalls involve misinterpretation by non-experts, overreliance on explanations that do not reflect true model reasoning (unfaithful explanations), and challenges in maintaining explanations as models drift. A prudent approach emphasizes faithfulness, user-centered design, continuous validation, and governance controls to mitigate these risks.