Hadoop vs Spark: Big Data Frameworks Compared

Overview

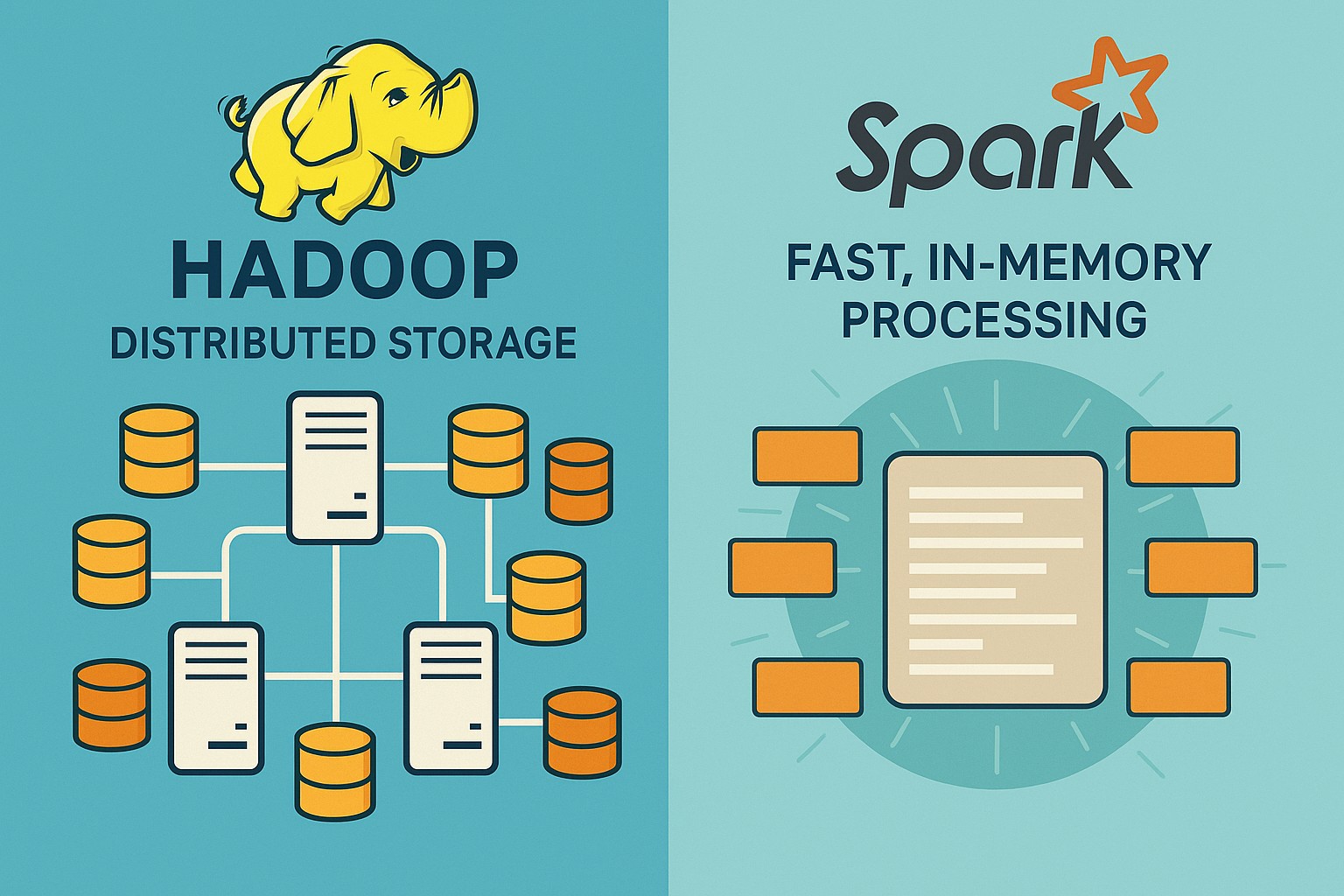

Hadoop and Spark represent two foundational approaches to big data processing, each born from distinct design goals and operational realities. Hadoop emerged around the Hadoop Distributed File System (HDFS) and the MapReduce paradigm, delivering reliable, scalable batch processing on commodity clusters and enabling organizations to store and analyze massive datasets with fault-tolerance guarantees. Spark arrived later with an emphasis on speed, ease of use, and a unified analytics surface, including batch processing, streaming, and machine learning, all within a single runtime that favors in-memory computation and directed acyclic graph (DAG) scheduling. For enterprises—especially in healthcare where data volumes are large, data access patterns vary, and regulatory demands are stringent—the practical choice often hinges on how these frameworks complement each other rather than on a single silver bullet.

This article provides a practical, business-technical comparison that helps teams align technology choices with organizational goals, data governance requirements, and real-world workloads. You’ll see how the core differences in storage, computation, and ecosystem components translate into concrete trade-offs for batch ETL, iterative analytics, real-time alerts, and compliant data handling in healthcare contexts. The core message is that many successful architectures blend both platforms, using Hadoop as a durable storage and batch backbone while deploying Spark for fast analytics and interactive exploration.

Architecture and Execution Model

Hadoop’s architecture rests on three mature pillars: HDFS for distributed, reliable storage, YARN for resource management, and a MapReduce execution model that organizes work into map and reduce stages and writes intermediate results to disk between them. The emphasis is on durability, fault tolerance through block replication, and predictable throughput across vast data volumes. Spark reimagines execution with a driver program and a set of executors that run under a cluster manager (Spark standalone, YARN, or Kubernetes; older releases also supported Mesos). Its strength lies in building a DAG of transformations, caching intermediate data in memory when possible, and recomputing lost partitions from lineage if failures occur. This results in lower latency for many workloads, especially iterative or interactive tasks, but it also demands careful memory management to avoid garbage collection overhead and spills to disk.
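To make the staged MapReduce model concrete, here is a minimal single-process sketch in plain Python. It is not Hadoop code: the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `run_job`) and the sample records are illustrative assumptions. In a real cluster the map output is written to local disk and moved across the network during the shuffle, which is where the disk I/O between steps comes from.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    """Emit (word, 1) pairs for one input record."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    """Group values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine all values collected for one key."""
    return key, sum(values)


def run_job(lines):
    """Run map, shuffle, and reduce in sequence over in-memory records."""
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


# Hypothetical input records
records = ["spark reads hdfs", "hadoop writes hdfs", "spark caches data"]
counts = run_job(records)
print(counts["hdfs"], counts["spark"], counts["hadoop"])  # 2 2 1
```

Each stage consumes the complete output of the previous one, which is why chaining many such jobs multiplies the number of disk round trips.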

In streaming contexts, Spark Structured Streaming provides a unified approach to continuous data flows by processing data in micro-batches, enabling near-real-time analytics while maintaining familiar APIs. Hadoop-based pipelines historically relied on separate streaming components or external systems (for example, Kafka or Flume) feeding into HDFS and then batch-processing results downstream. The ecosystem has evolved to allow tighter integration—Spark can serve as the front-end analytics engine over data stored in HDFS or cloud storage, while Hadoop components provide durable storage and governance that remain valuable for long-term retention and batch workloads. The architectural takeaway is that Spark shines in speed and interactivity, while Hadoop emphasizes durable storage, fault-tolerant batch processing, and large-scale throughput, often via a well-defined data lifecycle.
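The micro-batch idea can be sketched without any streaming framework. The toy below groups events into fixed-length windows and computes one aggregate per window. It is a simplification under stated assumptions: real micro-batches are formed by trigger time as data arrives, whereas this sketch buckets by the event's own timestamp, and the `micro_batches` helper and the heart-rate readings are hypothetical.

```python
from collections import defaultdict


def micro_batches(events, interval_s):
    """Group (timestamp_s, value) events into fixed-length batches.

    Returns a list of (batch_start, values) sorted by batch start time.
    """
    batches = defaultdict(list)
    for ts, value in events:
        batch_start = (ts // interval_s) * interval_s
        batches[batch_start].append(value)
    return [(start, batches[start]) for start in sorted(batches)]


# Hypothetical readings: (seconds since start, beats per minute)
readings = [(0, 72), (3, 75), (9, 140), (11, 138), (14, 90), (21, 71)]

for start, values in micro_batches(readings, interval_s=10):
    average = sum(values) / len(values)
    print(start, len(values), round(average, 1))
# 0 3 95.7
# 10 2 114.0
# 20 1 71.0
```

The batch interval sets a floor on latency: a shorter interval gives fresher results at the cost of more scheduling overhead per record.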

Data Processing Styles and Workloads

Batch workloads in the Hadoop ecosystem have historically been the backbone of enterprise data engineering. They excel at long-running ETL pipelines, periodic reporting, archival processing, and the consolidation of data from diverse sources into a canonical data store. These workloads tolerate higher latency per job in exchange for predictable, scale-out throughput and robust fault tolerance. MapReduce-style jobs, though evolving with newer engines, still underpin many organizations’ data pipelines because of their mature operational tooling and strong guarantees for correctness and auditable lineage.

Spark shifts the performance frontier for many analytics patterns by keeping data resident in memory, reusing intermediate results, and offering high-level libraries for SQL, machine learning, graph processing, and streaming. This enables significantly faster model training cycles, faster iterative experiments, and more responsive dashboards or exploratory analyses. In healthcare analytics, this translates to accelerated cohort discovery, rapid prototyping of predictive models, and real-time alerting for patient monitoring scenarios, all while leveraging the same underlying data lake or warehouse. The trade-off is that Spark requires adequate memory resources and careful tuning to avoid memory pressure, whereas Hadoop emphasizes durability and predictability, even at larger disk I/O cost.

Ecosystem and Components

Hadoop’s ecosystem provides a mature, end-to-end stack designed for scalable data storage, processing, and governance. The core components most enterprises rely on include the following:

- HDFS — the distributed storage substrate designed for reliability and large-scale data retention.

- MapReduce — the original batch processing engine, which recovers from failures by re-executing failed tasks and scales by adding nodes.

- YARN — the resource manager that allocates cluster CPU and memory across workloads.

- Hive — a data warehouse layer that enables SQL-like queries over large datasets stored in HDFS.

- HBase — a NoSQL store for low-latency random reads and writes on top of HDFS.

- Pig — a scripting abstraction for building data analysis workflows on Hadoop.

- Sqoop — a bridge for efficiently moving data between relational databases and Hadoop’s storage.

- Oozie — a workflow scheduler that coordinates complex ETL pipelines and job sequences.

In modern deployments, Spark has established itself as a pervasive analytics engine that often operates atop the same storage and data catalogs used by Hadoop components. Spark SQL, MLlib, GraphX, and Structured Streaming integrate with existing Hive metastore schemas and use Parquet or ORC formats for efficient columnar access. This convergence has made it common for organizations to deploy a shared data lake where Spark handles analytics and Hadoop handles durable storage, governance, and batch processing. In healthcare, this alignment supports secure, auditable data access across a broad set of analytical use cases, from population health analytics to clinical research pipelines.

Performance, Scalability, and Fault Tolerance

The performance profile of Hadoop and Spark reflects their architectural choices. MapReduce’s disk-first design minimizes the risk of data loss and simplifies fault recovery, but the approach incurs substantial I/O overhead and multi-stage shuffle costs that can become the bottleneck for iterative workloads. Spark’s in-memory caching dramatically reduces the cost of repeated computations, delivering substantial speedups for machine learning, graph analytics, and interactive queries. However, maintaining large in-memory datasets requires carefully provisioned cluster memory and thoughtful configuration to avoid out-of-memory errors and excessive garbage collection.
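Why caching helps iterative workloads can be shown with a toy counter. In the sketch below, `Dataset.load` stands in for an expensive disk or network read, and `Dataset.cache` keeps the result in memory after the first read. The class and its names are assumptions for illustration, not part of any Spark or Hadoop API.

```python
class Dataset:
    """Toy dataset whose load() stands in for an expensive read from storage."""

    def __init__(self, rows):
        self._rows = rows
        self._cached = None
        self.loads = 0  # number of times the expensive read actually ran

    def load(self):
        """Re-read the data from 'storage' on every call."""
        self.loads += 1
        return list(self._rows)

    def cache(self):
        """Read once, then serve the in-memory copy."""
        if self._cached is None:
            self._cached = self.load()
        return self._cached


def iterate(read, passes):
    """Scan the full dataset `passes` times, as an iterative algorithm would."""
    total = 0
    for _ in range(passes):
        total = sum(read())
    return total


uncached = Dataset(range(1000))
cached = Dataset(range(1000))
iterate(uncached.load, passes=10)
iterate(cached.cache, passes=10)
print(uncached.loads, cached.loads)  # 10 1
```

The saving grows with the number of passes, which is why the benefit is largest for iterative algorithms and smallest for single-pass ETL.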

Both platforms scale horizontally and benefit from resilient distributed storage and robust resource management. When running on YARN alongside HDFS, Spark executors can be scheduled on the nodes that hold the data, which reduces network traffic and lets workloads share compute and storage resources. In real-world deployments, data locality, network bandwidth, and data skew play a decisive role in performance. For healthcare workloads, where latency for decision support matters and data refresh cycles can be tight, Spark often delivers the responsiveness that analysts expect, while Hadoop provides the stable, auditable backbone for archival and governance requirements.
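Data skew is easy to measure in a small model. The sketch below hash-partitions keys, reports the ratio of the largest partition to the mean, and shows key salting as one common mitigation. The helper names, the CRC32-based partitioner, and the clinic workload are all illustrative assumptions; real engines use their own partitioners.

```python
import zlib
from collections import Counter


def partition_sizes(keys, num_partitions):
    """Count records per partition under hash partitioning on the key."""
    sizes = Counter()
    for key in keys:
        sizes[zlib.crc32(key.encode()) % num_partitions] += 1
    return [sizes.get(p, 0) for p in range(num_partitions)]


def skew_ratio(sizes):
    """Largest partition divided by the mean size (1.0 means perfectly even)."""
    mean = sum(sizes) / len(sizes)
    return max(sizes) / mean


def salted(keys, salt_buckets):
    """Append a rotating salt so a hot key is spread over several partitions."""
    return [f"{key}#{i % salt_buckets}" for i, key in enumerate(keys)]


# Hypothetical workload: one facility produces 90% of the records.
keys = ["clinic-A"] * 900 + [f"clinic-{i}" for i in range(100)]
before = partition_sizes(keys, num_partitions=8)
after = partition_sizes(salted(keys, salt_buckets=8), num_partitions=8)
print(round(skew_ratio(before), 2), round(skew_ratio(after), 2))
```

Salting changes the grouping key, so an aggregation over salted keys needs a second, much smaller step that strips the salt and merges the partial results.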

Deployment and Operational Considerations

Operational patterns differ depending on whether you favor on-premises infrastructure or cloud-based managed services. Traditional on-prem deployments emphasize control, bare-metal or virtualized clusters, and long-term cost planning, while cloud deployments lean on managed services that automate provisioning, patching, and scaling. In either case, integration with identity management, encryption, and network security is essential to meet regulatory obligations such as HIPAA. Proven deployment practices include establishing secure data ingest paths, setting up role-based access to data, and implementing repeatable pipeline templates to minimize drift between environments.

Monitoring and maintenance require consistent logging, standardized metadata practices, and automated testing of data pipelines. Spark and Hadoop jobs expose different failure modes—Spark may fail due to memory bottlenecks or misconfigured executors, while MapReduce pipelines may stall due to resource constraints or long-tail data skew. Operational excellence comes from clear SLAs for data freshness, robust retry policies, and observability across the data lifecycle—from ingestion to transformation to consumption. In healthcare contexts, this translates to auditable pipelines, well-defined data contracts, and rapid incident response procedures that protect patient safety and data integrity.
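A retry policy for transient pipeline failures can be sketched in a few lines. The example below retries a task with exponential backoff and re-raises once the attempt budget is spent. `run_with_retries`, `flaky_ingest`, and the delay values are hypothetical, and the `sleep` parameter is injectable only so the example runs instantly.

```python
import time


def run_with_retries(task, max_attempts=3, base_delay_s=1.0, sleep=time.sleep):
    """Call task(); on failure wait base_delay_s * 2**(attempt - 1) and retry.

    Re-raises the last exception once max_attempts is exhausted.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise
            sleep(base_delay_s * 2 ** (attempt - 1))


attempts = {"n": 0}


def flaky_ingest():
    """Hypothetical ingest step that fails twice before succeeding."""
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise RuntimeError("transient failure")
    return "ingested"


delays = []  # record requested delays instead of actually sleeping
result = run_with_retries(flaky_ingest, max_attempts=5, sleep=delays.append)
print(result, attempts["n"], delays)  # ingested 3 [1.0, 2.0]
```

Retries are only safe when the task is idempotent, so a step that appends to a table should be made restartable before it is wrapped this way.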

Security, Governance, and Healthcare Considerations

Healthcare data is especially sensitive and subject to strict regulatory controls. Any Hadoop or Spark deployment that touches PHI must enforce strong authentication, authorization, and encryption. Kerberos-based authentication remains common in on-premises configurations, while cloud deployments rely on integrated IAM controls, managed encryption keys, and network security groups. Tools such as Apache Ranger (or Apache Sentry in older deployments) provide centralized, policy-driven access control across data stores and processing engines, enabling fine-grained permissions and audit trails for data access by clinicians, researchers, and analysts.

Beyond access controls, governance and data quality become central to compliance and operational trust. Organizations should implement robust data catalogs, lineage tracking, and schema-versioning to ensure reproducibility and accountability of analytics. Retention policies, audit logging, and tamper-evident records support regulatory requirements and incident investigations. In practice, successful healthcare implementations balance performance with strict governance, ensuring that analytics teams can move quickly while preserving patient privacy and meeting regulatory expectations.
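One way to make audit records tamper-evident is a hash chain, where every entry stores the hash of the previous one. The sketch below is a minimal in-memory illustration under stated assumptions: the entry layout, the `append_entry` and `verify` helpers, and the sample events are hypothetical, and a production system would also need durable storage and protection of the latest hash.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder 'previous hash' for the first entry


def _digest(event, prev_hash):
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def append_entry(log, event):
    """Append an event, chaining it to the hash of the last entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify(log):
    """Return True only if every entry still matches its recorded hash chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if _digest(entry["event"], prev_hash) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"user": "analyst_1", "action": "read", "table": "encounters"})
append_entry(audit_log, {"user": "etl_job", "action": "write", "table": "encounters"})
print(verify(audit_log))  # True

audit_log[0]["event"]["user"] = "someone_else"  # simulate tampering
print(verify(audit_log))  # False
```

Because each hash depends on everything before it, altering or deleting an earlier record invalidates every later entry unless the whole chain is rewritten.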

Migration Paths and Coexistence Strategies

A practical migration strategy often begins with running Spark on top of existing Hadoop storage and metadata services, allowing teams to port transformation logic gradually while preserving historical data pipelines. Rewriting legacy MapReduce jobs into Spark DataFrame or RDD-based workloads can yield meaningful performance gains, especially for ML-enabled analytics and iterative data exploration. Cloud-based options such as Amazon EMR, Google Cloud Dataproc, or Azure HDInsight offer managed environments that simplify provisioning, versioning, and policy enforcement while providing governance features essential for regulated industries.

Coexistence patterns favor preserving batch ETL in the Hadoop stack while adopting Spark for iterative analytics and streaming transformations. Data can be ingested into HDFS or cloud storage, cataloged in Hive metastore, and consumed by Spark SQL, with columnar formats like Parquet or ORC to optimize read performance. A staged migration approach—beginning with non-critical workflows, validating results, and implementing robust rollback plans—helps minimize risk while aligning teams around common data contracts, governance policies, and cost controls relevant to healthcare data flows.
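Validating a ported job usually means comparing its output with the legacy job's output on the same input. The sketch below compares row counts and an order-independent digest, since distributed engines do not guarantee row order. The `fingerprint` and `outputs_match` helpers and the sample rows are assumptions for illustration, and relying on `repr` assumes both pipelines emit identical Python values (floating-point formatting differences would need normalizing first).

```python
import hashlib


def fingerprint(rows):
    """Return (row_count, order-independent digest) for an iterable of tuples.

    Per-row hashes are summed modulo 2**256, so row order does not matter
    and duplicate rows still change the result.
    """
    count = 0
    combined = 0
    for row in rows:
        digest = hashlib.sha256(repr(row).encode()).digest()
        combined = (combined + int.from_bytes(digest, "big")) % (1 << 256)
        count += 1
    return count, combined


def outputs_match(legacy_rows, candidate_rows):
    """True when both outputs contain the same multiset of rows."""
    return fingerprint(legacy_rows) == fingerprint(candidate_rows)


# Hypothetical outputs: (patient_id, visit_count)
legacy = [("p1", 3), ("p2", 1), ("p3", 7)]
ported = [("p3", 7), ("p1", 3), ("p2", 1)]   # same rows, different order
drifted = [("p3", 7), ("p1", 4), ("p2", 1)]  # one value differs

print(outputs_match(legacy, ported))   # True
print(outputs_match(legacy, drifted))  # False
```

A digest mismatch only says that the outputs differ; locating the offending rows still requires a keyed comparison of the two result sets.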

Practical Evaluation: How to Choose for Your Organization

Determining the right mix of Hadoop and Spark depends on your organization’s latency requirements, data volumes, analytics velocity, and regulatory constraints. Start with a clear hypothesis about where real-time insight is valuable versus where batch efficiency is paramount, then align technology choices with your cloud strategy, skill sets, and data governance framework. A pragmatic evaluation plan includes pilot workloads that reflect representative pipelines, performance benchmarks that measure end-to-end latency and throughput, and a cost model that contrasts on-premise versus managed service options. In healthcare settings, security, privacy, and auditable data provenance must be woven into every phase of the evaluation.

- Latency and real-time needs: low-latency streaming analytics generally favors Spark, while nightly batch processing may remain well-suited to Hadoop.

- Data size and throughput: both platforms scale, but storage format, data locality, and pipeline design influence overall performance.

- Operational risk and compliance: implement strong access controls, encryption, governance, and auditable logs as non-negotiable requirements.

Finally, weigh vendor support, community activity, and roadmap fit against your organization’s strategic goals to ensure long-term viability and predictable upgrade paths for your data platform.

FAQ

Can Spark completely replace MapReduce in an enterprise?

In many cases Spark can replace a large portion of MapReduce workloads by providing faster in-memory processing, unified APIs, and easier maintenance. However, some legacy pipelines, compliance-driven batch jobs, or environments with highly specialized MapReduce configurations may continue to rely on MapReduce or require a staged migration. The practical approach is to run Spark for new analytics and progressively port or decommission MapReduce tasks while preserving governance and data lineage.

Is Spark more memory-intensive than Hadoop, and how should I size the cluster?

Yes. Spark typically needs more RAM than MapReduce because it caches datasets and keeps intermediate results in memory for iterative computations. Effective sizing involves provisioning enough RAM for executors, tuning the memory fraction and garbage collection strategy, and accounting for data skew and shuffle operations. A rule of thumb is to start with moderate executor memory, monitor memory pressure, and scale out the cluster as needed to keep executors busy without excessive spills to disk.
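The sizing arithmetic can be sketched as a small calculator. The defaults below (300 MiB reserved, memory fraction 0.6, storage fraction 0.5, overhead of 10% with a 384 MiB minimum) reflect the documented defaults of recent Spark releases as best understood here; treat them as assumptions and confirm them against the configuration reference for your version. The function name and the 8 GiB heap are illustrative.

```python
RESERVED_MIB = 300       # assumed fixed reserve inside the executor heap
MIN_OVERHEAD_MIB = 384   # assumed minimum off-heap overhead per executor


def executor_memory_budget(heap_mib, memory_fraction=0.6,
                           storage_fraction=0.5, overhead_factor=0.10):
    """Estimate how an executor's memory is divided, in MiB."""
    unified = (heap_mib - RESERVED_MIB) * memory_fraction
    storage = unified * storage_fraction  # soft boundary, not a hard cap
    overhead = max(MIN_OVERHEAD_MIB, heap_mib * overhead_factor)
    return {
        "unified_mib": unified,             # shared by execution and caching
        "storage_mib": storage,             # cache share protected from eviction
        "execution_mib": unified - storage,
        "container_mib": heap_mib + overhead,  # what the cluster manager must grant
    }


budget = executor_memory_budget(heap_mib=8192)
print(round(budget["unified_mib"]),
      round(budget["storage_mib"]),
      round(budget["container_mib"]))  # 4735 2368 9011
```

Under these assumptions an 8 GiB heap leaves well under 5 GiB for caching and shuffles combined, which is why a cached dataset that "should fit" often does not.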

How do I decide between on-premises and cloud deployments for Hadoop/Spark?

The decision depends on factors such as control requirements, data residency, total cost of ownership, and the ability to scale with demand. Cloud deployments offer rapid provisioning, managed security controls, and flexible billing, which can simplify governance and compliance for healthcare data. On-premises may be preferred for very large, stable workloads, existing data center investments, or strict data localization policies. In many cases, a hybrid approach that uses cloud for analytics bursts while preserving critical data in on-prem storage yields the best balance.

How does fault tolerance work in Spark, and how does it compare to Hadoop’s approach?

Spark achieves fault tolerance primarily through lineage: it records the transformations that produced each partition and recomputes lost partitions from the source data. Checkpointing and replicated storage levels can shorten recovery when lineage chains are long or intermediate results are expensive to rebuild. Hadoop’s fault tolerance relies on HDFS replication and the ability to re-execute failed map or reduce tasks, with intermediate data written to disk. In practice, Spark’s model enables faster recovery for in-memory workloads, while Hadoop’s robust, disk-based fault tolerance remains valuable for durable storage and long-running batch pipelines.
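Lineage-based recovery can be illustrated with a toy dataset that remembers how each partition was derived instead of keeping copies of it. The `LineageDataset` class below is a hypothetical model, not Spark's RDD implementation; the `source` list stands in for durable, replicated input blocks.

```python
class LineageDataset:
    """Toy dataset that rebuilds lost partitions from recorded transformations."""

    def __init__(self, source_partitions, transforms=()):
        self.source = source_partitions      # durable input, never lost here
        self.transforms = tuple(transforms)  # the lineage: ordered functions
        self.materialized = {}               # in-memory results per partition
        self.computations = 0                # how many partitions were computed

    def map(self, fn):
        """Return a new dataset whose lineage has one more step."""
        return LineageDataset(self.source, self.transforms + (fn,))

    def get(self, index):
        """Return a partition, recomputing it from lineage if it is missing."""
        if index not in self.materialized:
            rows = self.source[index]
            for fn in self.transforms:
                rows = [fn(r) for r in rows]
            self.materialized[index] = rows
            self.computations += 1
        return self.materialized[index]

    def lose_partition(self, index):
        """Simulate an executor failure that drops one in-memory partition."""
        self.materialized.pop(index, None)


source = [[1, 2], [3, 4], [5, 6]]
ds = LineageDataset(source).map(lambda x: x * 10).map(lambda x: x + 1)

before = [ds.get(i) for i in range(3)]
ds.lose_partition(1)
after = [ds.get(i) for i in range(3)]
print(before == after, ds.computations)  # True 4
```

Only the lost partition is rebuilt (the fourth computation), whereas a replication-based scheme would instead read a surviving copy and pay the storage cost up front.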

What are healthcare-specific considerations when choosing between Hadoop and Spark?

Healthcare deployments must prioritize data privacy, regulatory compliance, and auditability. This means enforcing strong access controls, encryption in transit and at rest, robust identity management, and detailed data lineage. Both platforms require careful governance, schema management, and policy enforcement to ensure PHI protection and compliant data sharing, while still enabling researchers and clinicians to extract meaningful insights at velocity appropriate to the use case.