The Rise of Multimodal AI: Text, Vision, and Audio Together

Overview of Multimodal AI in the Enterprise

Multimodal artificial intelligence is moving from a niche capability to core enterprise infrastructure. Organizations increasingly demand AI systems that can reason across text, vision, and audio to support decision making, collaboration, and customer engagement. The result is a shift from siloed, single-task AI applications to integrated platforms that can ingest diverse data streams, align signals, and produce coherent actions or insights. In practice, multimodal models unlock faster knowledge discovery, richer customer experiences, and more robust automation by reducing the need to manually translate information between modalities.

As this capability matures, business leaders are balancing the promise of unified perception with the realities of data governance, latency, and cost. The most compelling deployments tend to center on workflows where cross-modal understanding yields measurable improvements in accuracy, speed, or user satisfaction. Examples include real-time media analysis for brand safety, multimodal search for complex product catalogs, and assistive AI that interprets user intent across spoken language and visual cues. In this dynamic landscape, the competitive differentiator is not a single feature but the ability to orchestrate multiple modalities in a way that aligns with organizational goals and technical constraints.

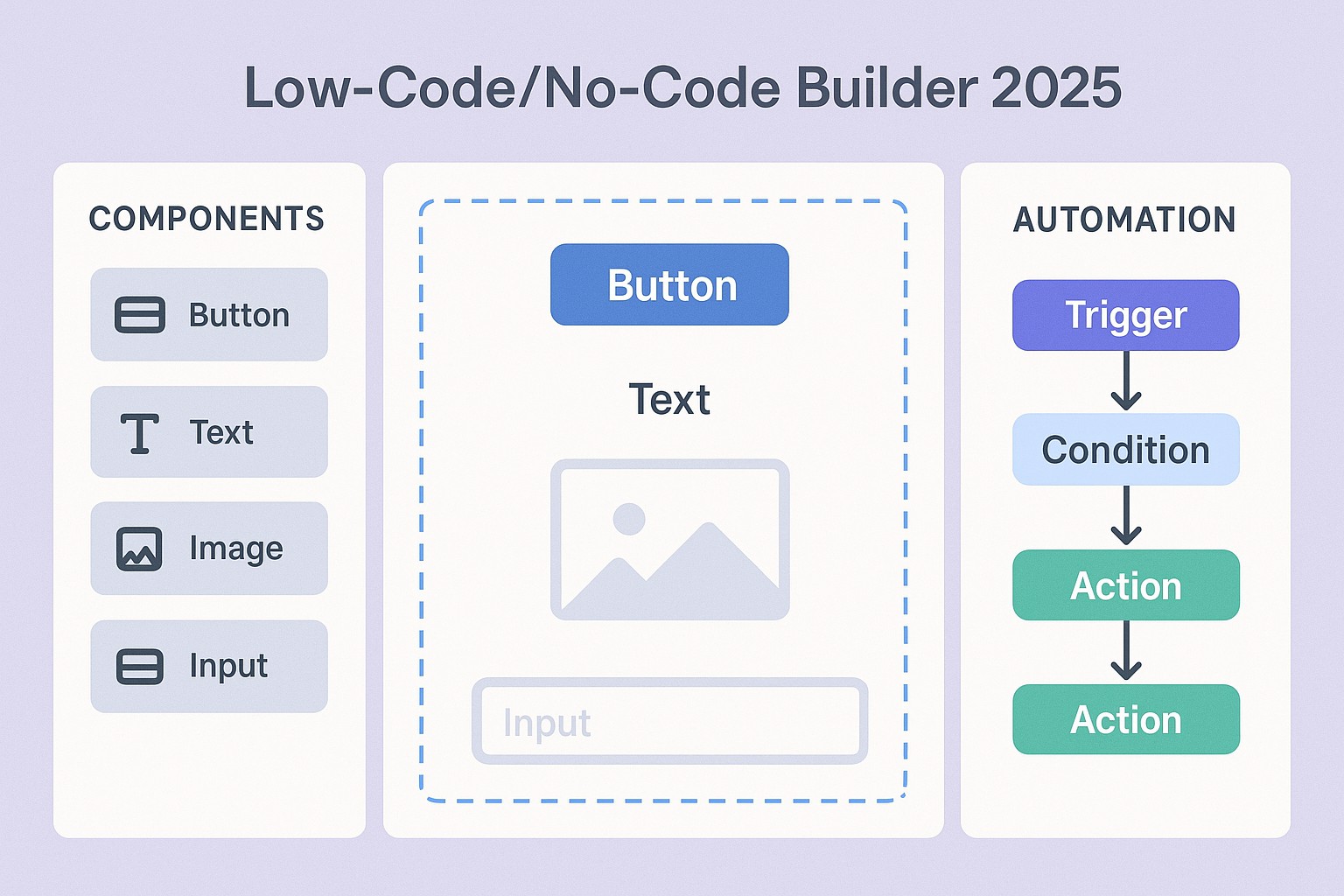

The Tech Stack: How Multimodal Models Are Built

At a high level, multimodal models integrate encoders for each modality—text, vision, and audio—and a fusion or alignment mechanism that aggregates signals into a shared representation. This architecture supports downstream tasks such as question answering, captioning, or task planning. Modern systems rely on transformer-based backbones, cross-attention modules, and scalable pretraining objectives that encourage cross-modal understanding without requiring bespoke pipelines for every task. The result is a flexible platform capable of adapting to new modalities or tasks with incremental updates rather than complete rewrites.
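
The cross-attention modules mentioned above can be illustrated with a minimal sketch of scaled dot-product attention for a single query. It assumes a text token acting as the query and image patches acting as keys and values, and it omits the learned projection matrices and multiple heads that production models use. The names `cross_attend`, `text_token`, and `image_patches` are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attend(query, keys, values):
    """Scaled dot-product attention for one query vector.

    query:  one vector from modality A (here, a text token)
    keys:   vectors from modality B (here, image patches)
    values: vectors from modality B that are mixed into the output
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return mixed, weights

# Toy data (assumed): the text token resembles the first image patch.
text_token = [1.0, 0.0]
image_patches = [[1.0, 0.0], [0.0, 1.0]]
context, weights = cross_attend(text_token, image_patches, image_patches)
```

In this toy case the first patch receives the larger attention weight because it points in the same direction as the text token.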

Key design decisions in this space include how to balance modality-specific processing against a shared latent space, how to handle missing or noisy inputs, and how to optimize inference latency for real-time use cases. Enterprises often adopt a modular approach: specialized encoders tuned for domain data, a central fusion layer that learns cross-modal correlations, and task-specific heads that map fused representations to actionable outputs. This modularity supports governance, testing, and risk management while enabling faster experimentation and deployment at scale.
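
One simple way to handle a missing input at the fusion layer is to average only the modalities that are present. The sketch below assumes each encoder already emits a vector of the same dimension and that `None` marks an absent modality. The function name `fuse` is hypothetical, and real fusion layers are typically learned rather than a plain average.

```python
from typing import Dict, List, Optional

Vector = List[float]

def fuse(embeddings: Dict[str, Optional[Vector]]) -> Vector:
    """Average the embeddings of whichever modalities are present.

    A value of None marks a missing modality. It is skipped rather than
    replaced by zeros, so absent inputs do not pull the average toward zero.
    """
    present = [vec for vec in embeddings.values() if vec is not None]
    if not present:
        raise ValueError("at least one modality is required")
    dim = len(present[0])
    if any(len(vec) != dim for vec in present):
        raise ValueError("all embeddings must share one dimension")
    return [sum(vec[i] for vec in present) / len(present) for i in range(dim)]

# Toy embeddings (assumed values), with and without the audio stream.
full = fuse({"text": [1.0, 0.0], "vision": [0.0, 1.0], "audio": [1.0, 1.0]})
no_audio = fuse({"text": [1.0, 0.0], "vision": [0.0, 1.0], "audio": None})
```

Skipping absent modalities keeps the fused vector on a comparable scale whether two or three streams arrive, which makes downstream heads less sensitive to dropouts.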

“Multimodal AI is not just about combining signals; it’s about ensuring that the combined signal preserves reliability, explainability, and business relevance across end-to-end workflows.”

Data and Training: Multimodal Datasets and Challenges

Training truly multimodal models hinges on data that is aligned across modalities and labeled with care. Enterprises face three core challenges: acquiring diverse, high-quality datasets that cover real-world usage; ensuring privacy and regulatory compliance when data originates from customers or employees; and maintaining data lineage that supports auditing and governance. Multi-sensor or multi-stream datasets, such as text transcripts paired with video frames and audio cues, require careful synchronization and robust preprocessing to maximize learning signal while minimizing noise.
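
As a rough illustration of the synchronization step, the sketch below pairs video frame timestamps with the transcript segment spoken at that moment. It assumes segments are sorted by start time and do not overlap. The `Segment` class and `align_frames` function are hypothetical names, not part of any specific toolkit.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Segment:
    start: float  # seconds
    end: float    # seconds
    text: str

def align_frames(frame_times: List[float], segments: List[Segment]) -> List[Optional[str]]:
    """Return, for each frame timestamp, the transcript text spoken at that time.

    Assumes segments are sorted by start time and do not overlap.
    Frames that fall outside every segment map to None.
    """
    starts = [seg.start for seg in segments]
    aligned: List[Optional[str]] = []
    for t in frame_times:
        idx = bisect_right(starts, t) - 1  # last segment starting at or before t
        if idx >= 0 and t < segments[idx].end:
            aligned.append(segments[idx].text)
        else:
            aligned.append(None)
    return aligned

# Toy data (assumed): two spoken segments with a one-second gap between them.
segments = [Segment(0.0, 2.0, "hello"), Segment(3.0, 5.0, "world")]
pairs = align_frames([0.5, 2.5, 3.0, 6.0], segments)
```

Frames that land in silence are kept with a `None` label rather than dropped, so a later preprocessing step can decide whether to filter them or treat them as examples with the text modality absent.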

Data governance becomes a competitive advantage in this domain. Organizations that implement rigorous data curation practices—covering consent management, data minimization, and bias monitoring—tend to achieve better generalization and safer products. Transfer learning and domain adaptation techniques help leverage large, generic multimodal corpora while fine-tuning on organization-specific data, enabling models to reflect the unique vocabulary, visual style, or acoustic environment of a company’s operation. The outcome is a more reliable system that can adapt to changing business contexts without prohibitive retraining costs.

Architectures and Data Flow

Multimodal models operate through a carefully choreographed data flow that begins with modality-specific encoders and culminates in a shared, task-appropriate representation. The typical pipeline includes: (1) modality-specific feature extraction, (2) cross-modal alignment or fusion, (3) a task head or planner, and (4) an optimization loop that ties outputs to business metrics. This structure allows teams to swap components (for example, a newer vision encoder) without rearchitecting the entire system, supporting rapid iteration while preserving system stability.

- Text encoder for natural language understanding and generation

- Vision encoder to process images or video frames

- Audio encoder to capture speech, tone, and ambient cues

- Cross-modal fusion module to integrate signals from all modalities

- Task-specific heads or controllers for downstream actions
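
The first three pipeline stages can be sketched as a small container whose parts are plain callables, which is one way to make a component swappable. Everything here is a toy assumption: the `Pipeline` class, the stand-in encoders, and the threshold in the head are hypothetical, and the optimization loop (stage 4) is omitted.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Vector = List[float]
Encoder = Callable[[object], Vector]

@dataclass
class Pipeline:
    encoders: Dict[str, Encoder]            # stage 1: one encoder per modality
    fuse: Callable[[List[Vector]], Vector]  # stage 2: cross-modal fusion
    head: Callable[[Vector], str]           # stage 3: task head

    def run(self, inputs: Dict[str, object]) -> str:
        features = [self.encoders[name](raw) for name, raw in inputs.items()]
        return self.head(self.fuse(features))

def concat(features: List[Vector]) -> Vector:
    """Fusion by concatenation, the simplest possible stand-in."""
    return [x for vec in features for x in vec]

pipeline = Pipeline(
    encoders={
        "text": lambda s: [float(len(s))],              # toy text encoder
        "vision": lambda pixels: [float(sum(pixels))],  # toy vision encoder
    },
    fuse=concat,
    head=lambda fused: "review" if sum(fused) > 10 else "pass",
)
before = pipeline.run({"text": "hi", "vision": [1, 2, 3]})

# Swap in a different vision encoder; fusion and head are untouched.
pipeline.encoders["vision"] = lambda pixels: [float(max(pixels)) * 10]
after = pipeline.run({"text": "hi", "vision": [1, 2, 3]})
```

Because the fusion step and head only see vectors, replacing one encoder changes the output without touching the rest of the pipeline, provided the new encoder keeps the same output shape.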

Benchmarking and Evaluation

Establishing robust benchmarks for multimodal AI requires more than measuring single-modality accuracy. Enterprises assess cross-modal alignment, robustness to missing modalities, interpretability for non-technical stakeholders, and latency under load. Evaluation often combines quantitative metrics, such as retrieval accuracy, caption quality, and visual question answering (VQA) performance, with qualitative checks that examine user experience, safety, and compliance. Realistic benchmarks incorporate domain-specific data and simulate end-to-end workflows to reveal how models perform in production settings.

- Define representative tasks and success criteria aligned with business goals

- Select metrics that capture cross-modal performance, reliability, and user impact

- Test cross-domain generalization, failure modes, and resilience to incomplete inputs
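
A small sketch of how retrieval accuracy and resilience to incomplete inputs might be reported side by side: it computes recall@k for the same queries with all modalities present and with audio removed. The ranked lists are made-up placeholders standing in for real system output, and the function names are hypothetical.

```python
def recall_at_k(ranked_ids, relevant_id, k):
    """1.0 if the relevant item appears in the top k results, else 0.0."""
    return 1.0 if relevant_id in ranked_ids[:k] else 0.0

def mean_recall_at_k(results, k):
    """results: list of (ranked_ids, relevant_id) pairs, one per query."""
    return sum(recall_at_k(ranked, rel, k) for ranked, rel in results) / len(results)

# Placeholder retrieval outputs for the same three queries under two conditions.
all_modalities = [(["a", "b", "c"], "a"), (["d", "e", "f"], "e"), (["g", "h", "i"], "i")]
audio_dropped = [(["b", "a", "c"], "a"), (["d", "f", "e"], "e"), (["h", "g", "i"], "i")]

report = {
    "recall@2, all modalities": mean_recall_at_k(all_modalities, 2),
    "recall@2, audio dropped": mean_recall_at_k(audio_dropped, 2),
}
```

The gap between the two rows is the quantity of interest: it estimates how much the system depends on the dropped modality for this task.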

Business and Operational Implications

Adopting multimodal AI entails not only technical readiness but also governance, operating model changes, and cost management. Enterprises must evaluate total cost of ownership, including data curation, compute for training and inference, and ongoing model monitoring. Operationally, teams need clear guardrails for model updates, versioning, rollback strategies, and documentation that makes AI behavior auditable. The most successful programs integrate AI into existing workflows, providing measurable improvements in speed, accuracy, or decision quality while maintaining compliance with data protection and industry standards.

From a governance perspective, model cards, risk assessments, and incident response plans become essential. Cross-functional collaboration among data science, product management, legal, and risk teams enables a balanced approach to deployment that respects both performance and safety requirements. As models evolve, organizations that embed continuous improvement loops and transparent reporting are better positioned to demonstrate value to stakeholders and customers alike.

Use Cases Across Industries

Across sectors, multimodal AI unlocks a spectrum of capabilities that previously required bespoke pipelines. In retail and e-commerce, multimodal search and product understanding improve discoverability and reduce shopping friction. In manufacturing and logistics, multimodal monitoring can correlate textual signals with visual or acoustic cues to detect anomalies and optimize operations. In media and entertainment, automated content analysis and accessibility features become more scalable, while in healthcare, multimodal systems assist triage and patient communication when privacy and accuracy requirements are met. The common thread is a platform that can reason about information that spans words, images, and sounds, delivering outputs that are actionable and aligned with business outcomes.

- Multimodal search and retrieval across product catalogs and media libraries

- Automated content moderation, safety, and brand protection with cross-modal signals

- Enhanced customer support and virtual assistants using spoken language, visuals, and context

Risks, Ethics, and Governance

As multimodal AI becomes intertwined with decision-making, organizations confront ethical, legal, and operational risk. Bias in any modality can propagate through the system and be amplified when fused with other signals. Privacy concerns are magnified when audio and video inputs capture contextual information about individuals. Robust governance requires end-to-end visibility into data provenance, model behavior, and the impact of AI outputs on users and business processes. Proactive risk assessment, explainability efforts, and red-teaming exercises help organizations identify vulnerabilities before deployment and support accountable innovation.

Security considerations extend to model poisoning, prompt injection risks, and misalignment between model outputs and real-world constraints. Enterprises should implement monitoring for drift, performance degradation, and unexpected behavior, with clear escalation paths and remediation playbooks. By treating risk management as an ongoing process rather than a one-time compliance exercise, organizations can sustain responsible deployment while unlocking the value of multimodal AI.
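
Monitoring for performance degradation can start with something as simple as a rolling mean compared against a baseline. The sketch below is one such check under assumed values: the `DriftMonitor` class, the baseline of 0.90, the tolerance of 0.05, and the window size are all hypothetical and would need tuning for a real metric.

```python
from collections import deque

class DriftMonitor:
    """Flags drift when the rolling mean of a metric falls below baseline - tolerance."""

    def __init__(self, baseline, tolerance, window):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)

    def observe(self, score):
        """Record one score; return True if the window is full and has drifted."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data yet
        rolling_mean = sum(self.scores) / len(self.scores)
        return rolling_mean < self.baseline - self.tolerance

# Assumed stream of per-batch quality scores that degrades over time.
monitor = DriftMonitor(baseline=0.90, tolerance=0.05, window=3)
alerts = [monitor.observe(s) for s in [0.91, 0.89, 0.90, 0.80, 0.78, 0.76]]
```

An alert from a check like this would feed the escalation path rather than trigger automatic remediation, since a single metric cannot distinguish data drift from a labeling or logging problem.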

The Road Ahead: Trends and Market Dynamics

Industry momentum suggests that multimodal AI will continue to permeate enterprise software stacks, with deeper integration into enterprise data platforms, CRM, and content management ecosystems. Advances in instruction-tuning, few-shot adaptability, and on-device inference are likely to reduce latency and bandwidth requirements, broadening access to edge environments and privacy-preserving use cases. Market dynamics point to a growing ecosystem of platforms and vendors offering modular multimodal capabilities, accompanied by stronger governance tools and standardized evaluation frameworks. For business leaders, success will hinge on aligning technology roadmaps with real-world workflows, maintaining disciplined data governance, and investing in talent capable of translating abstract multimodal capabilities into tangible business outcomes.

FAQ

How do multimodal models handle alignment across modalities?

Alignment across text, vision, and audio is achieved through a combination of shared latent representations and cross-modal attention mechanisms. During training, the model learns to map signals from each modality into a common feature space where correlations can be detected and exploited. Attention layers help the system focus on the most informative cues across modalities for a given task, while regularization and alignment objectives ensure that, for example, a caption corresponds to the observed image or that spoken input maps to the correct textual interpretation. In production, alignment is continuously validated against task-specific metrics and monitored for drift.
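
One common family of alignment objectives is contrastive: matching image and caption embeddings are pulled together and mismatched pairs are pushed apart. The sketch below computes an image-to-text InfoNCE-style loss on toy vectors. The article does not name a specific objective, so this is an illustrative assumption, and the temperature of 0.1 is arbitrary.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def contrastive_loss(image_vecs, text_vecs, temperature=0.1):
    """Image-to-text InfoNCE-style loss; item i of each list is a matching pair.

    For each image, the matching caption is the positive and every other
    caption in the batch is a negative.
    """
    images = [normalize(v) for v in image_vecs]
    texts = [normalize(v) for v in text_vecs]
    total = 0.0
    for i, img in enumerate(images):
        logits = [dot(img, txt) / temperature for txt in texts]
        peak = max(logits)
        log_sum = peak + math.log(sum(math.exp(l - peak) for l in logits))
        total += log_sum - logits[i]  # negative log-probability of the true pair
    return total / len(images)

# Toy embeddings (assumed): correctly paired versus deliberately mismatched.
aligned = contrastive_loss([[1, 0], [0, 1]], [[1, 0], [0, 1]])
shuffled = contrastive_loss([[1, 0], [0, 1]], [[0, 1], [1, 0]])
```

The loss is close to zero when each image sits nearest its own caption in the shared space and grows when the pairs are mismatched. Symmetric variants average this with the text-to-image direction.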

What are the primary barriers to enterprise adoption?

Barriers include data hygiene and governance challenges, concerns about privacy and compliance, and the complexity of integrating multimodal models with existing IT ecosystems. Compute costs for training at scale and the need for specialized talent to manage data pipelines and model governance can be substantial. Additionally, risk management requires robust monitoring, explainability, and incident response capabilities to satisfy regulatory and customer expectations. Organizations that invest in data infrastructure, governance frameworks, and cross-functional collaboration tend to overcome these hurdles more effectively.

How should organizations evaluate multimodal AI vendors?

Evaluation should consider technical fit, governance practices, and deployment readiness. Key criteria include model performance across your target modalities and use cases, data privacy commitments, explainability and auditing capabilities, and the ability to integrate with your existing data pipelines and workflows. It is also important to assess vendor support for ongoing updates, risk management tooling, and transparent reporting on bias, safety, and regulatory compliance. A structured pilot program that mirrors real-world tasks can reveal how a solution performs under operational conditions before broader rollout.