Predictive Analytics Examples and Use Cases (Real-World Applications)

What predictive analytics means for contemporary businesses

Predictive analytics combines historical data, statistical modeling, and machine learning to forecast future events or behaviors. In practice, it helps organizations move beyond reactive decision-making toward proactive strategies that anticipate demand, customer needs, risk exposures, and operational bottlenecks. By translating patterns in data into actionable predictions, businesses can allocate resources more efficiently, personalize interactions, and reduce uncertainty in strategic planning.

The value of predictive analytics lies not only in the accuracy of the forecasts but also in the actionable context that accompanies them. Predictions are most effective when they are integrated into decision workflows, with clear owners, performance metrics, and escalation paths. Rather than serving as a one-off report, predictive analytics becomes a continuous capability that informs planning, optimization, and risk mitigation across departments. For leaders, the challenge is to balance model complexity with explainability, ensuring that stakeholders can trust and act on the recommendations without being overwhelmed by technical detail.

Ultimately, predictive analytics supports a disciplined approach to decision making. It helps quantify trade-offs, test scenarios, and monitor outcomes in near real time. When deployed with governance, ethics, and data quality controls, predictive analytics can deliver measurable improvements in efficiency, revenue, and customer satisfaction while maintaining compliance with privacy and industry standards.

Common applications include:

- Forecasting and demand planning

- Risk assessment and mitigation

- Customer behavior prediction and segmentation

- Personalization and dynamic pricing

- Operational optimization and anomaly detection

Data, models, and the analytics workflow

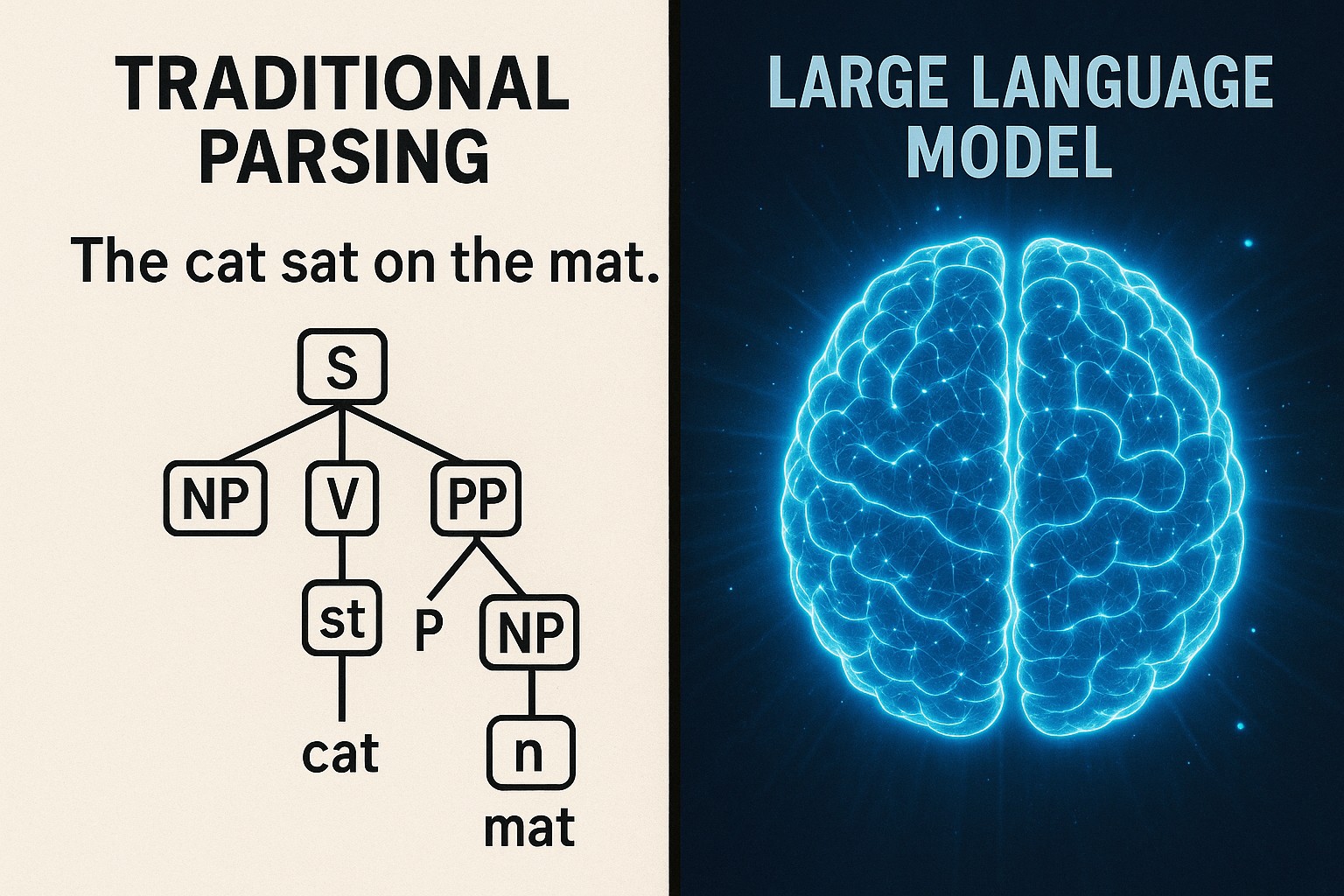

A successful predictive analytics program starts with clean data, well-defined objectives, and a reproducible modeling process. Data quality, provenance, and governance determine the reliability of the predictions. Teams typically combine internal data—sales, operations, customer interactions—with external sources such as market indicators, weather, or social sentiment to enrich the feature set. Feature engineering, data normalization, and careful handling of missing values are essential steps that can dramatically influence model performance.
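As a rough illustration of the preparation steps named above, the sketch below imputes missing values with the median, standardizes a numeric column, and builds lagged features from a daily series. The function names and the `daily_sales` figures are hypothetical, and it uses only the Python standard library; production pipelines would more typically rely on a dataframe library.

```python
from statistics import mean, median, pstdev

def impute_median(values):
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

def standardize(values):
    """Rescale to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0] * len(values)  # a constant column carries no signal
    return [(v - mu) / sigma for v in values]

def lag_features(series, lags):
    """Row for time t holds series[t - k] for each k in `lags`.

    The first max(lags) points are dropped because their lags are unavailable.
    """
    start = max(lags)
    return [[series[t - k] for k in lags] for t in range(start, len(series))]

# Hypothetical daily unit sales with two missing days.
daily_sales = [120, None, 130, 125, None, 140, 150]
clean = impute_median(daily_sales)
scaled = standardize(clean)
features = lag_features(clean, lags=[1, 2])
print(clean)        # [120, 130, 130, 125, 130, 140, 150]
print(features[0])  # [130, 120]: the previous day's and the day before's sales
```

In a real time-series setting, the median and the standardization statistics would be computed on the training window only and then reused for validation data; computing them over the full series lets information from the future leak into the features.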

Model selection depends on the objective, data characteristics, and the required balance between accuracy and interpretability. Common approaches range from traditional regression and time-series models to advanced machine learning techniques like gradient boosting, random forests, and, increasingly, neural networks for complex pattern recognition. Regardless of the method, robust validation—cross-validation, out-of-sample testing, and backtesting for time-sensitive tasks—is critical to avoid overfitting and to quantify the model’s real-world performance.
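For time-ordered data, a rolling-origin (walk-forward) backtest is one common way to carry out the out-of-sample testing described above. The standard-library sketch below compares a naive last-value forecast with a three-period moving average; both forecasters and the `demand` numbers are illustrative assumptions rather than recommended models.

```python
from statistics import mean

def naive_forecast(history, horizon):
    """Repeat the last observed value across the horizon."""
    return [history[-1]] * horizon

def moving_average_forecast(history, horizon, window=3):
    """Repeat the mean of the last `window` observations across the horizon."""
    return [mean(history[-window:])] * horizon

def rolling_origin_mae(series, forecaster, initial, horizon):
    """Walk-forward backtest returning the mean absolute error across origins.

    At each origin the forecaster sees only series[:origin], mirroring how the
    model would have been used at that point in time, and is scored on the
    next `horizon` observations.
    """
    fold_errors = []
    for origin in range(initial, len(series) - horizon + 1):
        history = series[:origin]
        actual = series[origin:origin + horizon]
        predicted = forecaster(history, horizon)
        fold_errors.append(mean(abs(a - p) for a, p in zip(actual, predicted)))
    return mean(fold_errors)

# Hypothetical weekly demand; the first four weeks form the initial training window.
demand = [10, 12, 11, 13, 12, 14, 13, 15]
naive_mae = rolling_origin_mae(demand, naive_forecast, initial=4, horizon=1)
ma_mae = rolling_origin_mae(demand, moving_average_forecast, initial=4, horizon=1)
print(f"naive MAE: {naive_mae:.2f}, moving-average MAE: {ma_mae:.2f}")
# naive MAE: 1.50, moving-average MAE: 1.00
```

Scoring every candidate model against a simple baseline under the same backtest is a quick check that added model complexity actually pays off.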

Governance and ethics should be woven into the workflow from the start. This includes documenting assumptions, enabling explainability for stakeholders, monitoring for bias, and establishing controls to manage model drift once deployed. Deployment is not the end of the journey; it requires monitoring dashboards, alerting, and a plan for updating models as data evolves. Operationalization also involves embedding predictions into business processes, whether through automated decision engines, decision-support interfaces, or human-in-the-loop review.
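One widely used way to watch for input drift after deployment is the population stability index (PSI), which compares a feature's binned distribution at training time with its distribution in recent production data. The sketch below is a minimal standard-library version; the bin edges, the sample values, and the 0.25 alert level (a commonly quoted rule of thumb, not a standard) are assumptions to adapt per feature.

```python
import bisect
import math

def bin_proportions(values, edges, eps=1e-6):
    """Share of values in each bin defined by the sorted cut points `edges`.

    `eps` replaces empty-bin shares so the log term below stays finite.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]

def population_stability_index(reference, recent, edges):
    """PSI = sum over bins of (recent% - reference%) * ln(recent% / reference%)."""
    ref = bin_proportions(reference, edges)
    cur = bin_proportions(recent, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

# Hypothetical feature values: training-time sample vs. a recent production sample.
training_values = [1, 2, 3, 4, 5, 6, 7, 8]
recent_values = [5, 6, 7, 8, 7, 8, 6, 8]
cut_points = [2.5, 4.5, 6.5]
psi = population_stability_index(training_values, recent_values, cut_points)
if psi > 0.25:  # rule-of-thumb alert level; tune per use case
    print(f"PSI {psi:.2f}: input distribution has shifted; review the model")
```

PSI only flags shifts in the inputs. A change in the relationship between inputs and outcomes can occur without one, so tracking realized accuracy as outcomes arrive is still needed.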

A typical workflow follows these steps:

- Define objective and success criteria

- Acquire, clean, and integrate data from trustworthy sources

- Explore data and engineer meaningful features

- Split data into training and validation sets, and choose appropriate models

- Validate, test, and calibrate models under realistic scenarios

- Deploy with monitoring, governance, and a plan for updates
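For probability outputs such as churn or default risk, the "calibrate" step usually begins by checking whether predicted probabilities match observed frequencies. The sketch below computes a Brier score and a simple reliability table in plain Python; the bin count and validation data are hypothetical, and the correction step itself (for example Platt scaling or isotonic regression) is not shown.

```python
def brier_score(probs, outcomes):
    """Mean squared gap between predicted probabilities and 0/1 outcomes (lower is better)."""
    return sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / len(probs)

def reliability_table(probs, outcomes, n_bins=5):
    """Compare the average predicted probability with the observed event rate per bin.

    Bins are equal-width on [0, 1]; a well-calibrated model keeps the two
    columns close to each other in every populated bin.
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the top bin
        bins[idx].append((p, y))
    rows = []
    for idx, members in enumerate(bins):
        if members:
            avg_pred = sum(p for p, _ in members) / len(members)
            observed = sum(y for _, y in members) / len(members)
            rows.append((idx, len(members), round(avg_pred, 3), round(observed, 3)))
    return rows

# Hypothetical validation-set predictions and outcomes.
val_probs = [0.1, 0.1, 0.3, 0.7, 0.9, 0.9]
val_outcomes = [0, 0, 1, 0, 1, 1]
print(f"Brier score: {brier_score(val_probs, val_outcomes):.3f}")
for row in reliability_table(val_probs, val_outcomes):
    print(row)  # (bin, count, mean predicted, observed rate)
```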

Industry use cases across sectors

Predictive analytics touches almost every sector, but the value comes from tailoring the approach to the unique drivers of each industry. Retailers forecast demand and optimize assortments to reduce stockouts while boosting margins. Financial institutions assess credit risk, detect fraud, and price products in ways that reflect evolving customer profiles. Healthcare providers use predictions to identify high-risk patients, optimize resource allocation, and support precision medicine. Manufacturers apply predictive maintenance to minimize downtime and extend asset life. Telecommunications providers forecast churn and optimize network performance to improve reliability and customer experience.

Across industries, common patterns emerge: the most successful programs link data and models to concrete operational workflows, measure impact in business terms, and maintain a disciplined approach to data privacy and model governance. In practice, this often means starting with a high-value use case, delivering a measurable early win, and then scaling the solution across processes and geographies. The resulting capability becomes part of an adaptive operating model that can respond quickly to market changes, competitive pressure, and shifting customer expectations.

Industries and typical use cases:

- Retail and e-commerce: demand forecasting, inventory optimization, promotional response modeling

- Banking and fintech: credit risk scoring, fraud detection, customer lifetime value estimation

- Healthcare: patient readmission risk prediction, treatment response modeling, proactive care management

- Manufacturing: predictive maintenance, quality yield prediction, supply chain risk assessment

- Telecommunications: churn prediction, network fault detection, capacity planning

Deep-dive: forecasting demand, churn, and risk scoring

Demand forecasting combines internal signals, such as historical sales, seasonality, and promotions, with external indicators such as competitive activity. The goal is not only to predict quantities but also to quantify their uncertainty through prediction intervals and scenario-based outcomes. This enables better inventory planning, pricing decisions, and calibration of marketing activity. In practice, teams design dashboards that present both point forecasts and upper and lower bounds, along with recommended actions anchored in policy and capacity constraints. When integrated into planning cycles, demand forecasts become a central input for budgeting, merchandising, and logistics.
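To make the idea of a point forecast with upper and lower bounds concrete, the sketch below applies simple exponential smoothing to a short weekly series and derives an approximate interval from its one-step-ahead errors. The smoothing constant, the normal-error assumption, and the `weekly_units` data are illustrative. Simple exponential smoothing has no trend or seasonal component, so a real demand model would usually need something richer, such as Holt-Winters or a regression with promotion and calendar features.

```python
from statistics import stdev

def ses_forecast_with_interval(series, alpha=0.3, z=1.96):
    """Simple exponential smoothing with an approximate prediction interval.

    Returns (point, lower, upper) for the next period. The interval uses the
    spread of in-sample one-step-ahead errors and assumes they are roughly
    normal and independent; z = 1.96 targets about 95% coverage.
    Needs at least three observations so that two errors are available.
    """
    level = series[0]
    errors = []
    for y in series[1:]:
        errors.append(y - level)                  # error of the forecast made before seeing y
        level = alpha * y + (1 - alpha) * level   # update the smoothed level
    half_width = z * stdev(errors)
    return level, level - half_width, level + half_width

# Hypothetical weekly unit sales for one product.
weekly_units = [120, 132, 128, 141, 137, 150, 146]
point, lower, upper = ses_forecast_with_interval(weekly_units)
print(f"next week: {point:.0f} units (approx. 95% interval {lower:.0f} to {upper:.0f})")
```

Because this series trends upward, the smoothed level lags behind recent values, which is exactly the kind of bias a backtest would expose before such a model reaches a planning dashboard.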

Churn prediction focuses on customer behavior trajectories that signal a likelihood of disengagement. By modeling early indicators—usage patterns, satisfaction signals, service interactions, and price sensitivity—organizations can intervene with targeted retention campaigns, personalized offers, or product improvements before a churn event occurs. The value lies in turning predictive signals into timely, proportionate actions that preserve lifetime value and reduce acquisition costs. Responsible application also demands transparency about data usage and fairness across customer segments.
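A churn model is often a binary classifier that outputs a probability per customer, which can then be ranked to decide who receives a retention offer. The standard-library sketch below fits a logistic regression by batch gradient descent on two hypothetical early-warning features (fractional drop in monthly usage and recent support tickets); the data, learning rate, and epoch count are assumptions chosen only to keep the example small.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, x):
    """weights[0] is the intercept; the rest pair with the features in x."""
    return sigmoid(weights[0] + sum(w * xj for w, xj in zip(weights[1:], x)))

def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Batch gradient descent on the average log-loss."""
    weights = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(weights)
        for xi, yi in zip(X, y):
            err = predict_proba(weights, xi) - yi  # derivative of log-loss w.r.t. the logit
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        weights = [w - lr * g / len(X) for w, g in zip(weights, grad)]
    return weights

# Hypothetical history: [usage drop (fraction), support tickets in last 30 days] -> churned?
X_train = [[0.00, 0], [0.10, 1], [0.05, 0], [0.60, 3], [0.70, 4], [0.50, 2]]
y_train = [0, 0, 0, 1, 1, 1]
weights = fit_logistic(X_train, y_train)

# Rank current customers by churn risk so retention effort goes to the top of the list.
customers = {"cust_a": [0.02, 0], "cust_b": [0.65, 3], "cust_c": [0.30, 1]}
ranked = sorted(customers, key=lambda c: predict_proba(weights, customers[c]), reverse=True)
print(ranked)
```

A ranked list like this is where the "timely, proportionate actions" come in: the cutoff for intervention and the type of offer are business decisions layered on top of the scores.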

Risk scoring blends historical performance with forward-looking indicators to quantify exposure across different dimensions—credit, operational safety, compliance, and strategic risk. Effective risk models integrate cross-functional inputs, maintain explainability for decision-makers, and include processes to monitor drift or new risk signals. A mature risk program uses predictive insights to prioritize mitigations, allocate buffers, and inform contingency planning, while avoiding false positives that could disrupt legitimate activities or customer experiences.
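One way to act on "avoiding false positives" is to fix an acceptable false-positive rate and then choose the score cutoff that catches as many true risk events as possible within it. The sketch below does this by scanning candidate thresholds; the scores, labels, and 20% cap are hypothetical, and in practice the cap would reflect the cost of investigating or blocking legitimate activity.

```python
def threshold_for_fpr(scores, labels, max_fpr):
    """Lowest cutoff whose false-positive rate is at most `max_fpr`.

    labels: 1 = actual risk event (e.g. confirmed fraud), 0 = legitimate.
    A case is flagged when score >= cutoff. Lowering the cutoff can only add
    flags, so the lowest feasible cutoff also gives the highest recall.
    Returns (cutoff, recall, fpr), or None if no cutoff satisfies the cap.
    Assumes both classes are present.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    best = None
    for cutoff in sorted(set(scores), reverse=True):
        flagged = [label for score, label in zip(scores, labels) if score >= cutoff]
        tp = sum(flagged)
        fp = len(flagged) - tp
        if fp / negatives > max_fpr:
            break  # every lower cutoff has at least as many false positives
        best = (cutoff, tp / positives, fp / negatives)
    return best

# Hypothetical validation scores from a fraud model, with confirmed outcomes.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10, 0.05]
labels = [1, 1, 0, 1, 0, 0, 1, 0, 0, 0]
print(threshold_for_fpr(scores, labels, max_fpr=0.2))
# (0.7, 0.75, 0.16666666666666666)
```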

Implementation considerations: governance, ethics, and risk management

A responsible predictive analytics program treats data security, privacy, and bias as foundational, not afterthoughts. Data governance defines ownership, access controls, retention policies, and lineage so stakeholders understand where data comes from and how it flows through models. Privacy protections—such as minimization, anonymization, and appropriate consent mechanisms—are essential when handling personal or sensitive data. Model explainability remains important, particularly in regulated industries, for audits and for earning trust among leadership, customers, and partners.
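As a small example of data minimization, the sketch below keeps only the fields a model needs and replaces the customer identifier with a keyed hash (HMAC-SHA256 from the Python standard library). Note that this is pseudonymization rather than anonymization: anyone holding the key, or able to link the remaining attributes, may still re-identify people, so it complements rather than replaces the other controls. The field names and key are hypothetical.

```python
import hashlib
import hmac

def pseudonymize(identifier, secret_key):
    """Replace a direct identifier with a keyed HMAC-SHA256 digest.

    The same identifier and key always give the same token, so records can
    still be joined across tables without exposing the raw ID.
    """
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def minimize_record(record, allowed_fields, id_field, secret_key):
    """Keep only the fields the model needs, plus a pseudonymized join key."""
    slim = {k: v for k, v in record.items() if k in allowed_fields}
    slim[id_field] = pseudonymize(record[id_field], secret_key)
    return slim

# Hypothetical key; in practice it would come from a secrets manager, never source code.
KEY = b"example-key-for-illustration-only"
raw = {"customer_id": "C-1001", "email": "pat@example.com", "tenure_months": 18, "monthly_spend": 42.5}
model_input = minimize_record(raw, {"tenure_months", "monthly_spend"}, "customer_id", KEY)
print(model_input)
```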

Ethical considerations must accompany technical choices. Algorithms can inadvertently reproduce or amplify existing biases if training data reflect historical disparities. Regular bias audits, diverse evaluation datasets, and guardrails against disparate impact are critical components of a mature program. Monitoring for model drift—where the relationship between inputs and outputs changes over time—ensures forecasts remain relevant. When failures occur, rapid remediation processes and rollback capabilities help maintain resilience and stakeholder confidence.
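A simple starting point for a bias audit is to compare favorable-outcome rates across groups. The sketch below computes each group's selection rate and the ratio of the lowest to the highest. The 0.8 cutoff echoes the "four-fifths rule" from US employment-selection guidance and serves here only as a screening heuristic; the decisions and group labels are hypothetical.

```python
def selection_rates(decisions, groups):
    """Share of favorable decisions (1 = approved/offered) within each group."""
    totals, favorable = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + decision
    return {g: favorable[g] / totals[g] for g in totals}

def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest (1.0 = parity).

    Assumes at least one group has a nonzero selection rate.
    """
    rates = selection_rates(decisions, groups)
    return min(rates.values()) / max(rates.values())

# Hypothetical model-driven approvals for two customer segments.
decisions = [1, 1, 1, 0, 1, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
ratio = disparate_impact_ratio(decisions, groups)
if ratio < 0.8:  # screening heuristic, not a legal test
    print(f"ratio {ratio:.2f}: investigate before deployment")
```

A low ratio is a prompt for investigation rather than proof of unfairness, and other criteria, such as comparing error rates across groups, may matter more for a given use case.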

Operational readiness determines how predictions translate into action. This includes integrating outputs into business workflows, building feedback loops to capture real-world outcomes, and ensuring humans retain appropriate oversight where necessary. Finally, aligning incentives and governance with strategic objectives helps sustain commitment to continuous improvement, budget allocation, and cross-functional collaboration.

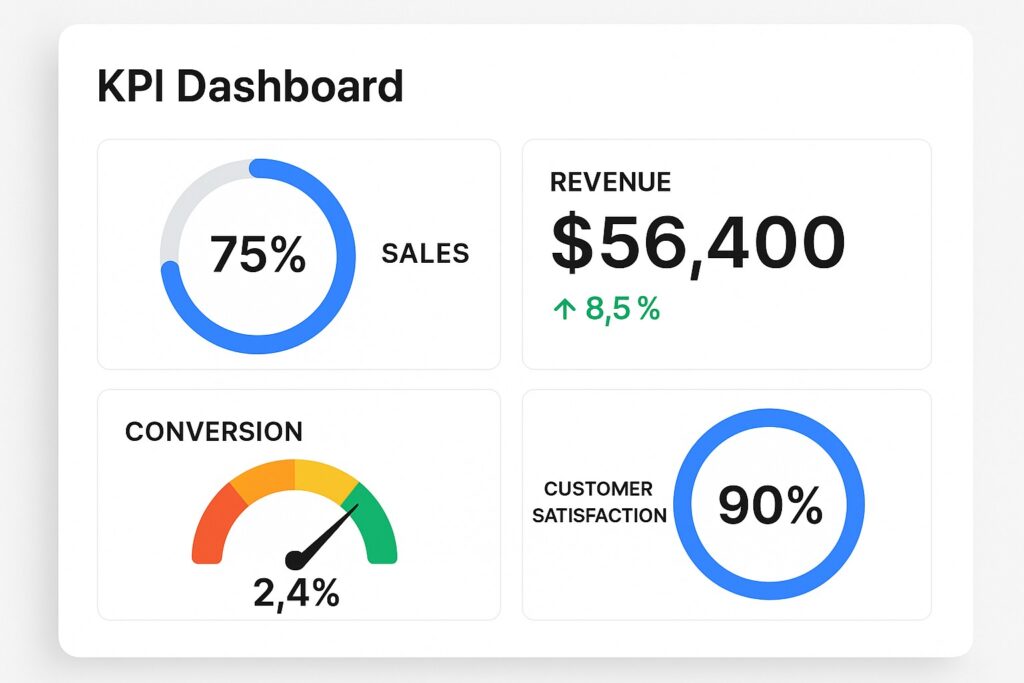

Measuring success: ROI, performance, and maturity

Measuring the impact of predictive analytics requires a clear framework linking model outputs to business outcomes. Common metrics include forecast accuracy, lift in performance relative to a baseline, and the financial return from decisions enabled by predictions. It is essential to define a measurement plan upfront, including the data needed for post-implementation evaluation, the expected time horizon for benefits, and the approach for attributing outcomes to the analytics initiative.
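As a small worked example of these metric types, the sketch below scores a forecast with mean absolute percentage error (MAPE), expresses the gain over a naive baseline as a relative error reduction, and computes ROI as net benefit over cost. All numbers are hypothetical, and attributing a benefit figure to the analytics initiative, usually the hardest part, is assumed to have been done already.

```python
def mape(actual, predicted):
    """Mean absolute percentage error in percent; assumes no actual value is zero."""
    return 100 * sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)

def improvement_over_baseline(model_error, baseline_error):
    """Fraction of the baseline's error removed by the model (positive = better)."""
    return (baseline_error - model_error) / baseline_error

def roi(benefit, cost):
    """Net return per unit of cost over the evaluation period."""
    return (benefit - cost) / cost

# Hypothetical holdout actuals, model forecasts, and a naive baseline.
actual = [100, 200, 400]
model = [110, 190, 400]
baseline = [120, 160, 360]
model_mape, baseline_mape = mape(actual, model), mape(actual, baseline)
print(f"MAPE model {model_mape:.1f}% vs baseline {baseline_mape:.1f}%")
print(f"error reduction {improvement_over_baseline(model_mape, baseline_mape):.0%}")
# Hypothetical attributed annual benefit and total program cost.
print(f"ROI {roi(benefit=250_000, cost=100_000):.0%}")
```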

Beyond quantitative results, many organizations assess process maturity and capability development. This includes the reliability of data pipelines, the predictability of model performance, governance controls, and the degree to which decision-makers routinely incorporate predictions into action. A staged maturity model—ranging from ad hoc experiments to scalable, enterprise-wide deployment—helps leaders track progress, justify investments, and plan for expansion to new use cases and geographies.

Future trends and challenges

Looking ahead, predictive analytics will continue to grow in sophistication and ubiquity. Advances in automated feature generation, model explainability, and hybrid approaches that combine traditional statistical methods with AI-enabled pattern recognition will broaden the set of problems that can be tackled with predictive models. Real-time streaming data and edge computing will enable near-instantaneous predictions for time-critical applications, such as dynamic pricing and equipment monitoring in remote locations.
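For equipment monitoring on a stream of sensor readings, one simple pattern is to compare each new reading with statistics from a short trailing window. The sketch below implements a rolling z-score detector; the window length, the three-standard-deviation threshold, and the vibration readings are hypothetical, and real deployments often need methods that also handle seasonality and gradual wear.

```python
from collections import deque
from statistics import mean, pstdev

class RollingZScoreDetector:
    """Flag a reading more than `threshold` standard deviations away from
    the mean of the previous `window` readings."""

    def __init__(self, window=20, threshold=3.0):
        self.recent = deque(maxlen=window)  # oldest values drop off automatically
        self.threshold = threshold

    def update(self, value):
        """Score `value` against the window, then add it; returns True if anomalous."""
        anomalous = False
        if len(self.recent) == self.recent.maxlen:  # wait until the window is full
            mu, sigma = mean(self.recent), pstdev(self.recent)
            anomalous = sigma > 0 and abs(value - mu) / sigma > self.threshold
        self.recent.append(value)
        return anomalous

# Hypothetical vibration readings: steady operation followed by a spike.
readings = [50.0, 51.0] * 10 + [70.0]
detector = RollingZScoreDetector(window=10, threshold=3.0)
flags = [detector.update(r) for r in readings]
print([i for i, f in enumerate(flags) if f])  # [20]
```

Because each flagged reading is still added to the window, it widens the baseline for the next few readings; excluding flagged values from the window is a common variation.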

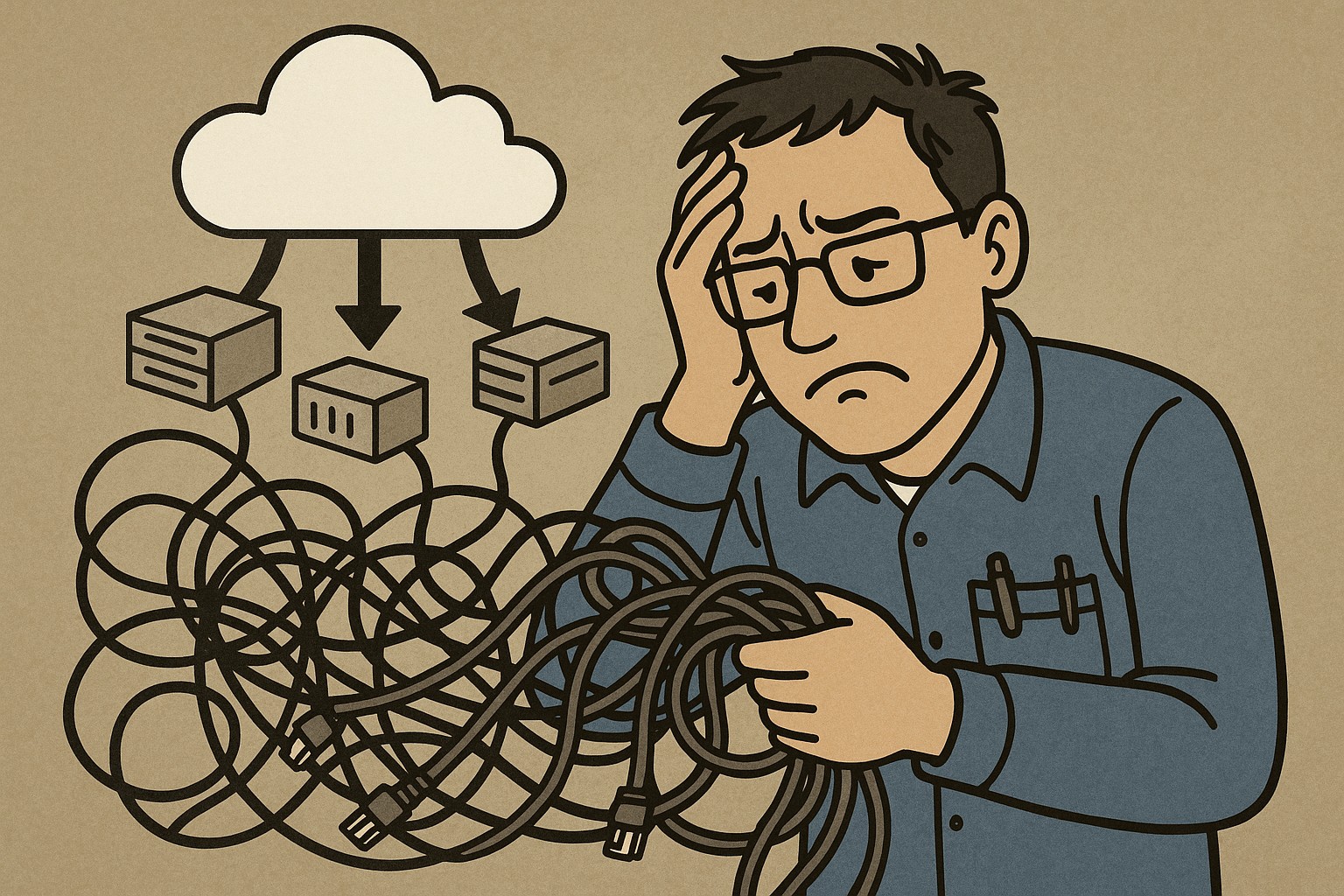

At the same time, organizations will face ongoing challenges around data quality, integration complexity, and the need to balance speed with governance. As models become more capable, the expectation for transparency and accountability increases, pushing teams toward more robust explainability and auditable decision pipelines. Ethical considerations, including bias mitigation and privacy protection, will remain central as predictive analytics expands into more sensitive domains and regions with strict regulatory requirements.

FAQ

What is predictive analytics and why is it valuable?

Predictive analytics uses historical data and statistical methods to forecast future events or behaviors, enabling proactive decision making, optimized operations, and improved customer experiences. Its value comes from turning data into actionable insights that inform planning, risk management, and strategy.

What data do you need for predictive analytics projects?

The core requirement is relevant, high-quality data that captures the factors driving the intended outcome. This typically includes internal sources such as transactional, operational, and customer interaction data, complemented by external indicators when appropriate. Data quality, provenance, and governance are critical for reliable predictions.

How do you measure the ROI of predictive analytics initiatives?

ROI is assessed by linking model-driven decisions to measurable business outcomes, such as revenue gains, cost savings, or improved customer retention. A robust plan includes baseline performance, forecasted improvements, and a methodology to attribute results to the analytics effort over a defined period.

What are common challenges in deploying predictive analytics at scale?

Common challenges include data silos, data quality issues, model drift, and governance complexity. Others include ensuring model explainability, integrating predictions into business workflows, and maintaining privacy and compliance across regions and use cases.

How can organizations ensure ethical and responsible use of predictive analytics?

Organizations should embed bias audits, privacy-by-design practices, and transparent explainability into every stage—from data collection to deployment. Establishing clear governance, stakeholder accountability, and ongoing monitoring helps balance innovation with safety and trust.