Predictive Analytics in Asset Management (Utilities Sector)

Executive overview of predictive analytics in utilities asset management

Utilities face aging assets, rising demand, and capital constraints that challenge reliability and cost efficiency. Predictive analytics provides a data-driven approach to anticipate equipment faults before they disrupt service, enabling teams to shift from reactive to proactive maintenance. By combining real-time sensor data, asset histories, and external signals such as weather or usage patterns, utilities can forecast stress on equipment, schedule checks during low-demand windows, and reduce the probability of cascading failures. This approach supports both grid reliability and customer experience by prioritizing interventions where they will deliver the greatest risk-adjusted value. It also helps executives balance short-term operational needs with long-term capital planning, aligning maintenance strategies with financial and regulatory goals.

The scope spans generation and distribution across critical asset classes—from transformers and switchgear to pumps, turbines, pipelines, and meters. The core idea is to translate streams of data into actionable insights: when a component is likely to fail, what preventive action is most cost-effective, and how to schedule work so that it minimizes disruption while preserving safety and compliance. As models mature, utilities can orchestrate maintenance across fleets, optimize spare parts inventories, and improve outage forecasting, thereby reducing both direct costs and customer impact.

Expected benefits include:

- Reduced unplanned outages and service interruptions

- Optimized maintenance scheduling and inventory planning

- Extended asset life through timely interventions

- Improved safety and regulatory compliance

- Enhanced visibility and risk-based prioritization of work

- Capital expenditure and operating expense optimization

Data foundations and quality for asset analytics

Reliable predictive models start with clean, timely data. Utilities collect diverse data types from field sensors, control systems, and enterprise platforms, and they must manage data quality, lineage, and governance to sustain model performance over time. Without trustworthy data, forecasts degrade, alerts become noise, and decisions can drift away from business objectives. Establishing data freshness targets, validation checks, and robust data pipelines helps ensure that models respond to current conditions and reflect actual equipment behavior.

Key data sources include structured sensor streams, asset registries, maintenance histories, weather and environmental feeds, and incident records. On the governance side, standard metadata, data retention policies, and access controls ensure that data is usable for training, validation, and production scoring. Clear data contracts between operations, IT, and analytics teams reduce handoffs and accelerate deployment, while regular audits guard against drift and ensure compliance with safety and regulatory requirements.

- Sensor telemetry and asset health metrics

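- SCADA/EMS (supervisory control and data acquisition / energy management system) data streams and control logs

- CMMS (computerized maintenance management system) maintenance records and work orders

- GIS (geographic information system) and asset registry data referencing locations, hierarchy, and criticality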

- Weather, load, and environmental data

- Historical failure, repair, and outage records

- Data stewardship, metadata standards, and lineage tracking
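The freshness targets and validation checks described above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the `Reading` type, the asset IDs, the 15-minute freshness target, and the valid temperature range are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class Reading:
    asset_id: str
    timestamp: datetime
    value: Optional[float]  # None models a dropped sample


def check_reading(reading, now, max_age, valid_range):
    """Return a list of data-quality issues for one reading (empty if clean)."""
    issues = []
    if now - reading.timestamp > max_age:
        issues.append("stale")          # violates the freshness target
    if reading.value is None:
        issues.append("missing")
    elif not (valid_range[0] <= reading.value <= valid_range[1]):
        issues.append("out_of_range")   # physically implausible value
    return issues


# Hypothetical transformer temperature readings (degrees C).
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
readings = [
    Reading("TX-001", now - timedelta(minutes=2), 61.5),
    Reading("TX-002", now - timedelta(hours=3), 58.0),
    Reading("TX-003", now - timedelta(minutes=1), None),
    Reading("TX-004", now - timedelta(minutes=1), 412.0),
]

report = {
    r.asset_id: check_reading(
        r, now, max_age=timedelta(minutes=15), valid_range=(-40.0, 150.0)
    )
    for r in readings
}
print(report)
```

In practice, checks like these would typically run at ingestion so that stale or implausible readings are quarantined before they reach training or scoring.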

Models, techniques, and reliability engineering for utilities

Predictive modelling in utilities blends time-series analysis, machine learning, and physics-informed reasoning to forecast failures, estimate remaining useful life (RUL), and quantify risk. Time-series models capture seasonal patterns in demand and stress on assets, while machine learning approaches identify nonlinear relationships in sensor data that precede faults. RUL estimation, survival analysis, and anomaly detection provide a multi-faceted view of asset health that supports different maintenance strategies, ranging from run-to-failure with monitoring to proactive replacement and retrofits. The best programs combine multiple approaches, leveraging domain knowledge about asset physics and operating envelopes to improve interpretability and trust in the results.
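As one simple illustration of anomaly detection on sensor data, the sketch below flags readings that deviate sharply from a trailing window of recent values (a rolling z-score). It is a deliberately basic stand-in for the richer methods mentioned above; the window size, threshold, and vibration values are assumptions for the example.

```python
from statistics import mean, stdev


def rolling_zscore_flags(values, window=5, threshold=3.0):
    """Flag points that deviate from the trailing-window mean by more
    than `threshold` standard deviations."""
    flags = []
    for i, x in enumerate(values):
        history = values[max(0, i - window):i]
        if len(history) < window:
            flags.append(False)  # not enough history to judge yet
            continue
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            flags.append(x != mu)  # flat history: any change is unusual
        else:
            flags.append(abs(x - mu) / sigma > threshold)
    return flags


# Hypothetical pump vibration readings (mm/s) with one spike.
vibration = [2.0, 2.1, 1.9, 2.0, 2.1, 2.0, 1.9, 4.5, 2.0, 2.1]
flags = rolling_zscore_flags(vibration)
anomalous_indices = [i for i, flagged in enumerate(flags) if flagged]
print(anomalous_indices)  # [7]
```

Note that the spike inflates the window's standard deviation for the next few points, which is one reason production systems often prefer robust statistics or model-based detectors.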

Effective deployment also requires governance around interpretability, monitoring, and change management. Models must be retrained as equipment behavior evolves, with dashboards that explain risk drivers to engineers and operators. Integration with existing workflows (CMMS work orders, spare parts inventories, and field crews) ensures that insights translate into timely action. This includes establishing model performance targets, alert thresholds, and a clear process for validating model recommendations before they influence maintenance decisions.

- Supervised learning for fault classification and remaining useful life estimation

- Time-series forecasting (ARIMA, Prophet, LSTM) for load and stress assessments

- Survival analysis for failure risk and time-to-event modelling

- Anomaly detection (unsupervised/semi-supervised) to flag unusual sensor behavior

- Physics-informed and hybrid models for pumps, valves, transformers, and pipelines

- Ensemble methods to improve robustness and reduce overfitting
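To make the survival-analysis item concrete, here is a minimal sketch of the Kaplan-Meier estimator, a standard non-parametric way to estimate the probability that an asset survives past a given age when some assets are still in service (censored). The fleet data is invented for illustration; a real program would more likely use a dedicated survival-analysis library.

```python
def kaplan_meier(durations, observed):
    """Return [(time, survival_probability)] at each distinct failure time.

    durations: years in service at failure or at last inspection
    observed:  True if the asset failed, False if still in service (censored)
    """
    event_times = sorted({t for t, e in zip(durations, observed) if e})
    survival = 1.0
    curve = []
    for t in event_times:
        # Assets still under observation just before time t.
        at_risk = sum(1 for d in durations if d >= t)
        # Assets that failed exactly at time t.
        failures = sum(1 for d, e in zip(durations, observed) if d == t and e)
        survival *= 1.0 - failures / at_risk
        curve.append((t, survival))
    return curve


# Hypothetical fleet of eight transformers (ages in years).
durations = [5, 8, 8, 12, 15, 15, 20, 22]
observed = [True, True, False, True, False, True, False, True]

curve = kaplan_meier(durations, observed)
for t, s in curve:
    print(f"S({t}) = {s:.3f}")
```

Censored assets still count toward the at-risk population up to their last inspection, which is what distinguishes this estimate from a naive failure fraction.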

Operationalizing analytics and maintenance workflows

Bringing predictive insights into daily operations requires integration with CMMS, GIS, and control systems, along with clear escalation paths for maintenance staff. Model outputs are translated into risk scores, recommended maintenance actions, and prioritized work lists that align with safety and regulatory requirements. Dashboards and exception-based alerts help maintenance planners sequence interventions for maximum impact with minimal disruption, while field teams receive actionable guidance and feedback loops to capture outcomes that refine future predictions.
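One common way to turn model outputs into a prioritized work list is to score each asset as failure probability times consequence of failure, then rank. The sketch below assumes that formulation; the `AssetRisk` type, asset IDs, probabilities, and cost figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AssetRisk:
    asset_id: str
    failure_probability: float  # model output for the planning horizon, 0..1
    consequence_cost: float     # estimated cost of failure (illustrative units)

    @property
    def risk_score(self):
        # Expected loss: probability of failure times its consequence.
        return self.failure_probability * self.consequence_cost


def prioritize(assets, top_n):
    """Return the top_n assets ranked by descending risk score."""
    return sorted(assets, key=lambda a: a.risk_score, reverse=True)[:top_n]


assets = [
    AssetRisk("PUMP-07", 0.30, 50_000),
    AssetRisk("TX-112", 0.05, 900_000),
    AssetRisk("VALVE-3", 0.60, 8_000),
    AssetRisk("SWG-21", 0.12, 200_000),
]

work_list = [a.asset_id for a in prioritize(assets, top_n=3)]
print(work_list)  # ['TX-112', 'SWG-21', 'PUMP-07']
```

The example shows why probability alone is a poor ranking key: the valve is the most likely to fail, yet the transformer tops the list because its failure is far more costly.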

Key implementation considerations include data latency, model monitoring, role-based access, and change management. Field crews benefit from mobile workflows, standardized inspection checklists, and streamlined data capture that feeds back into model training. A well-designed deployment also anticipates governance, cyber security, and reliability standards to sustain performance over time, ensuring that analytics remain aligned with safety and environmental requirements as conditions evolve.

| Stage | Data Inputs | Output | Example Use |
|---|---|---|---|
| Data ingestion and feature engineering | Sensor streams, CMMS data, weather | Feature set for modelling | Prepare inputs for a remaining life model |
| Model training and validation | Historical failure labels, maintenance records | Predictive model | Estimate RUL for critical pumps |
| Deployment and monitoring | Live streams, model predictions | Alerts and maintenance recommendations | Trigger a work order for high-risk assets |
| Action and feedback | Actual outcomes, new data | Retraining triggers, improved models | Continuous improvement loop |
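The feedback stage can be sketched as a periodic comparison of alerts against actual outcomes, with retraining triggered when performance falls below target. The precision and recall targets and the asset IDs below are assumptions for illustration, not recommended values.

```python
def alert_precision_recall(alerts, failures):
    """Compare alerted assets with assets that actually failed.

    alerts, failures: sets of asset IDs for the same review period.
    """
    true_positives = len(alerts & failures)
    # With no alerts there are no false alarms, so treat precision as 1.0.
    precision = true_positives / len(alerts) if alerts else 1.0
    # With no failures there was nothing to miss, so treat recall as 1.0.
    recall = true_positives / len(failures) if failures else 1.0
    return precision, recall


def needs_retraining(precision, recall, min_precision=0.5, min_recall=0.7):
    """Trigger retraining when either metric drops below its target."""
    return precision < min_precision or recall < min_recall


# Hypothetical quarterly review: four alerts raised, three real failures.
alerts = {"P1", "P2", "P3", "P4"}
failures = {"P2", "P4", "P9"}

precision, recall = alert_precision_recall(alerts, failures)
retrain = needs_retraining(precision, recall)
print(f"precision={precision:.2f} recall={recall:.2f} retrain={retrain}")
```

Here half the alerts were justified, but one of three failures went undetected, so recall falls below the assumed target and the loop flags the model for retraining.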

ROI, risk management, and implementation roadmap

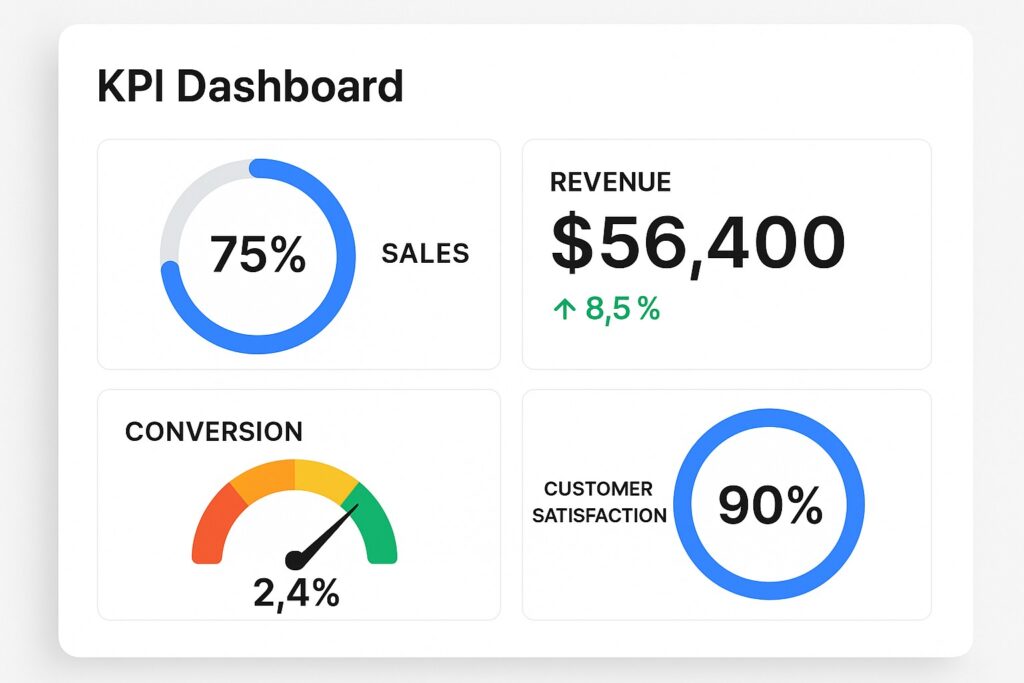

Financial outcomes from predictive analytics come from reducing unplanned outages, lowering maintenance costs, extending asset life, and optimizing capital expenditure. Utilities that implement a disciplined analytics program typically see faster response times, improved reliability indices, and better alignment between risk, front-line operations, and long-term planning. The business case integrates hard savings—such as parts cost reductions, labor efficiency, and avoided outages—with qualitative benefits like improved safety, customer satisfaction, and regulatory confidence, which can influence financing terms and regulatory outcomes. A disciplined approach also helps quantify risk transfer by enabling more accurate exposure analysis and scenario planning.
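The hard-savings side of the business case usually reduces to simple arithmetic: an upfront investment followed by annual net savings, evaluated by payback period and net present value (NPV). The sketch below uses invented cash flows and an assumed 8% discount rate purely to show the calculation.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] occurs today and
    cash_flows[t] at the end of year t."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def payback_year(cash_flows):
    """First year in which cumulative cash flow is non-negative, else None."""
    cumulative = 0.0
    for year, cf in enumerate(cash_flows):
        cumulative += cf
        if cumulative >= 0:
            return year
    return None


# Hypothetical program: 1.0M upfront, then annual net savings from
# avoided outages, parts, and labor.
cash_flows = [-1_000_000, 300_000, 450_000, 500_000, 500_000]

program_npv = npv(0.08, cash_flows)
payback = payback_year(cash_flows)
print(f"NPV at 8%: {program_npv:,.0f}")
print(f"Payback in year: {payback}")
```

Qualitative benefits such as safety and regulatory confidence sit outside this calculation and are typically argued alongside it rather than forced into the cash flows.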

An implementation roadmap usually starts with a data readiness assessment and a pilot focused on a small, high-impact asset class. From there, teams scale to broader portfolios, establishing governance, change management, and model monitoring practices. The roadmap should define milestones, required data stewardship roles, and a framework for ongoing value delivery, including regular reviews of metrics and adjustments to maintenance strategies as more data becomes available. Governance emphasizes accountability, data privacy, and security, while the roadmap emphasizes measurable milestones such as outages avoided, maintenance cost reductions, and improvements in asset health indices.

What are the typical data requirements for predictive asset analytics in utilities?

Typical data requirements include real-time and historical sensor telemetry, asset metadata (type, location, criticality), maintenance history and work orders, environmental and weather signals, and event logs such as outages. Data quality, timeliness, and coverage across critical assets are essential; models must be trained on diverse scenarios to avoid bias and ensure reliable predictions in operation. Organizations often start with a prioritized subset of assets to establish data pipelines, then expand coverage as data governance and pipeline reliability mature.

How is predictive analytics integrated with existing CMMS and SCADA systems?

Integration is usually achieved through data pipelines and APIs that feed model outputs back into CMMS for work order creation or prioritization. SCADA/EMS data provides live context for predictions, while dashboards present risk scores to operators. Organizations often run models in a staging environment before production, with defined change controls and operator training to ensure smooth adoption. Successful integration requires governance around data ownership, versioning, and rollback plans to mitigate disruption during deployment.

What is the typical ROI timeline for utilities adopting predictive analytics?

ROI timelines vary by asset class and scope, but early pilots typically show measurable gains within 12 to 24 months through targeted maintenance, reduced outages, and more efficient spare parts use. Larger portfolios can realize broader financial benefits over 2 to 5 years as models mature, data coverage expands, and maintenance planning becomes increasingly predictive rather than reactive. Real-world ROI is influenced by data quality, asset criticality, organizational culture, and the ability to scale analytics across the enterprise.

How do utilities handle data governance and security when deploying predictive models?

Data governance and security involve clear data ownership, access controls, encryption in transit and at rest, audit trails, and compliance with relevant regulations. Many programs use sandbox environments for testing, strict vendor risk management, and periodic reviews of data sharing agreements to protect sensitive information while enabling analytics. A mature program couples technical safeguards with policy alignment and ongoing staff training to sustain secure, compliant analytics at scale.

What are common challenges and how can they be mitigated?

Common challenges include data quality gaps, data integration complexity, model drift, and resistance to workflow change. Mitigation strategies focus on establishing robust data governance, cross-functional sponsorship, phased pilots, comprehensive validation, and ongoing education for operators and maintenance personnel to ensure sustained adoption. Early wins, transparent communication of results, and alignment with regulatory and safety objectives help build trust and sustain investment in analytics initiatives.