Predictive Analytics in Manufacturing: Enhancing Production Efficiency

Overview: Predictive analytics in manufacturing

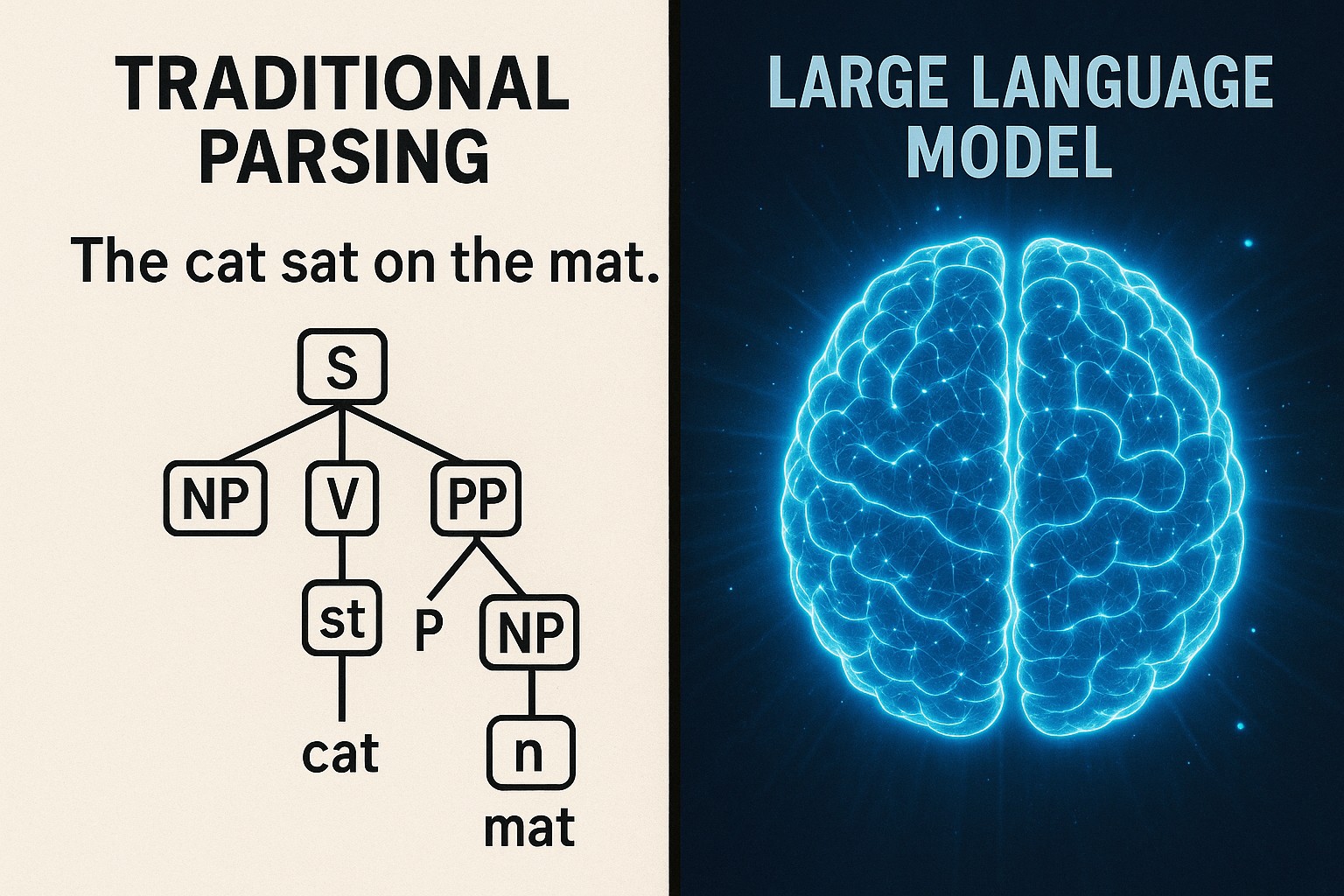

Predictive analytics in manufacturing refers to the systematic use of statistical models, machine learning, and data science techniques to forecast future conditions, events, or outcomes based on historical and real-time data. In practice, manufacturers collect vast streams of data from sensors embedded in machines, programmable logic controllers, maintenance records, quality inspections, ERP and MES systems, supplier portals, and even external signals such as weather or market indicators. By aggregating these sources, teams can identify patterns that precede equipment failures, production slowdowns, or quality deviations, and translate those patterns into actionable decisions that improve reliability and efficiency.

Adopting predictive analytics is not about replacing human expertise; it is about augmenting it with evidence-based insights. Mature programs connect data governance, data quality checks, and model monitoring to ensure predictions stay reliable over time. They also embrace digital twin concepts (virtual representations of equipment or entire production lines) that enable scenario analysis without interrupting operations. When deployed at scale, predictive analytics can improve overall equipment effectiveness (OEE), shorten maintenance windows, optimize energy use, and reduce inventory carrying costs by aligning supply with anticipated demand and capacity constraints.

Key use cases of predictive analytics in manufacturing

Across manufacturing, several use cases consistently deliver value when paired with robust data pipelines and domain knowledge. Organizations typically begin with the most measurable outcomes—reliability, throughput, and cost—and then expand to more strategic areas such as supply chain resilience and sustainability. The following use cases represent a practical starting point for many plants seeking to translate data into measurable improvements.

- Predictive maintenance and asset reliability

- Demand forecasting and inventory optimization

- Production scheduling and constraint management

- Quality analytics and process optimization

- Energy management and sustainability improvements

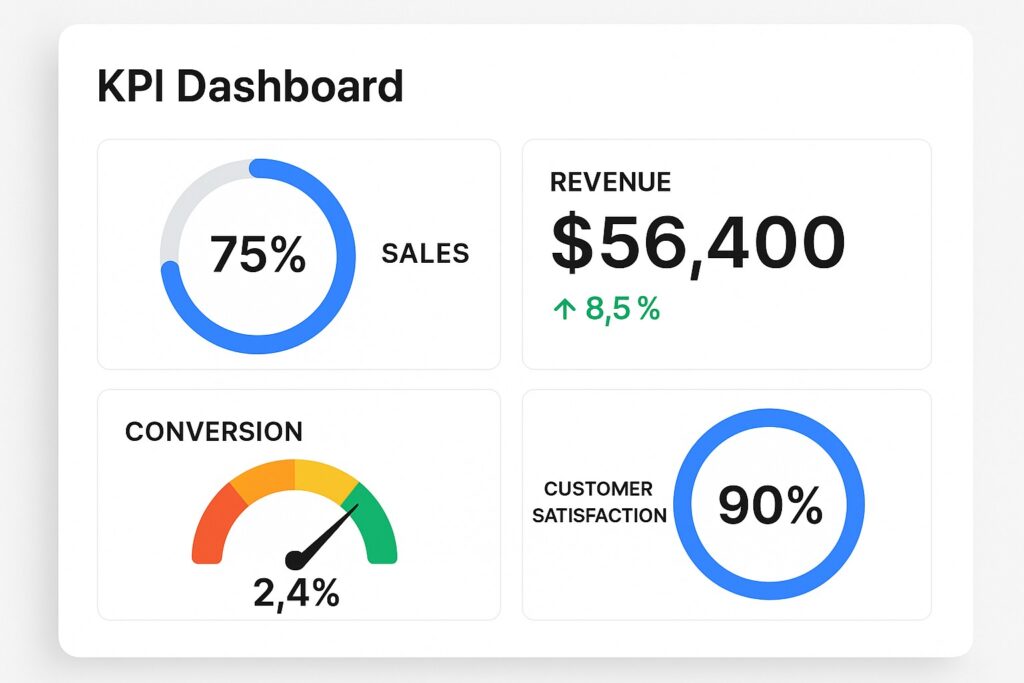

Each use case relies on a combination of data types, from high-frequency sensor streams to batch quality records, and requires governance to ensure data lineage and model transparency. Early pilots often demonstrate ROI through reduced unplanned downtime, shorter changeovers, and higher first-pass yields, which can be quantified with KPIs such as mean time to repair (MTTR), OEE, and days of inventory on hand. As organizations mature, these initiatives can converge to deliver end-to-end improvements across the manufacturing value chain.
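To make the KPIs concrete, here is a minimal sketch of how OEE and MTTR might be computed from a per-shift summary. It uses the standard decomposition of OEE into availability, performance, and quality; the `ShiftRecord` fields and the sample numbers are hypothetical and would need to be mapped to whatever your MES actually records.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ShiftRecord:
    """Hypothetical per-shift summary; field names are illustrative only."""
    planned_minutes: float      # scheduled production time
    downtime_minutes: float     # unplanned stops during the shift
    ideal_cycle_seconds: float  # ideal time to produce one unit
    total_units: int            # all units produced, good or bad
    good_units: int             # units that passed inspection first time


def oee(rec: ShiftRecord) -> dict:
    """Return availability, performance, quality, and their product (OEE)."""
    run_minutes = rec.planned_minutes - rec.downtime_minutes
    availability = run_minutes / rec.planned_minutes
    performance = (rec.ideal_cycle_seconds * rec.total_units) / (run_minutes * 60)
    quality = rec.good_units / rec.total_units
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


def mttr(repair_minutes: Sequence[float]) -> float:
    """Mean time to repair: average duration of the recorded repair events."""
    return sum(repair_minutes) / len(repair_minutes)


# Made-up example shift: 8 hours planned, 1 hour of unplanned downtime.
shift = ShiftRecord(planned_minutes=480, downtime_minutes=60,
                    ideal_cycle_seconds=30, total_units=756, good_units=720)
result = oee(shift)
print({name: round(value, 3) for name, value in result.items()})
print("MTTR (min):", mttr([30, 45, 15]))
```

Tracking the three OEE factors separately, rather than only the product, makes it easier to see whether a pilot moved availability, speed, or yield.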

Predictive maintenance: reducing downtime and extending asset life

Predictive maintenance uses time-series data from equipment sensors, vibration analysis, thermal imaging, lubricant analyses, and historical maintenance records to forecast when a component is likely to fail or degrade below an acceptable threshold. Rather than performing maintenance on a fixed calendar, teams schedule interventions just ahead of predicted failure, keeping machines available for production and reducing the risk of catastrophic outages. The results can include longer asset life, lower repair costs, and fewer emergency shutdowns that disrupt delivery commitments.
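As a rough illustration of forecasting when a component will cross an acceptable threshold, the sketch below fits a straight line to a degradation signal and extrapolates it. This is a deliberately simple stand-in, not a recommended production model: real degradation is often nonlinear, and the readings, threshold, and function names here are all hypothetical.

```python
from typing import Optional, Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_until_threshold(hours: Sequence[float],
                          readings: Sequence[float],
                          threshold: float) -> Optional[float]:
    """Estimate operating hours left until the fitted trend reaches the threshold.

    Returns None when the trend is flat or improving, and 0.0 when the
    fitted line has already reached the threshold.
    """
    slope, intercept = fit_line(hours, readings)
    if slope <= 0:
        return None
    crossing_hour = (threshold - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])


# Hypothetical vibration readings (mm/s RMS), one per 24 operating hours.
hours = [0, 24, 48, 72, 96]
vibration = [2.0, 2.5, 3.0, 3.5, 4.0]
remaining = hours_until_threshold(hours, vibration, threshold=7.0)
print("Estimated hours until threshold:", remaining)
```

A planner could use an estimate like this to slot the intervention into the next scheduled stop that falls before the predicted crossing.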

Successful programs implement a closed-loop feedback process: data collection, model retraining as equipment behavior evolves, and maintenance decisions aligned with production priorities. They also distinguish between true predictive signals and spurious correlations by validating models with out-of-sample data and periodically revalidating assumptions. In practice, maintenance teams work with operations to calibrate alerts so technicians receive timely, actionable recommendations without creating alert fatigue. The net effect is a leaner maintenance cycle that targets what matters most to uptime and quality.
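One way to combine out-of-sample validation with alert calibration is to pick the alert threshold on older data and then check it on newer data the threshold never saw. The sketch below assumes a model already produces a risk score per inspection window and that each window has been labeled with whether a failure followed; the scores, labels, 70/30 split, and precision target are hypothetical.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall when alerting on scores at or above the threshold."""
    alerts = [s >= threshold for s in scores]
    tp = sum(1 for a, y in zip(alerts, labels) if a and y)
    fp = sum(1 for a, y in zip(alerts, labels) if a and not y)
    fn = sum(1 for a, y in zip(alerts, labels) if not a and y)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def pick_threshold(scores, labels, min_precision):
    """Lowest candidate threshold that meets the precision target.

    Recall can only fall as the threshold rises, so the lowest qualifying
    threshold keeps as many true failures as possible while limiting
    false alarms (a proxy for alert fatigue).
    """
    for candidate in sorted(set(scores)):
        precision, _ = precision_recall(scores, labels, candidate)
        if precision >= min_precision:
            return candidate
    return None


# Hypothetical, time-ordered risk scores and failure labels.
scores = [0.1, 0.2, 0.8, 0.3, 0.9, 0.4, 0.7, 0.2, 0.75, 0.5]
labels = [False, False, True, False, True, False, True, False, True, False]

split = int(len(scores) * 0.7)  # older 70% for calibration, newer 30% held out
threshold = pick_threshold(scores[:split], labels[:split], min_precision=0.9)
holdout = precision_recall(scores[split:], labels[split:], threshold)
print("threshold:", threshold, "holdout precision/recall:", holdout)
```

Splitting by time rather than at random matters here: shuffling would let the calibration step see windows from the future of the holdout period.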

Optimizing production schedules and throughput

Analytics-driven scheduling considers machine availability, changeover times, material constraints, labor capacity, and demand signals to produce feasible, optimized schedules. When integrated with digital twins of the line or plant, planners can simulate what-if scenarios—such as introducing a new production run, re-sequencing steps, or adding a parallel line—and quantify expected throughput, lead times, and inventory impact before any change is made on the floor. The outcome is tighter adherence to delivery windows and improved utilization of expensive assets.

- Capture and integrate operational data from MES, ERP, and shop-floor sensors

- Develop, train, and validate predictive models for downtime, cycle time, and queue lengths

- Run scenario simulations to compare schedules under constraints and risk factors

- Automate decision rules or optimization engines to adjust sequences in near real time

- Monitor actual results, retrain models, and continuously refine scheduling policies

Effective implementation requires governance over data timeliness, system interfaces, and stakeholder alignment. ROI is typically realized through higher on-time delivery, reduced work-in-process, and less overtime during peak periods, as well as the ability to respond swiftly to demand changes without sacrificing performance. By embedding analytics into the planning loop, operations teams gain a proactive rather than reactive posture, enabling more predictable production and better customer service levels.
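A toy version of the what-if comparison described in this section: given run times and sequence-dependent changeover times, enumerate the possible job orders on one line and compare total time. The product families and minutes are invented for illustration, and brute-force enumeration only works for a handful of jobs; a real plant would more likely hand this to an optimization engine.

```python
from itertools import permutations

# Hypothetical changeover minutes when switching from one family to another.
CHANGEOVER = {
    ("A", "B"): 30, ("A", "C"): 10,
    ("B", "A"): 20, ("B", "C"): 15,
    ("C", "A"): 25, ("C", "B"): 5,
}
# Hypothetical run minutes per job.
RUN_MINUTES = {"A": 120, "B": 90, "C": 60}


def total_minutes(sequence):
    """Run time plus changeover time for jobs processed in the given order."""
    run = sum(RUN_MINUTES[job] for job in sequence)
    change = sum(CHANGEOVER[(a, b)] for a, b in zip(sequence, sequence[1:]))
    return run + change


def best_sequence(jobs):
    """Exhaustively search all orders and return the one with the least total time."""
    return min(permutations(jobs), key=total_minutes)


baseline = ("A", "B", "C")
best = best_sequence(RUN_MINUTES)
print("baseline:", baseline, total_minutes(baseline), "min")
print("best:    ", best, total_minutes(best), "min")
```

With these made-up numbers the re-sequenced schedule saves 30 minutes of changeover, which is the kind of difference a planner would want quantified before touching the floor.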

Data and governance for manufacturing analytics

Analytics in manufacturing is only as good as the data that feeds it. Data quality, lineage, and access controls determine whether insights are trustworthy across the enterprise. Data often resides in multiple silos (instrumentation databases, maintenance histories, quality systems, and external supplier data) and requires careful integration, standardization, and metadata management. Without clear governance, models may degrade as data evolves or as personnel change roles, leading to unreliable predictions and poor decisions.

- Data quality and cleansing standards

- Data lineage and impact analysis

- Access control and data privacy policies

- Retention, archival, and discoverability practices

Establishing a governance framework is a collaborative effort that spans IT, data science, operations, and finance. It includes defining responsibilities, setting thresholds for model risk, and creating feedback loops into the data supply chain to address gaps and errors. A practical approach often starts with a small, well-scoped data domain, then expands to enterprise-wide data with repeatable processes for ingestion, validation, and monitoring.
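For a small, well-scoped data domain, the validation step can start as a handful of explicit rules run at ingestion. The sketch below checks sensor rows for missing identifiers, missing or out-of-range values, and timestamps that fail to advance per asset. The row keys (`asset_id`, `timestamp`, `value`), the valid range, and the sample rows are assumptions for illustration, not a standard schema.

```python
from datetime import datetime


def check_readings(rows, valid_range):
    """Return a list of (row_index, issue) tuples for rows that break a rule."""
    low, high = valid_range
    issues = []
    last_seen = {}  # latest timestamp observed per asset
    for i, row in enumerate(rows):
        asset = row.get("asset_id")
        ts = row.get("timestamp")
        value = row.get("value")
        if not asset:
            issues.append((i, "missing asset_id"))
            continue  # cannot apply per-asset checks without an identifier
        if value is None:
            issues.append((i, "missing value"))
        elif not (low <= value <= high):
            issues.append((i, "value out of range"))
        if ts is None:
            issues.append((i, "missing timestamp"))
        else:
            if asset in last_seen and ts <= last_seen[asset]:
                issues.append((i, "timestamp not increasing"))
            last_seen[asset] = max(ts, last_seen.get(asset, ts))
    return issues


# Hypothetical temperature readings (degrees C) from one pump.
rows = [
    {"asset_id": "pump-1", "timestamp": datetime(2024, 1, 1, 8, 0), "value": 41.5},
    {"asset_id": "pump-1", "timestamp": datetime(2024, 1, 1, 8, 1), "value": None},
    {"asset_id": "pump-1", "timestamp": datetime(2024, 1, 1, 8, 1), "value": 42.0},
    {"asset_id": "", "timestamp": datetime(2024, 1, 1, 8, 2), "value": 40.0},
    {"asset_id": "pump-1", "timestamp": datetime(2024, 1, 1, 8, 3), "value": 250.0},
]
issues = check_readings(rows, valid_range=(0.0, 120.0))
for index, problem in issues:
    print(f"row {index}: {problem}")
```

Counting issues per rule over time gives a simple feed for a governance dashboard and shows which upstream source needs attention.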

Data governance is not a one-off project; it is a capability that becomes visible in every decision, from a daily machine alert to a board-level strategic plan.

To support scalable analytics, teams typically implement a layered architecture: a data lake or warehouse to store raw and curated data, ETL/ELT processes to harmonize feeds, and analytic services or notebooks that run models and generate dashboards. Operational dashboards provide near real-time visibility, while governance dashboards monitor data quality and model performance over time. With these practices in place, manufacturers can pursue continuous improvement with auditable, reproducible results that stakeholders across the organization can trust.

FAQ

How long does it typically take to implement predictive analytics in a manufacturing environment?

Implementation timelines vary based on scope, data readiness, and organizational alignment. A focused pilot aimed at a single asset or line can often be established within 6 to 12 weeks, including data connection, model selection, and initial validation. Expanding to multiple assets, integrating with planning systems, and building automated decision capabilities may take 6 to 12 months or longer. A phased approach that starts with a measurable objective, uses clear success criteria, and includes governance from the outset tends to reduce risk and accelerate value realization.

What data is essential to start a predictive analytics project in manufacturing?

At minimum, you should have time-stamped data from equipment sensors (vibration, temperature, pressure, energy use), maintenance history (faults, repairs, parts, intervals), and production data (throughput, downtime, quality measurements, scrap rates). Supplementary data such as material specifications, supplier performance, and environmental conditions can enhance model accuracy. The key is to establish data quality, consistent identifiers, and reliable data lineage so models can be validated and trusted over time.

How do we measure ROI from predictive analytics initiatives?

ROI can be quantified through a combination of hard and soft benefits. Common metrics include reductions in unplanned downtime and mean time to repair (MTTR), improvements in OEE, shorter changeover times, and lower energy consumption per unit produced. Financial indicators such as payback period, net present value, and internal rate of return are interpreted alongside qualitative gains like improved schedule reliability, better customer service, and reduced risk of regulatory non-compliance. A rigorous evaluation plan should compare performance before and after analytics deployment while controlling for confounding factors.
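The financial indicators mentioned above are straightforward to compute once annual net benefits have been estimated. The following sketch shows payback period and net present value for an invented cash-flow series; the investment, savings, and 10% discount rate are placeholders, not benchmarks.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] is the upfront flow at period 0."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def payback_period(cash_flows):
    """First period in which cumulative cash flow is non-negative, else None."""
    cumulative = 0.0
    for period, cf in enumerate(cash_flows):
        cumulative += cf
        if cumulative >= 0:
            return period
    return None


# Hypothetical: 200k upfront, then estimated yearly net savings.
flows = [-200_000, 80_000, 90_000, 100_000]
print("payback (years):", payback_period(flows))
print("NPV at 10%:", round(npv(0.10, flows), 2))
```

Because the savings are estimates, it is worth rerunning the calculation with pessimistic and optimistic figures rather than reporting a single number.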

What are common challenges when deploying predictive analytics in manufacturing, and how can we overcome them?

Typical challenges include data silos and poor data quality, resistance to change among staff, and integration with existing planning and ERP systems. To overcome these issues, start with a cross-functional governance team, implement data quality checks early, and choose use cases with clear, measurable outcomes. Invest in change management by training users, creating user-friendly dashboards, and aligning incentives with data-driven decision making. Also, design models with interpretability in mind and establish ongoing monitoring to detect degradation and trigger retraining when needed.
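Ongoing monitoring can begin with something as plain as comparing recent prediction error against the error measured at validation time. In the sketch below, the baseline error, the tolerance factor of 1.5, and the sample values are all hypothetical; the right trigger depends on how costly a stale model is for the use case.

```python
def mean_abs_error(actual, predicted):
    """Average absolute difference between observed and predicted values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def needs_retraining(baseline_mae, recent_actual, recent_predicted, tolerance=1.5):
    """Flag retraining when recent error exceeds the baseline by the tolerance factor.

    Returns (flag, recent_mae) so the recent error can also be logged.
    """
    recent_mae = mean_abs_error(recent_actual, recent_predicted)
    return recent_mae > tolerance * baseline_mae, recent_mae


# Hypothetical: error at validation time versus the latest four predictions.
baseline_mae = 2.0
recent_actual = [10, 12, 15, 20]
recent_predicted = [11, 10, 11, 14]
flag, recent_mae = needs_retraining(baseline_mae, recent_actual, recent_predicted)
print("recent MAE:", recent_mae, "retrain:", flag)
```

Logging the recent error alongside the flag gives stakeholders a trend to review instead of a bare alarm.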