SDLC Checklist: Ensuring Successful Software Projects

Requirements Gathering and Planning

A solid SDLC begins with a clear understanding of business goals and a shared vision of success. In practice, this means engaging stakeholders early, documenting objectives, and translating them into measurable requirements that can guide design, development, and testing. The planning phase should also capture constraints on budget, timelines, regulatory obligations, and available resources, and establish governance mechanisms that keep the project aligned with strategy.

- Stakeholder interviews and workshops to uncover business goals, user needs, and success criteria.

- User stories, use cases, and clearly defined acceptance criteria that map to value delivery.

- Baseline scope definition with explicit boundaries, milestones, and success metrics.

- Regulatory, security, and compliance considerations that must be addressed from day one.

- Risks, dependencies, and environmental constraints that could impact schedule or scope.

Capturing these elements in a living requirements artifact creates traceability from business value to technical deliverables. It supports prioritization, risk management, and change control, helping teams avoid scope creep and ensuring alignment across product management, design, security, and operations. Additionally, a robust requirements baseline enables more accurate planning of resources, testing needs, and deployment windows, reducing friction later in the project lifecycle.
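As a minimal sketch of what traceability can look like in practice, the snippet below models a requirements artifact as plain records and flags entries that cannot yet be traced to a test. The `Requirement` schema, the `untraced` helper, and the sample IDs are hypothetical and chosen for illustration; a real project would more likely keep this data in its issue tracker or requirements tool.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """One entry in a living requirements artifact (hypothetical schema)."""
    req_id: str
    business_goal: str
    acceptance_criteria: list[str]
    test_ids: list[str] = field(default_factory=list)  # tests that verify it


def untraced(requirements: list[Requirement]) -> list[str]:
    """Return IDs of requirements lacking acceptance criteria or a linked test."""
    return [
        r.req_id
        for r in requirements
        if not r.acceptance_criteria or not r.test_ids
    ]


# Sample data, invented for this example.
requirements = [
    Requirement(
        "REQ-1",
        "Reduce checkout abandonment",
        ["Checkout completes in three steps or fewer"],
        ["T-101", "T-102"],
    ),
    Requirement(
        "REQ-2",
        "Meet data-retention policy",
        ["Records older than the retention period are purged"],
    ),
]

print(untraced(requirements))  # ['REQ-2']
```

A check like this could run at each milestone so that gaps between business goals and verification surface before they affect scope or schedule.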

Design and Architecture Validation

Design and architecture activities translate the gathered requirements into a scalable, maintainable blueprint. Early architectural thinking helps identify potential bottlenecks, integration complexities, and non-functional demands such as performance, reliability, and security. A disciplined approach to design reviews, modeling, and stakeholder sign-off creates a foundation that reduces rework as implementation progresses.

- Define architectural goals that align with business outcomes and measurable quality attributes.

- Select suitable software architecture patterns and technology stacks that balance flexibility, performance, and maintainability.

- Model interfaces, data flows, and integration points using lightweight diagrams to facilitate understanding across teams.

- Assess non-functional requirements, including scalability, resilience, security, observability, and compliance constraints.

- Plan iterative design reviews and risk mitigation activities, ensuring decisions are well-documented and traceable.

In addition to formal reviews, maintain a concise design runbook and an accessible decision log so new team members can quickly grasp the rationale behind major choices. Emphasize principles such as modularity, separation of concerns, and clear ownership to enable efficient future evolution of the system.

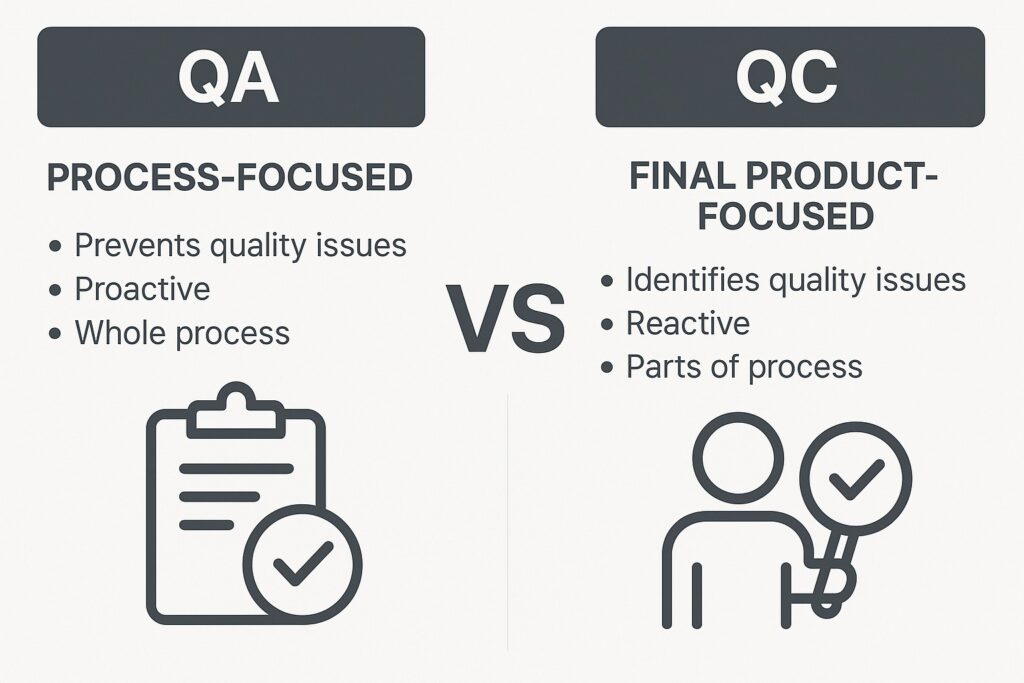

Implementation, Testing, and Quality Assurance

With a solid design in place, the implementation phase focuses on translating architecture into working software while upholding quality standards. Emphasize consistent coding practices, maintainable codebases, and robust version control. Automated pipelines, code reviews, and lightweight governance help ensure that changes are reliable, auditable, and traceable through each stage of delivery.

- Adopt and enforce coding standards, perform regular code reviews, and encourage pair programming where appropriate.

- Write unit tests and, where suitable, practice test-driven development to validate individual components and logic early.

- Run continuous integration with automated build, linting, and test execution to catch defects quickly.

- Cover critical user journeys and cross-system interactions with integration and end-to-end tests.

- Track quality metrics and defects, and define a clear process for triaging and prioritizing remediation efforts.

Quality assurance should cover both functional requirements and non-functional targets such as throughput, latency, security posture, and reliability. A risk-based testing approach helps allocate limited testing resources to the areas with the greatest potential impact, while automation and data-driven testing provide continuous feedback to developers and product owners.
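One common way to make risk-based testing concrete is to score each area as likelihood times impact and spend the available test effort on the highest scores first. The sketch below assumes a 1 to 5 scale for both factors and a fixed number of test slots; the `RiskArea` type, the scale, and the sample areas are illustrative assumptions, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskArea:
    """A part of the system with the team's own risk estimates (assumed 1-5 scale)."""
    name: str
    likelihood: int  # 1 = failure is rare, 5 = failure is frequent
    impact: int      # 1 = minor consequence, 5 = severe consequence

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def prioritize(areas: list[RiskArea], budget: int) -> list[RiskArea]:
    """Return the `budget` highest-risk areas, highest first."""
    ranked = sorted(areas, key=lambda a: a.risk, reverse=True)
    return ranked[:budget]


# Sample estimates, invented for this example.
areas = [
    RiskArea("payment flow", likelihood=3, impact=5),   # risk 15
    RiskArea("profile page", likelihood=2, impact=2),   # risk 4
    RiskArea("login", likelihood=4, impact=4),          # risk 16
    RiskArea("report export", likelihood=2, impact=3),  # risk 6
]

print([a.name for a in prioritize(areas, budget=2)])  # ['login', 'payment flow']
```

The scores are only as good as the estimates behind them, so it helps to revisit them after incidents or significant scope changes.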

Deployment, Monitoring, and Maintenance

Deployment planning and operational readiness are essential to delivering value without disrupting existing services. This phase includes release planning, environment provisioning, and establishing processes for change control, rollback, and incident response. A well-defined deployment strategy minimizes risk and enables rapid recovery in the event of issues post-release.
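The rollback part of a deployment strategy can be sketched as a small control loop: deploy the new version, probe its health a few times, and redeploy the previous version on the first failed probe. The `release` function and its `deploy` and `healthy` callables below are hypothetical stand-ins for whatever deployment tooling and health endpoint a team actually uses.

```python
from typing import Callable


def release(
    deploy: Callable[[str], None],
    healthy: Callable[[], bool],
    new_version: str,
    previous_version: str,
    checks: int = 3,
) -> str:
    """Deploy `new_version` and roll back if any post-release health check fails.

    Returns the version left running. A real rollout would normally wait
    between probes; the delay is omitted to keep the sketch short.
    """
    deploy(new_version)
    for _ in range(checks):
        if not healthy():
            deploy(previous_version)  # rollback path
            return previous_version
    return new_version


# Stubs standing in for real tooling: record deployments, fail the second probe.
deployed: list[str] = []
probe_results = iter([True, False, True])

running = release(deployed.append, lambda: next(probe_results), "2.0.0", "1.9.3")
print(running, deployed)  # 1.9.3 ['2.0.0', '1.9.3']
```

Passing the deployment and health-check steps in as callables keeps the rollback logic testable without touching a real environment.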

To sustain performance and reliability after go-live, implement comprehensive monitoring, alerting, and runbooks for common incidents. Establish maintenance windows, deprecation timelines, and knowledge transfer procedures to operations teams. Documentation should remain current, with clear owners and escalation paths to ensure ongoing delivery is predictable and sustainable.

FAQ

What is the main purpose of an SDLC checklist?

The main purpose of an SDLC checklist is to ensure that all critical activities across the software lifecycle are considered, planned, and executed consistently. It serves as a reminder of governance, quality, compliance, and risk management practices, helping teams align on objectives, reduce rework, and improve predictability in delivery timelines and outcomes.

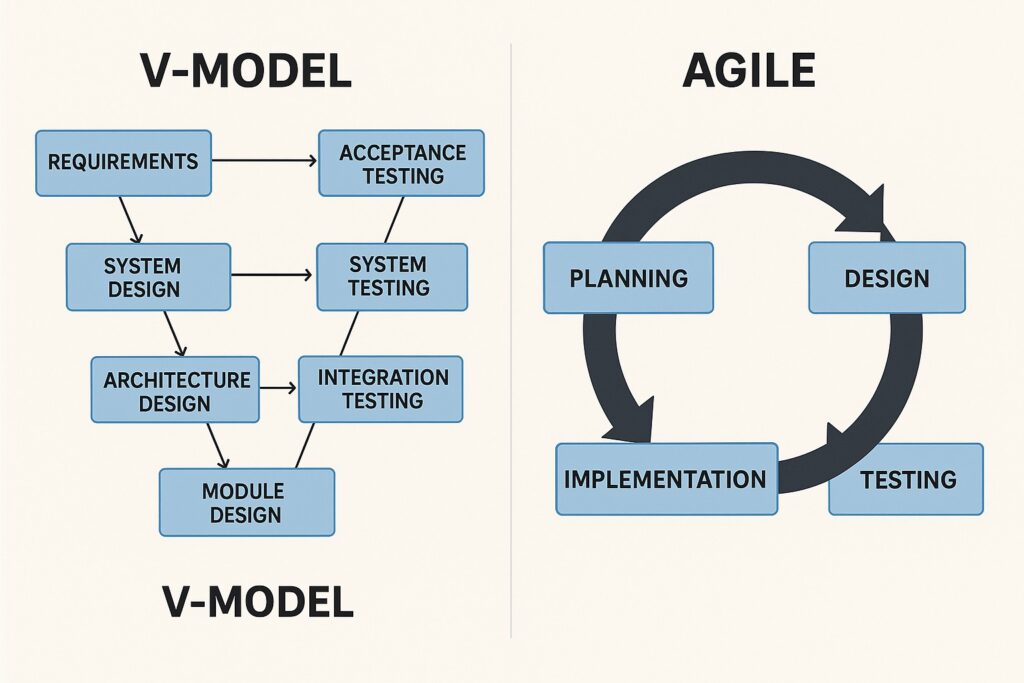

How can I tailor the checklist to accommodate agile versus traditional projects?

Tailoring the checklist means shifting its cadence and emphasis while keeping governance and risk controls in place. In agile projects, prioritize ongoing backlog refinement, rapid prototyping, frequent demonstrations, and automated testing within sprints. For traditional approaches, focus on upfront requirement stability, phase-gate reviews, more formal change control, and longer planning horizons. The core principles of traceability, quality, and risk awareness remain constant; only the cadence and emphasis adapt to the chosen methodology.

Who should own the checklist within a project?

Typically, the project or program manager coordinates the checklist with active participation from product owners, solution architects, and quality assurance leads. In larger organizations, a governance board or SDLC office may oversee the standard, while individual teams tailor it to their domain. The key is clear ownership, frequent updates, and shared accountability for adherence and improvement.

How often should the checklist be reviewed and updated?

Reviews and updates should occur at major milestones, prior to each release, and after significant incidents or scope changes. A lightweight cadence, such as quarterly or at sprint boundaries for agile teams, can help keep the checklist relevant to evolving technologies, regulatory landscapes, and business priorities. Treat continuous improvement as the baseline, and reflect every change in a living document accessible to the entire team.

What metrics should accompany the checklist to measure success?

Metrics should cover delivery predictability (variance between planned and actual delivery), defect density and escape rate, test coverage, deployment success rate, mean time to recover (MTTR), and system reliability indicators. Additionally, track alignment with business value, such as feature uptake, user satisfaction, and achievement of defined acceptance criteria. A balanced scorecard approach helps leadership assess both process maturity and business impact.
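Several of these metrics reduce to simple arithmetic once the underlying counts and timestamps are available. The sketch below shows one plausible set of definitions; the function names, the exact formulas (for example, escape rate as production defects over all known defects), and the sample figures are assumptions for illustration, and teams often define these metrics slightly differently.

```python
from datetime import datetime, timedelta


def schedule_variance(planned_days: float, actual_days: float) -> float:
    """Relative gap between planned and actual duration (0.25 means 25% over)."""
    return (actual_days - planned_days) / planned_days


def defect_escape_rate(found_in_production: int, found_before_release: int) -> float:
    """Share of all known defects that were found only after release."""
    total = found_in_production + found_before_release
    return found_in_production / total if total else 0.0


def deployment_success_rate(successful: int, total: int) -> float:
    """Fraction of deployments that completed without rollback or hotfix."""
    return successful / total if total else 0.0


def mttr(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean time to recover over (detected, resolved) timestamp pairs."""
    if not incidents:
        return timedelta(0)
    total = sum(
        (resolved - detected for detected, resolved in incidents),
        timedelta(0),
    )
    return total / len(incidents)


# Sample figures, invented for this example.
incidents = [
    (datetime(2024, 1, 5, 10, 0), datetime(2024, 1, 5, 10, 30)),   # 30 minutes
    (datetime(2024, 2, 10, 14, 0), datetime(2024, 2, 10, 15, 30)),  # 90 minutes
]

print(schedule_variance(planned_days=40, actual_days=50))  # 0.25
print(defect_escape_rate(found_in_production=5, found_before_release=45))  # 0.1
print(deployment_success_rate(successful=19, total=20))  # 0.95
print(mttr(incidents))  # 1:00:00
```

Whatever definitions a team settles on, recording them alongside the checklist keeps the numbers comparable from one release to the next.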

Can the checklist help with regulatory and security compliance?

Yes. A well-designed checklist explicitly includes compliance considerations, maps requirements to controls, and documents evidence trails. Integrating security and privacy review steps, threat modeling, and vendor risk assessments into the checklist helps ensure that regulatory obligations are addressed proactively rather than reactively, reducing the likelihood of expensive remediation late in the lifecycle.