
Transformer Architecture and Models: From BERT to Beyond

Introduction to Transformer architecture

The transformer architecture, introduced in 2017, marked a foundational shift in natural language processing: it showed that attention mechanisms alone can model dependencies between tokens without sequential processing. This design removes the step-by-step bottleneck of recurrent neural networks, enabling highly parallel training and more direct modeling of long-range dependencies. The original transformer uses an encoder-decoder structure, in which the encoder builds contextual representations of the input sequence and the decoder generates outputs conditioned on those representations. The architecture relies on multi-head self-attention and position-wise feed-forward networks, with residual connections and layer normalization to stabilize training and improve gradient flow across many layers. Because every token can attend to every other token within the model's context window, the model can learn relationships that span sentences and even paragraphs, which is essential for language-understanding tasks that depend on context and discourse-level information.

From a business and engineering perspective, the transformer offers compelling advantages: parallelizable computation accelerates training, pretraining on broad corpora yields transferable representations, and the same architectural family can power a wide range of applications—from classification and extraction to generation and planning. The shift away from strictly sequential models has enabled large-scale pretraining, leading to dramatic improvements in benchmarks and enabling practical deployment at scale. This architectural paradigm underpins a broad spectrum of models, from BERT-style encoders to GPT-style decoders and the encoder-decoder hybrids that span both worlds. For organizations evaluating AI strategy, understanding the transformer’s attention mechanism, encoder/decoder modalities, and the pretraining–fine-tuning lifecycle is essential for budgeting, governance, and roadmap planning.

In practical terms, transformer-based systems have become the default choice for many enterprise NLP goals. They power language understanding, content generation, translation, summarization, and question answering with performance that often scales with data and compute. The broad ecosystem—pretraining objectives, data strategies, benchmarking suites, and deployment tooling—has matured to support both research and production environments. For business leaders and AI engineers alike, mastering the transformer’s capabilities, limitations, and trade-offs is critical to align technical decisions with measurable outcomes such as accuracy, latency, reliability, and regulatory compliance.

Core building blocks of Transformer

The core building blocks of a transformer model are designed to enable deep representation learning while maintaining trainability at scale. Each transformer layer typically comprises a multi-head self-attention sublayer followed by a position-wise feed-forward network, with a residual connection and layer normalization around each sublayer. The attention mechanism lets every token build a contextual vector by weighing its relationships to all other tokens in the sequence. The feed-forward network then transforms each token’s representation independently. Because self-attention has no built-in notion of order (permuting the input tokens simply permutes the outputs), fixed positional encodings or learned position embeddings are added to inject information about token order. Collectively, these components enable the model to capture complex linguistic patterns, from surface syntax to semantic roles, without the step-by-step sequential processing that limits recurrent architectures.
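As an illustration of the fixed variant, the sketch below computes the sinusoidal encodings described in the original transformer paper with NumPy and adds them to token embeddings. The function name `sinusoidal_positions` and the random embeddings are illustrative assumptions; a learned scheme would instead look up a trainable vector for each position.

```python
import numpy as np

def sinusoidal_positions(seq_len, d_model):
    """Fixed sinusoidal position encodings (hypothetical helper name).

    PE[pos, 2i]   = sin(pos / 10000^(2i / d_model))
    PE[pos, 2i+1] = cos(pos / 10000^(2i / d_model))
    """
    assert d_model % 2 == 0, "this sketch assumes an even model dimension"
    positions = np.arange(seq_len)[:, None]          # (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]    # (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)   # even dimensions use sine
    pe[:, 1::2] = np.cos(angles)   # odd dimensions use cosine
    return pe

# Random stand-in token embeddings for a 6-token sequence, plus position information.
rng = np.random.default_rng(0)
token_embeddings = rng.normal(size=(6, 16))
x = token_embeddings + sinusoidal_positions(6, 16)
print(x.shape)  # (6, 16)
```

Because each dimension oscillates at a different frequency, every position receives a distinct pattern, and the encoding can be computed for any sequence length without training.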

Within an encoder, inputs are first embedded and enriched with positional information before being processed through stacked layers. A decoder, when used, applies masked (causal) self-attention so that each position can see only earlier tokens, and in encoder-decoder models it adds a cross-attention sublayer that attends to the encoder outputs while tokens are generated left to right. Layer normalization and dropout contribute to training stability and generalization, especially as models scale to hundreds of millions or billions of parameters. The architecture’s modularity (the number of layers, attention heads, and hidden dimensions can each be adjusted) allows practitioners to tailor models to specific latency budgets, hardware constraints, and task complexities. This modularity is a key driver of the rapid adoption of transformer architectures across industries and languages.
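The left-to-right constraint is usually enforced with a look-ahead (causal) mask. The NumPy sketch below is a simplified single-head version that, as an assumption for brevity, skips the learned query, key, and value projections and uses the input directly as Q, K, and V; the helper names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def causal_self_attention(x):
    """Single-head self-attention with a look-ahead mask (Q = K = V = x for brevity)."""
    seq_len, d_k = x.shape
    scores = x @ x.T / np.sqrt(d_k)
    # True strictly above the diagonal = future positions that must be hidden.
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -1e9, scores)
    weights = softmax(scores, axis=-1)
    return weights @ x, weights

rng = np.random.default_rng(0)
out, weights = causal_self_attention(rng.normal(size=(4, 8)))
print(np.round(weights, 2))  # the upper triangle is all zeros
```

Row *i* of `weights` has non-zero entries only in columns 0 through *i*, so each position's output depends only on itself and earlier tokens.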

Below is a compact implementation of scaled dot-product attention, the operation at the heart of every transformer block in both encoder and decoder stacks:

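```python
import math
import torch

def attention(Q, K, V, mask=None):
    # Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V
    d_k = Q.size(-1)
    scores = Q @ K.transpose(-2, -1) / math.sqrt(d_k)
    if mask is not None:
        # Boolean mask: True marks positions that must not be attended to
        scores = scores.masked_fill(mask, -1e9)
    weights = torch.softmax(scores, dim=-1)
    return weights @ V

# A single encoder/decoder layer applies attention, then a feed-forward network,
# with residual connections and layer normalization around each sublayer.
```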

The following list highlights the essential building blocks you will commonly encounter in transformer-based architectures:

- Multi-head self-attention mechanism to capture relationships across tokens

- Position-wise feed-forward networks to transform representations at each position

- Residual connections and layer normalization to stabilize deep networks

- Positional encoding to inject sequence order into attention, which on its own is order-agnostic
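To see how these pieces fit together, here is a minimal NumPy sketch of one encoder layer with post-layer-normalization ordering, as in the original transformer (many newer models normalize before each sublayer instead). It assumes random weights, no dropout, no padding mask, and layer normalization without learned gain and bias; the parameter names in `p` are hypothetical.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    # Normalize each position's vector to zero mean, unit variance (gain/bias omitted).
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(x, Wq, Wk, Wv, Wo, n_heads):
    seq_len, d_model = x.shape
    d_head = d_model // n_heads

    def split(t):
        # (seq_len, d_model) -> (n_heads, seq_len, d_head)
        return t.reshape(seq_len, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ Wq), split(x @ Wk), split(x @ Wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)   # (n_heads, seq, seq)
    heads = softmax(scores) @ v                             # (n_heads, seq, d_head)
    # Concatenate the heads back to (seq_len, d_model) and mix them with Wo.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ Wo

def feed_forward(x, W1, b1, W2, b2):
    # Applied independently at every position: Linear -> ReLU -> Linear.
    return np.maximum(0.0, x @ W1 + b1) @ W2 + b2

def encoder_layer(x, p, n_heads):
    # Post-LN: run the sublayer, add the residual, then normalize.
    x = layer_norm(x + multi_head_self_attention(x, p["Wq"], p["Wk"], p["Wv"], p["Wo"], n_heads))
    x = layer_norm(x + feed_forward(x, p["W1"], p["b1"], p["W2"], p["b2"]))
    return x

rng = np.random.default_rng(0)
d_model, d_ff, n_heads, seq_len = 16, 64, 4, 5
shapes = {"Wq": (d_model, d_model), "Wk": (d_model, d_model), "Wv": (d_model, d_model),
          "Wo": (d_model, d_model), "W1": (d_model, d_ff), "b1": (d_ff,),
          "W2": (d_ff, d_model), "b2": (d_model,)}
params = {name: rng.normal(scale=0.1, size=shape) for name, shape in shapes.items()}
y = encoder_layer(rng.normal(size=(seq_len, d_model)), params, n_heads)
print(y.shape)  # (5, 16)
```

Splitting `d_model` into `n_heads` smaller heads keeps the cost of multi-head attention close to that of one full-width head, while letting each head learn a different attention pattern.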

BERT and the era of bidirectional context

BERT introduced the concept of deep bidirectional context by pretraining an encoder-only transformer on masked language modeling and a sentence-pair objective (next-sentence prediction). The model learns to predict masked tokens using context from both directions, creating richer representations than unidirectional approaches. After pretraining, BERT can be fine-tuned for downstream tasks by attaching task-specific heads, enabling strong performance on classification, extraction, and inference tasks. The encoder-centric design makes BERT ideal for understanding rather than generation, aligning with many enterprise needs where interpretation and accurate extraction are paramount.
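The masking recipe reported for BERT selects about 15% of input positions as prediction targets, replaces 80% of those with a `[MASK]` token, 10% with a random token, and leaves 10% unchanged. The sketch below reproduces that recipe on a list of word strings. It is a simplification that assumes independent per-token selection and whole words rather than WordPiece subword IDs, and `mask_for_mlm` is a hypothetical name.

```python
import random

MASK = "[MASK]"

def mask_for_mlm(tokens, vocab, mask_prob=0.15, seed=0):
    """BERT-style masking sketch: choose ~mask_prob of positions as targets;
    replace 80% of them with [MASK], 10% with a random token, keep 10% unchanged."""
    rng = random.Random(seed)
    inputs = list(tokens)
    labels = [None] * len(tokens)   # None = not a prediction target
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue
        labels[i] = tok             # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)
        # otherwise: leave the original token in place
    return inputs, labels

tokens = "the cat sat on the mat because it was tired".split()
vocab = sorted(set(tokens))
inputs, labels = mask_for_mlm(tokens, vocab, mask_prob=0.3, seed=1)
print(inputs)
print(labels)
```

During pretraining, the loss is computed only at positions where `labels` is not `None`, which is what forces the encoder to use context from both sides of each target.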

Subsequent variants such as RoBERTa, ALBERT, and ELECTRA refined the training recipe, the pretraining objective, or the architecture to extract more performance from a given budget of data, parameters, or compute. RoBERTa trained longer on more data with larger batches and dropped next-sentence prediction; ALBERT factorized the embedding matrix and shared parameters across layers to shrink the parameter count and memory footprint; ELECTRA replaced masked-token prediction with replaced-token detection, learning from every input position rather than only the masked ones, which improves sample efficiency. These innovations broadened the practical reach of BERT-like encoders, improving performance on a wide array of tasks, particularly in domains with limited labeled data or where inference latency is constrained. The BERT family established a robust foundation for representation learning and a familiar baseline for evaluating advances in encoder stacks.

For businesses and engineers, BERT and its successors demonstrated the value of pretraining on expansive, general-domain text and then fine-tuning on domain-specific data. They became the go-to choice for named-entity recognition, sentiment analysis, document classification, and information retrieval, among other tasks. While BERT-like models are optimized for understanding, their influence catalyzed a broader ecosystem of transformer variants that address generation, dialogue, and multimodal tasks. The BERT revolution laid the groundwork for a practical, reusable paradigm: high-quality representations learned on large corpora can be adapted to many tasks with relatively modest labeled data and specialized fine-tuning.

Evolution from BERT to GPT to T5 to beyond

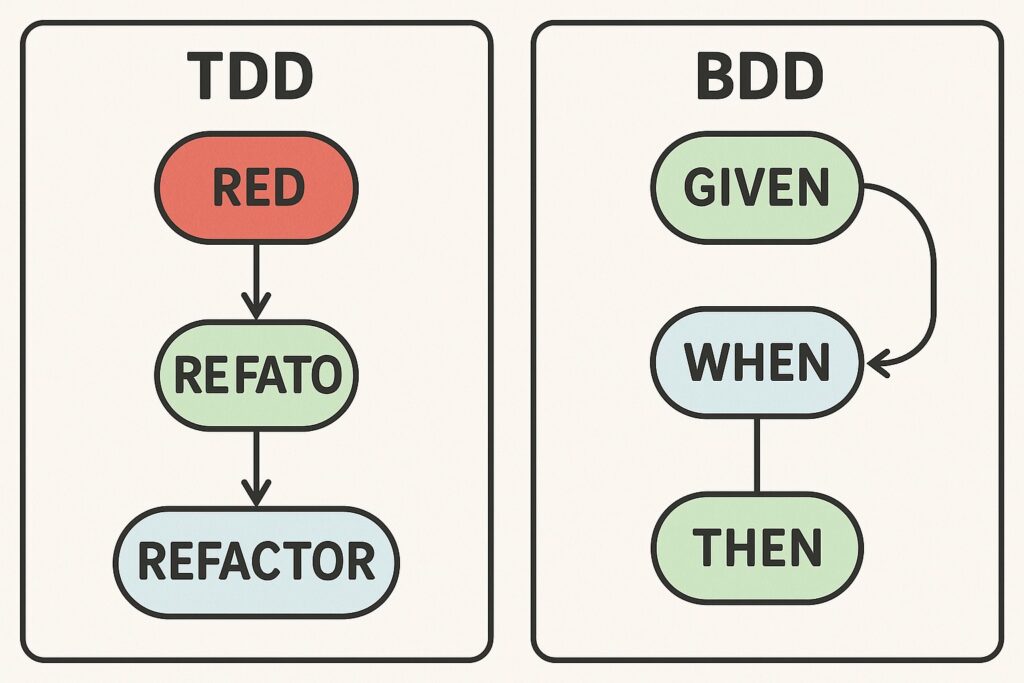

The history of transformer-based NLP often unfolds along three broad strands: encoder-only systems, decoder-only generation, and encoder-decoder versatility. Encoder-only models (e.g., BERT and RoBERTa) excel at understanding and extraction tasks by producing rich contextual embeddings. Decoder-only models (e.g., GPT series) emphasize autoregressive text generation and in-context learning, enabling fluent outputs and flexible prompting. Encoder-decoder architectures like T5 and BART demonstrate cross-domain versatility by modeling tasks as sequence-to-sequence problems, allowing simultaneous handling of understanding, transformation, and generation within a single framework.

- Encoder-only models: BERT and its successors optimize representations for understanding tasks with masked-language objectives and optional sentence-pair signals.

- Decoder-only generation: GPT-style models emphasize autoregressive text generation, enabling coherent long-form outputs and in-context learning.

- Encoder-decoder versatility: T5 and BART demonstrate how the same architecture can be used for both understanding and generation in a unified framework.

- Efficient and specialized variants: a family of optimizations and training objectives (e.g., ELECTRA-style pretraining, data-efficient methods, and multilingual scaling) to improve performance per compute unit.

From a product and deployment perspective, the evolution highlights a spectrum of capabilities and trade-offs. Encoder-only models are typically the best choice for high-precision classification and extraction with stable latency profiles. Decoder-only models excel at generation and instruction-following, but they demand careful prompting, safety safeguards, and often higher inference costs. Encoder-decoder models offer balanced performance for translation, summarization, and complex QA tasks, while also allowing flexible prompting and fine-tuning strategies. For organizations, the key decision often centers on the required capabilities, data availability, latency targets, and governance constraints rather than chasing a single “best” paradigm. This evolution also underscores the importance of diverse pretraining data, task-specific fine-tuning, and thoughtful data curation to achieve robust, real-world performance across domains.

How transformers process language

Transformers convert raw text into numerical representations that neural networks can manipulate. Tokenization converts text into subword units using methods such as WordPiece, BPE, or Unigram models, balancing vocabulary size with linguistic coverage. Each token is mapped to a dense embedding, to which a positional encoding—either fixed or learned—is added, ensuring the model can distinguish token order. The resulting sequence passes through the model’s stacked attention layers, progressively building contextualized representations that capture syntax, semantics, and discourse-level information essential for downstream tasks.
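To make subword tokenization concrete, the toy trainer below learns byte-pair-encoding merges from a tiny word list by repeatedly fusing the most frequent adjacent pair of symbols. It is an illustrative sketch only: production BPE tokenizers add details such as byte-level alphabets and end-of-word handling, and WordPiece and Unigram choose their vocabularies with different criteria. `learn_bpe` and the corpus are hypothetical.

```python
from collections import Counter

def learn_bpe(words, num_merges):
    """Toy BPE trainer: repeatedly merge the most frequent adjacent symbol pair."""
    # Start from characters; each word is a tuple of symbols with its corpus frequency.
    vocab = Counter(tuple(w) for w in words)
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)   # ties go to the pair seen first
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_vocab[tuple(merged)] += freq
        vocab = new_vocab
    return merges, vocab

corpus = ["low", "low", "lower", "newest", "newest", "widest"]
merges, vocab = learn_bpe(corpus, num_merges=3)
print(merges)  # [('l', 'o'), ('lo', 'w'), ('e', 's')]
```

Frequent fragments become single vocabulary symbols after a few merges, while rare words can still be spelled out from smaller pieces, which is how subword vocabularies balance size against coverage.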

Attention mechanisms are central to this process. Multi-head attention allows the model to attend to several aspects of the input simultaneously, while masking in the decoder preserves causality during generation. Encoder self-attention enables token-to-token interactions within the input, producing rich, context-aware representations. In encoder-decoder models, the decoder's cross-attention attends to the encoder outputs while each token is generated, enabling coherent sequence-to-sequence behavior. Pretraining objectives vary by family (masked language modeling for BERT-style encoders, autoregressive next-token prediction for GPT-style decoders, span corruption for T5), and supervised fine-tuning then adapts the model to specific tasks; in all cases the approach relies on cross-entropy losses and gradient-based optimization over large corpora. Scaling data and compute often correlates with performance gains, particularly for tasks requiring nuanced world knowledge or long-range dependencies.
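The cross-attention step can be sketched in a few lines of NumPy: queries are projected from the decoder's states, while keys and values come from the encoder outputs, so the weight matrix has one row per target position and one column per source position. This is a single-head simplification with random projection matrices; the function and variable names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(decoder_states, encoder_outputs, Wq, Wk, Wv):
    """Queries come from the decoder; keys and values come from the encoder output."""
    q = decoder_states @ Wq       # (T_dec, d)
    k = encoder_outputs @ Wk      # (T_enc, d)
    v = encoder_outputs @ Wv      # (T_enc, d)
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))   # (T_dec, T_enc)
    return weights @ v, weights

rng = np.random.default_rng(0)
d = 8
enc = rng.normal(size=(7, d))   # e.g. 7 source-sentence positions
dec = rng.normal(size=(3, d))   # 3 target tokens generated so far
Wq, Wk, Wv = (rng.normal(scale=0.3, size=(d, d)) for _ in range(3))
context, weights = cross_attention(dec, enc, Wq, Wk, Wv)
print(context.shape, weights.shape)  # (3, 8) (3, 7)
```

No causal mask is applied here, because every decoder position may look at the entire source sequence; causality is enforced only in the decoder's own self-attention.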

In production environments, practical considerations such as sequence length, memory footprint, and latency drive architectural choices. Techniques like mixed-precision training, gradient checkpointing, and hardware-optimized kernels help scale models to billions of parameters while keeping training times manageable. Inference strategies such as beam search, nucleus sampling, and temperature control shape the quality and diversity of generated text, affecting user experience and operational risk. For decision-makers, understanding how tokenization interacts with model capacity, and that the cost of standard self-attention grows quadratically with input length (doubling the sequence roughly quadruples the attention computation), is critical for planning deployment, governance, and risk controls in real-world business contexts.
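Temperature and nucleus sampling both act on the model's output logits at each decoding step. The pure-Python sketch below applies temperature scaling and then samples from the smallest set of tokens whose cumulative probability reaches `top_p`. The function name, the four-token vocabulary, and the choice to treat a non-positive temperature as greedy decoding are assumptions for illustration.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, top_p=1.0, rng=random):
    """Temperature scaling followed by nucleus (top-p) sampling over a list of logits."""
    if temperature <= 0:
        # Treat a non-positive temperature as greedy decoding (an assumption of this sketch).
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]   # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]
    # Keep the smallest set of most-likely tokens whose cumulative probability reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    nucleus, cumulative = [], 0.0
    for i in order:
        nucleus.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    # random.choices normalizes the weights, which renormalizes within the nucleus.
    return rng.choices(nucleus, weights=[probs[i] for i in nucleus], k=1)[0]

logits = [2.0, 1.0, 0.5, -1.0]
rng = random.Random(0)
samples = [sample_next_token(logits, temperature=0.7, top_p=0.9, rng=rng) for _ in range(10)]
print(samples)
```

Lower temperatures sharpen the distribution toward the top token, and smaller `top_p` values cut off the low-probability tail; with these logits, only the two most likely tokens fall inside the 0.9 nucleus.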

Practical implications for NLP applications

Transformer-based models unlock a broad set of practical capabilities across industries, from automated customer support and document understanding to multilingual translation and compliance monitoring. The business value arises from high performance across tasks, rapid adaptation to new domains, and scalable deployment. However, this power comes with considerations around data governance, privacy, compute expenditure, and safety. Enterprises typically pursue a mix of broad pretraining and domain-specific fine-tuning to achieve robust results while managing risk. The resulting systems can boost productivity, accelerate decision-making, and enable new value propositions in areas such as content moderation, enterprise search, and knowledge management.

From an engineering standpoint, deployment decisions involve balancing latency, throughput, and memory usage. Techniques such as model distillation, pruning, and quantization reduce model footprint, usually at the cost of some accuracy that must be measured against the task's requirements. Prompt engineering and few-shot learning can reduce the need for extensive fine-tuning in some scenarios, though they may introduce variability in outputs. Data pipelines, monitoring, and evaluation tooling become essential as models move from pilots to production. Policies governing bias, fairness, and safety, along with robust testing, are increasingly integrated into the lifecycle of model development and deployment.
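As a concrete example of quantization, the sketch below maps a float32 weight matrix to int8 with a single symmetric scale per tensor, which cuts its storage by a factor of four. This is the simplest possible scheme, chosen for illustration; production toolchains typically add per-channel scales, calibration, and int8 kernels for the matrix multiplications themselves. The function names are hypothetical.

```python
import numpy as np

def quantize_int8(w):
    """Symmetric per-tensor int8 quantization: w is approximately scale * q, q in [-127, 127]."""
    max_abs = float(np.max(np.abs(w)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.normal(scale=0.02, size=(256, 256)).astype(np.float32)   # a stand-in weight matrix
q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)
print(f"bytes: {w.nbytes} -> {q.nbytes}, max abs error: {np.max(np.abs(w - w_hat)):.2e}")
```

Rounding bounds the per-weight error by half the scale, which is why outlier weights (which inflate `max_abs` and therefore the scale) are a common source of accuracy loss in simple per-tensor schemes.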

In practice, transformer-based models, ranging from BERT variants to GPT-style systems and T5-like architectures, are increasingly embedded in enterprise AI programs. The adaptability of pretrained representations to domain data lowers time-to-value for new tasks while still leveraging the broad world knowledge acquired during pretraining. For organizations, the decision to adopt transformer-based solutions should consider data governance, regulatory requirements, and the feasibility of on-premises deployment or privacy-preserving inference to meet policy constraints. When aligned with business goals, data strategy, and responsible AI practices, these models can deliver measurable ROI and a sustained competitive advantage.

Challenges and future directions

Despite their success, transformer-based models face ongoing challenges that shape research and product strategies. Data efficiency remains a critical concern: achieving state-of-the-art results often requires enormous amounts of unlabeled data and substantial compute resources. Techniques such as semi-supervised learning, self-supervised objectives, and distillation aim to reduce labeled-data and compute requirements while preserving performance. Interpretability and auditability are also central as models grow in capability; practitioners seek methods to trace decisions, identify failure modes, and understand biases rooted in training data. Alignment with human preferences, safety constraints, and robust performance under distribution shift are essential for responsible deployment across domains.

Scaling and efficiency continue to drive both opportunities and costs. As models expand to billions of parameters, energy use, memory, and hardware availability become critical constraints. This has spurred research into efficient attention variants, sparse architectures, and better parallelism strategies. Multilingual and cross-lingual capabilities are advancing, aiming to reduce data requirements per language while preserving or enhancing cross-language performance. Multimodal extensions, which combine text with images, audio, and structured data, represent a growing frontier where transformer-like architectures fuse diverse signals to unlock deeper business insights and user-facing capabilities.
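One widely used family of efficient attention variants restricts each token to a local window of neighbours, turning the quadratic number of query-key pairs into one that grows linearly with sequence length. The NumPy sketch below only builds such a mask and counts the pairs it keeps. The window size and function name are illustrative assumptions, and real speedups require attention kernels that skip the masked blocks rather than computing and discarding them.

```python
import numpy as np

def sliding_window_mask(seq_len, window, causal=True):
    """Boolean mask where True means 'may attend'. Each query sees at most
    `window` neighbouring positions instead of the whole sequence."""
    i = np.arange(seq_len)[:, None]   # query positions
    j = np.arange(seq_len)[None, :]   # key positions
    if causal:
        return (j <= i) & (i - j < window)
    return np.abs(i - j) < window

full = 1024 * 1024
local = int(sliding_window_mask(1024, window=128).sum())
print(f"attended pairs: {local} of {full} ({local / full:.1%})")
```

With a 128-token causal window over 1,024 tokens, roughly 12% of the query-key pairs remain, and that fraction keeps shrinking as the sequence grows while the window stays fixed.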

Finally, governance and risk management are becoming central to large-scale deployments. Organizations invest in bias and safety testing, explainability tooling, and monitoring dashboards to detect drift or unintended behavior over time. The future of transformers will not be defined solely by model size but by smarter training regimens, higher-quality data, and transparent, auditable deployment practices that align with organizational values and regulatory expectations. This holistic view is essential for sustaining value from transformer-based AI in complex business environments.

Practical implementation considerations

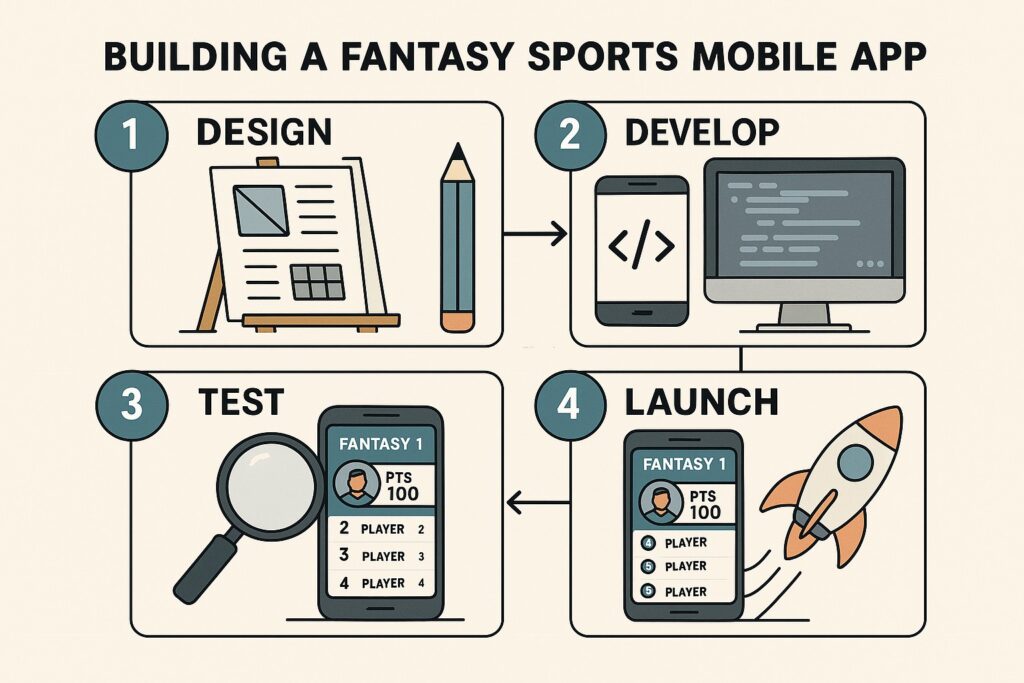

When planning an implementation strategy for transformer-based models, leaders must align technical choices with business outcomes and risk tolerances. A practical approach combines pretraining on large, diverse corpora with task-specific fine-tuning, enabling rapid adaptation to new domains while preserving a core knowledge base. Operational considerations—including compute budgeting, model versioning, and continuous evaluation—support sustainable deployment at scale and enable iterative improvement. The end-to-end lifecycle—from data acquisition to monitoring and governance—should be designed to deliver measurable value while maintaining compliance.

From a technical perspective, several design decisions have a disproportionate impact on performance and cost. Model size, number of layers, hidden dimensions, and attention heads shape capacity and compute expense. Sequence length determines memory usage and throughput, influencing latency budgets for interactive applications. Learning rate schedules and warmup steps critically affect convergence during both pretraining and fine-tuning. Regularization techniques such as dropout and weight decay improve generalization, while appropriate optimization strategies (e.g., AdamW, LAMB) and mixed-precision training optimize speed and resource utilization. Effective tokenization and vocabulary sizing influence both accuracy and memory, particularly for multilingual or domain-specific deployments.
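The interaction between warmup and decay is easiest to see numerically. The sketch below implements linear warmup followed by linear decay to zero, similar in shape to the schedule reported for BERT pretraining; the peak rate of 5e-5 and the step counts are hypothetical fine-tuning values, not recommendations.

```python
def warmup_linear_decay(step, peak_lr, warmup_steps, total_steps):
    """Linear warmup from 0 to peak_lr, then linear decay to 0 at total_steps."""
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)

# Hypothetical fine-tuning run: 1,000 steps with 10% warmup.
for step in (0, 50, 100, 550, 1000):
    print(step, warmup_linear_decay(step, 5e-5, 100, 1000))
```

In PyTorch, a function like this can be wrapped with `torch.optim.lr_scheduler.LambdaLR` after dividing by the peak rate (since `LambdaLR` expects a multiplicative factor) and paired with `torch.optim.AdamW`, whose `weight_decay` argument applies decoupled weight decay.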

Fine-tuning strategies can further optimize performance with minimal retraining. Options include task-adaptive pretraining, adapters, or prompt-tuning to tailor a shared base model to a specific domain or task without full-scale reoptimization. Deployment considerations such as quantization, distillation, and hardware-specific optimizations balance latency targets with accuracy. Operational practices—continuous evaluation, drift monitoring, and governance reviews—ensure sustained reliability and safety in production. By combining principled model design with disciplined data management and robust monitoring, organizations can realize dependable NLP capabilities that scale with business needs.
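Adapters are small bottleneck modules inserted into each layer of a frozen base model, so that only a tiny fraction of parameters is trained per task. The NumPy sketch below shows one such module with a residual connection. The sizes (768 hidden units, a 64-unit bottleneck) and the zero-initialized up-projection, which makes the adapter start as an identity function, are illustrative choices rather than a specific library's implementation.

```python
import numpy as np

class BottleneckAdapter:
    """Small residual bottleneck inserted into a frozen transformer layer (a sketch).
    Only these weights would be trained; the base model stays unchanged."""

    def __init__(self, d_model, bottleneck, rng):
        self.W_down = rng.normal(scale=0.02, size=(d_model, bottleneck))
        self.b_down = np.zeros(bottleneck)
        # Zero-initialized up-projection: the adapter starts as an identity map,
        # so inserting it does not change the pretrained model's behaviour.
        self.W_up = np.zeros((bottleneck, d_model))
        self.b_up = np.zeros(d_model)

    def __call__(self, h):
        z = np.maximum(0.0, h @ self.W_down + self.b_down)   # down-project + ReLU
        return h + z @ self.W_up + self.b_up                  # up-project + residual

    def num_params(self):
        return sum(p.size for p in (self.W_down, self.b_down, self.W_up, self.b_up))

rng = np.random.default_rng(0)
adapter = BottleneckAdapter(d_model=768, bottleneck=64, rng=rng)
h = rng.normal(size=(10, 768))
print(np.allclose(adapter(h), h), adapter.num_params())  # True 99136
```

At about 0.1M parameters, each adapter is small compared with the roughly 7M parameters in a single BERT-base-sized encoder layer, which is what makes storing one set of adapters per task practical.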

To guide implementation, teams often rely on practical guidelines for model selection, training pipelines, and deployment strategies. The goal is to deliver NLP capabilities that meet user expectations for quality, responsiveness, and privacy while maintaining responsible AI practices. The following list highlights key hyperparameters and design considerations that commonly determine a transformer’s performance, latency, and cost profile:

- Model size and depth: number of layers, hidden dimension, and attention heads influence capacity and compute cost.

- Sequence length and truncation: maximum input length affects memory usage and throughput.

- Learning rate schedule and warmup steps: critical for stable convergence during pretraining and fine-tuning.

- Regularization: dropout, weight decay, label smoothing to improve generalization.

- Optimization and precision: choice of optimizer (AdamW, LAMB) and mixed-precision training (e.g., FP16 with automatic mixed precision) to balance speed and memory.

- Data management: effective tokenization, vocabulary sizing, and handling of rare tokens.

- Fine-tuning strategy: task-adaptive pretraining, adapters, or prompt-tuning to limit retraining costs.

- Deployment considerations: quantization, distillation, and hardware-specific optimizations for latency targets.
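Model size follows directly from these hyperparameters. The function below is a back-of-the-envelope sketch that counts the weights and biases of a BERT-style encoder (attention projections, feed-forward layers, layer norms, and token, position, and segment embeddings). It deliberately ignores the pooler and any task-specific head, and its name and arguments are hypothetical.

```python
def encoder_param_count(n_layers, d_model, d_ff, vocab_size, max_positions, type_vocab=2):
    """Approximate parameter count of a BERT-style encoder (weights and biases)."""
    attention = 4 * (d_model * d_model + d_model)            # Q, K, V, output projections
    ffn = d_model * d_ff + d_ff + d_ff * d_model + d_model    # two linear layers
    layer_norms = 2 * (2 * d_model)                           # two LayerNorms: gain + bias
    per_layer = attention + ffn + layer_norms
    # Token + position + segment embeddings, plus the embedding LayerNorm.
    embeddings = (vocab_size + max_positions + type_vocab) * d_model + 2 * d_model
    return n_layers * per_layer + embeddings

# BERT-base-like settings: 12 layers, d_model 768, d_ff 3072, 30,522-token vocabulary, 512 positions.
total = encoder_param_count(12, 768, 3072, 30522, 512)
print(f"{total / 1e6:.1f}M parameters")  # 108.9M parameters
```

Each layer contributes about 12 * d_model^2 weights when d_ff = 4 * d_model (4 * d_model^2 for attention, 8 * d_model^2 for the feed-forward network), so doubling the hidden size roughly quadruples the per-layer cost, while the vocabulary adds a term linear in d_model. The result is consistent with the roughly 110M parameters commonly cited for BERT-base.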

FAQ

What is a transformer, in simple terms?

A transformer is a neural network architecture that uses attention mechanisms to weigh the relevance of different words in a sequence, allowing the model to understand context and relationships without processing text strictly from start to finish. This enables fast training on large datasets and supports both understanding and generating language, depending on the model variant.

How does BERT differ from GPT in terms of capabilities?

BERT is an encoder-focused model designed for understanding and representation learning, typically used for tasks like classification and extraction. GPT is a decoder-focused model optimized for generation, producing fluent text and handling in-context learning. Encoder–decoder models like T5 combine both capabilities, enabling tasks that require both understanding and generation within a single framework.

What role do pretraining objectives play in transformer performance?

Pretraining objectives shape the kinds of linguistic knowledge the model learns before fine-tuning. Masked language modeling and next-sentence prediction, used in BERT, help the model learn bidirectional context and sentence relationships. Other objectives, such as autoregressive language modeling (as in GPT) or span corruption, influence generation quality, coherence, and data efficiency. The choice of objective affects downstream suitability for specific tasks and data regimes.

Why are transformer models so compute-intensive, and is there a path to efficiency?

Transformers often require large-scale data and substantial compute because of their large parameter counts, deep stacks of layers, and self-attention whose cost grows quadratically with sequence length. Efficiency gains come from several approaches: model distillation, quantization, pruning, sparse attention, mixture-of-experts, and improved training techniques. Additionally, evidence suggests that broader and higher-quality data can yield better performance per parameter, making data strategy an important lever alongside architectural optimizations.
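Knowledge distillation, one of the techniques listed above, trains a small student model to match a larger teacher's softened output distribution. The NumPy sketch below implements the common soft-target formulation (a temperature-scaled KL term multiplied by T², blended with ordinary cross-entropy on hard labels); the temperature of 2.0, the 0.5 blending weight, and the function names are illustrative assumptions.

```python
import numpy as np

def softmax(x, T=1.0):
    z = x / T
    z = z - z.max(axis=-1, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, labels, T=2.0, alpha=0.5):
    """Blend KL(teacher || student) at temperature T with cross-entropy on hard labels."""
    p_teacher = softmax(teacher_logits, T)
    log_p_student_T = np.log(softmax(student_logits, T))
    kl = np.sum(p_teacher * (np.log(p_teacher) - log_p_student_T), axis=-1).mean()
    # Scale by T^2 so soft-target gradients keep a comparable magnitude as T changes.
    soft = (T ** 2) * kl
    log_p_student = np.log(softmax(student_logits))
    hard = -log_p_student[np.arange(len(labels)), labels].mean()
    return alpha * soft + (1 - alpha) * hard

rng = np.random.default_rng(0)
teacher = rng.normal(size=(4, 5))   # stand-in logits: 4 examples, 5 classes
student = rng.normal(size=(4, 5))
labels = np.array([0, 3, 1, 4])
loss = distillation_loss(student, teacher, labels)
print(f"{loss:.4f}")
```

A higher temperature exposes more of the teacher's relative preferences among wrong answers, which is the extra signal a compact student learns from beyond the hard labels.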

How should an organization approach governance and safety for transformer-based systems?

Governance should address data provenance, bias mitigation, model transparency, and ongoing monitoring for drift or harmful outputs. Safety practices include content filtering, prompt safeguards, evaluation against failure modes, and clear escalation paths for problematic outputs. Regulatory requirements may dictate data handling, user consent, and auditable deployment practices. A thoughtful governance framework helps ensure responsible AI with predictable risk management and regulatory alignment.

What are practical considerations for deploying transformers in production?

Practical deployment considerations include latency targets, throughput needs, memory constraints, and cost. Techniques such as model distillation, quantization, and hardware-optimized inference help meet performance requirements. Data pipelines, monitoring, and ongoing evaluation are essential to sustain quality and safety. Finally, organizational factors—data governance, access control, and license compliance—play a critical role in ensuring scalable, compliant deployments that deliver real business value.