AI Agents and Automation: AutoGPT, Plugins & Beyond

Strategic role of AI agents in modern automation

AI agents are increasingly positioned as the operating system of automated business processes. Rather than executing a single canned routine, these agents interpret broad goals, negotiate trade-offs, and progressively break work down into sub-tasks that can be executed by specialized tools. This shift enables organizations to move from manual handoffs to end-to-end automation that adapts to changing inputs, data quality, and stakeholder priorities. In practice, this means automation that can respond to new client prompts, regulatory changes, or unexpected data patterns with little to no reprogramming.

To realize reliable autonomous workstreams, enterprises must align agents with clear objectives, robust data governance, and measurable outcomes. The value proposition rests on faster decision cycles, less human labor spent on repetitive, cognitively demanding tasks, and greater consistency across processes. However, the transition also introduces new considerations around safety, traceability, and accountability, which must be addressed through disciplined design, monitoring, and governance.

Effectively deploying AI agents requires a thoughtful architecture that supports goal setting, task decomposition, tool integration, and oversight. This article outlines practical patterns for using AutoGPT, plugins, and orchestration techniques to deliver scalable intelligent automation that remains controllable, auditable, and aligned with business priorities.

AutoGPT: capabilities, architecture, and practical deployments

AutoGPT represents a class of autonomous agents that can interpret a defined objective, plan a sequence of actions, and execute tasks through a chain of calls to tools and services. The core value lies in the ability to turn high-level goals into concrete sub-tasks and to adapt the plan as new information becomes available. In a business context, this enables capabilities such as continuous data gathering, automated report generation, and iterative problem solving without constant manual intervention.

From an architectural perspective, AutoGPT-like systems typically comprise a planner or decision module, a task executor, memory or context persistence, and a plugin surface for interfacing with external services. The planner analyzes the goal, generates sub-tasks, and sequences tool calls. The executor carries out tasks, handles responses, and may retry or reframe steps when outcomes are incomplete or data quality is poor. Context persistence tracks state, progress, and historical decisions to inform subsequent actions and provide auditable traces for governance.
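
The division of labor between planner, executor, and context persistence can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not AutoGPT's actual API: the names `Memory`, `plan`, `execute`, and `run_agent` are hypothetical, the planner is a hard-coded stand-in for a language-model call, and tools are chosen by the first word of each task.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional

# All names below are hypothetical; this is not AutoGPT's real interface.
Tool = Callable[[str], Optional[str]]


@dataclass
class Memory:
    """Context persistence: records every attempt so the run can be audited."""
    trace: list[dict] = field(default_factory=list)

    def record(self, task: str, attempt: int, result: Optional[str]) -> None:
        self.trace.append({"task": task, "attempt": attempt, "result": result})


def plan(goal: str) -> list[str]:
    """Planner stand-in: a real system would ask a model to decompose the goal."""
    return [f"gather data for: {goal}", f"summarize findings for: {goal}"]


def execute(task: str, tools: dict[str, Tool], memory: Memory,
            max_attempts: int = 2) -> Optional[str]:
    """Executor: pick a tool by the task's first word and retry on empty results."""
    tool = tools[task.split()[0]]
    for attempt in range(1, max_attempts + 1):
        result = tool(task)
        memory.record(task, attempt, result)
        if result is not None:
            return result
    return None  # every attempt came back empty


def run_agent(goal: str, tools: dict[str, Tool]) -> tuple[list[Optional[str]], Memory]:
    """Plan the goal, execute each sub-task in order, and return results plus the trace."""
    memory = Memory()
    results = [execute(task, tools, memory) for task in plan(goal)]
    return results, memory
```

Calling `run_agent("Q3 report", {"gather": ..., "summarize": ...})` returns the per-task results together with a `Memory` whose `trace` lists every attempt, including retries. That trace is the kind of record a governance review would inspect.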

For practical deployments, teams often start with a narrowly scoped objective that can be achieved within existing data ecosystems and security boundaries. Examples include automating dataset preparation for analytics, triaging customer tickets by categorizing issues and routing them to the right teams, or drafting standard operating procedures based on observed workflows. Over time, the agent is extended with additional plugins and safety guardrails to handle more complex workflows, integrate with enterprise systems, and align with policy requirements.

Example configuration snippet (illustrative):

```json
{
  "agent": "AutoGPT",
  "goal": "Ingest customer service requests, categorize, and create resolved task tickets",
  "constraints": ["response time < 2 minutes", "no PII leakage", "audit-log enabled"],
  "plugins": ["data-connector", "ticketing-automation", "sentiment-analysis"],
  "safety": { "guardrails": true, "human-in-the-loop": false }
}
```

ChatGPT plugins and modular automation for business workflows

ChatGPT plugins extend the capabilities of autonomous agents by connecting to external systems, databases, and specialized services. This modular approach enables rapid composition of workflows that can pull data from multiple sources, perform calculations, and trigger downstream actions. The result is a more capable automation fabric that can adapt to changing business needs by swapping or upgrading plugins without rewriting core logic.

In practice, effective plugin ecosystems enable three key capabilities: data access and enrichment, task orchestration and control flow, and governance-aware execution. Data connectors bring in external information, from CRM records to ERP data and document repositories. Orchestration plugins coordinate sequences of actions across services, ensuring dependencies are respected and results are consolidated. Governance plugins enforce policy checks, logging, and access controls to maintain compliance and transparency.

- Data connectors and adapters for databases, clouds, and file systems

- Task orchestration layers that sequence, parallelize, and error-handle work

- Knowledge management and retrieval plugins for context-aware reasoning

- Monitoring, observability, and performance telemetry plugins

- Security, governance, and policy enforcement plugins
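
One way to picture the "swap plugins without rewriting core logic" idea is a small registry keyed by plugin name. The sketch below is an assumption-laden illustration, not the ChatGPT plugin interface: `Plugin`, `CrmConnector`, `AuditLogger`, and `Registry` are hypothetical names, and the connector returns hard-coded demo data.

```python
from __future__ import annotations

from typing import Protocol


class Plugin(Protocol):
    """Hypothetical plugin contract: a name plus a payload-in, payload-out call."""
    name: str

    def run(self, payload: dict) -> dict: ...


class CrmConnector:
    """Data connector stand-in: enriches the payload with a customer attribute."""
    name = "crm-connector"

    def run(self, payload: dict) -> dict:
        return {**payload, "customer_tier": "gold"}  # hard-coded demo value


class AuditLogger:
    """Governance stand-in: records which keys passed through the pipeline."""
    name = "audit-logger"

    def __init__(self) -> None:
        self.entries: list[list[str]] = []

    def run(self, payload: dict) -> dict:
        self.entries.append(sorted(payload))
        return payload


class Registry:
    """Orchestration stand-in: runs registered plugins in the requested order."""

    def __init__(self) -> None:
        self._plugins: dict[str, Plugin] = {}

    def register(self, plugin: Plugin) -> None:
        self._plugins[plugin.name] = plugin

    def run_pipeline(self, names: list[str], payload: dict) -> dict:
        for name in names:
            payload = self._plugins[name].run(payload)
        return payload
```

Because the pipeline refers to plugins only by name, replacing `CrmConnector` with a different connector that registers under the same name leaves the orchestration code unchanged.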

Orchestration patterns, decision-making, and risk management

Effective orchestration combines planning, execution, monitoring, and adaptation. A typical pattern starts with a well-defined goal, followed by decomposition into sub-tasks, selection of appropriate tools, and an execution loop that includes checkpoints, quality checks, and human oversight when confidence is low. Important design considerations include avoiding over-reliance on a single data source, ensuring that retry logic respects rate limits, and maintaining a clear audit trail of decisions and actions.
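
Retry logic that respects rate limits might look like the following sketch. It assumes a hypothetical `RateLimited` exception carrying the wait time the service requested; real services signal this differently (for example through an HTTP `Retry-After` header), so treat the names and defaults here as placeholders.

```python
from __future__ import annotations

import time
from typing import Callable


class RateLimited(Exception):
    """Hypothetical error raised by a tool when the service asks callers to slow down."""

    def __init__(self, retry_after: float) -> None:
        super().__init__(f"rate limited, retry after {retry_after}s")
        self.retry_after = retry_after


def call_with_retry(tool: Callable[[], str], max_attempts: int = 4,
                    base_delay: float = 0.5,
                    sleep: Callable[[float], None] = time.sleep) -> str:
    """Call `tool`, waiting between attempts whenever it reports a rate limit."""
    for attempt in range(max_attempts):
        try:
            return tool()
        except RateLimited as err:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # Wait at least as long as the service asked, otherwise back off exponentially.
            sleep(max(err.retry_after, base_delay * 2 ** attempt))
    raise ValueError("max_attempts must be at least 1")
```

The `sleep` parameter is injectable so the delay schedule can be checked in tests without actually waiting.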

Several practical patterns emerge for scalable automation. First, adopt a plan-act-adjust loop that uses feedback from outcomes to refine subsequent steps. Second, implement guardrails and safety checks that prevent unintended side effects, such as data leakage or compliance violations. Third, design for observability by centralizing logs, outcomes, and decision rationales to support post-hoc audits and continuous improvement. These patterns help organizations balance speed with reliability and accountability as automation scales.
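
As a rough illustration of the second and third patterns, a guardrail can be paired with a decision log so that every allow or block decision carries its rationale. The sketch below is deliberately simplistic and rests on assumptions: the function name `guarded_action` is hypothetical, and a single email regular expression stands in for real PII detection, which needs far broader coverage.

```python
from __future__ import annotations

import json
import re

# Simplistic stand-in for PII detection: matches email-like strings only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def guarded_action(action: str, payload: str, log: list[str]) -> bool:
    """Return True if the action may proceed; append a JSON decision record either way."""
    leaked = EMAIL.findall(payload)
    allowed = not leaked
    rationale = (
        "no email addresses found"
        if allowed
        else f"blocked: {len(leaked)} email address(es) in payload"
    )
    log.append(json.dumps({"action": action, "allowed": allowed, "rationale": rationale}))
    return allowed
```

Writing the record before returning means blocked actions are logged as well as permitted ones, which is what a post-hoc audit needs.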

- Define measurable goals and success criteria before implementing automation.

- Decompose goals into actionable sub-tasks with explicit inputs, outputs, and dependencies.

- Assemble an agent network and plugin mix that aligns with the required capabilities.

- Run controlled pilots with guardrails, monitoring, and rollback plans.

- Monitor performance, collect feedback, and adapt the system to changing conditions.

Governance, security and risk management for AI agents

As autonomy grows, governance and risk management become foundational. Enterprises must implement access controls, data handling policies, and auditable decision trails to ensure compliance and accountability. Beyond compliance, governance helps manage reputational risk, supports oversight of agent behavior, and preserves the ability to intervene when needed. A structured approach includes policy enforcement at the plugin level, continuous monitoring of outcomes, and defined escalation paths for anomalous results or security incidents.

Key governance practices include maintaining least-privilege access to data and services, safeguarding sensitive information, and ensuring that all automated actions are traceable. Organizations should also establish clear ownership for automated workflows, define rollback and failover procedures, and implement periodic reviews of agent capabilities against evolving regulations and business goals.

- Access controls and least-privilege permissions across data sources and tools

- Data privacy, retention, and handling of personal or sensitive information

- Audit trails, explainability, and traceable decision logs

- Model and plugin version governance, testing, and rollback processes

- Incident response, business continuity, and rollback plans for automated tasks
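
Least-privilege access and traceability can be combined in a single authorization check, sketched below. The names `AgentRole`, `PermissionDenied`, and `authorize` are hypothetical, and the in-memory audit list stands in for whatever durable log store an organization actually uses.

```python
from __future__ import annotations

from dataclasses import dataclass


class PermissionDenied(Exception):
    """Raised when an agent attempts an operation outside its grants."""


@dataclass(frozen=True)
class AgentRole:
    """Hypothetical role: an explicit allow-list of (resource, operation) pairs."""
    name: str
    allowed: frozenset[tuple[str, str]]


def authorize(role: AgentRole, resource: str, operation: str, audit: list[dict]) -> None:
    """Record the access decision, then raise if the operation is not granted."""
    granted = (resource, operation) in role.allowed
    audit.append({"role": role.name, "resource": resource,
                  "operation": operation, "granted": granted})
    if not granted:
        raise PermissionDenied(f"{role.name} may not {operation} {resource}")
```

Anything not listed in `allowed` is denied by default, so widening an agent's reach requires an explicit, reviewable change to its role.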

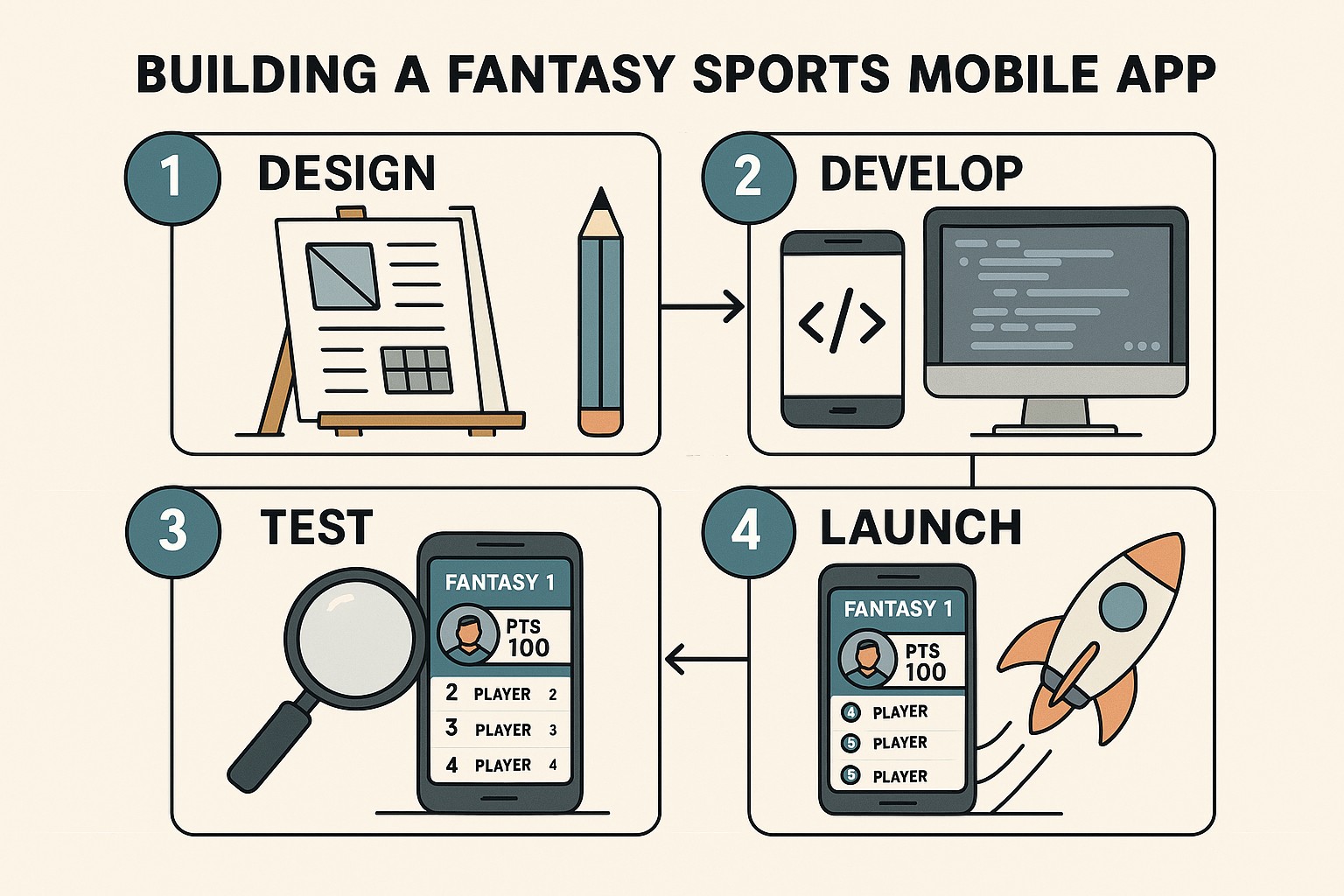

Practical adoption in businesses: from pilot to scale

Adopting AI agents in a business setting benefits from a deliberate, phased approach. Start with a targeted pilot that addresses a high-impact, low-complexity workflow where data quality is reasonably good and success metrics are well-defined. Use the pilot to validate technical feasibility, measure cycle times, and gather feedback from stakeholders. As confidence grows, incrementally expand the scope, introduce additional plugins, and tighten governance controls to accommodate larger data volumes and more complex processes.

Successful scale requires alignment with IT, security, and line-of-business sponsors. Establish a cross-functional governance council, define accountability for automated outcomes, and invest in training for teams to interpret agent decisions and intervene when necessary. Finally, redesign organizational processes to leverage automation as a core capability rather than a one-off experiment. This combination of technical rigor and organizational alignment helps ensure that intelligent automation delivers durable value across the enterprise.

FAQ

Below are questions that organizations commonly ask when evaluating AI agents and automation platforms. Each answer offers practical considerations to help assess feasibility and shape a roadmap.

How do AI agents differ from traditional automation?

AI agents differ from traditional automation in their ability to interpret broad goals, plan multiple steps, and adapt to new information without explicit reprogramming. Traditional automation typically follows fixed rules and deterministic workflows, whereas AI agents operate with probabilistic reasoning, manage uncertainty, and can orchestrate calls to various tools and services to achieve an objective. This enables more flexible end-to-end processes and quicker adaptation to changing business needs, at the cost of requiring stronger governance, monitoring, and risk controls.

What are the key risks, and how can they be mitigated?

The primary risks include data privacy and leakage, unintended side effects from automated actions, opaque decision-making, and potential outages if dependencies fail. Mitigation strategies include enforcing least-privilege access, implementing robust audit logs and explainability, incorporating human-in-the-loop checks for high-stakes decisions, and designing resilient fallback paths and rollback procedures. Regular risk assessments and governance reviews should accompany any scalable automation program.
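
A human-in-the-loop check for high-stakes decisions can be as simple as a routing rule. In the sketch below, the action names in `HIGH_STAKES`, the `route` function, and the 0.8 confidence threshold are all illustrative assumptions; each organization would define its own list and cut-off.

```python
from __future__ import annotations

# Hypothetical action names that always require human approval.
HIGH_STAKES = {"issue_refund", "delete_record"}


def route(action: str, confidence: float, threshold: float = 0.8) -> str:
    """Return "auto" to execute directly or "human_review" to queue for approval."""
    if action in HIGH_STAKES or confidence < threshold:
        return "human_review"
    return "auto"
```

With these assumptions, `route("tag_ticket", 0.95)` proceeds automatically, while `route("issue_refund", 0.99)` still goes to a reviewer because the action itself is on the high-stakes list.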

What governance should enterprises implement?

Enterprises should implement a layered governance model that covers data handling, access control, policy enforcement, and operational oversight. This includes clear ownership of automated workflows, standardized testing and release processes for plugins, continuous monitoring dashboards, and auditable decision trails. A governance framework should also define escalation mechanisms for anomalies and a schedule for periodically reviewing automation against regulatory changes and business priorities.

How to measure ROI of AI agents?

ROI can be measured by combining quantitative metrics such as cycle time reduction, human-hour savings, error rate improvements, and throughput, with qualitative indicators like employee satisfaction and stakeholder confidence. A robust measurement plan compares baseline performance against post-implementation performance across defined KPIs, while accounting for the costs of tooling, data preparation, integration, and governance. Long-term ROI often emerges from improved capacity to scale cognitive work and accelerate decision cycles.
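
The quantitative part of such a plan reduces to simple arithmetic: net benefit divided by total cost. The sketch below uses one common formulation, and every figure in it is a made-up placeholder rather than a benchmark. The function name `simple_roi` and its parameters are likewise hypothetical.

```python
from __future__ import annotations


def simple_roi(hours_saved: float, hourly_cost: float,
               errors_avoided: float, cost_per_error: float,
               total_cost: float) -> float:
    """ROI as (benefit - cost) / cost, with benefit from labor and error savings."""
    benefit = hours_saved * hourly_cost + errors_avoided * cost_per_error
    return (benefit - total_cost) / total_cost


# Placeholder figures for illustration only.
roi = simple_roi(
    hours_saved=1200, hourly_cost=50,       # 60,000 in labor savings
    errors_avoided=200, cost_per_error=25,  # 5,000 in avoided rework
    total_cost=40_000,                      # tooling + integration + governance
)
print(f"ROI: {roi:.1%}")  # prints "ROI: 62.5%"
```

The qualitative indicators mentioned above do not fit into this formula and should be tracked alongside it rather than forced into a single number.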

How to get started with AutoGPT and plugins?

Begin with a clearly defined, limited objective that touches multiple systems but remains within a safe risk envelope. Inventory the data sources and tools that will be needed, then identify candidate plugins that provide the necessary capabilities. Establish governance and security controls early, run a pilot in a controlled environment, and progressively expand scope as you validate performance, reliability, and compliance. Finally, align stakeholders across IT, security, and business units to ensure sustained buy-in and iterative improvement.