NLP vs LLM: Understanding Natural Language Processing vs Large Language Models

Foundations: what NLP and LLMs are designed to do

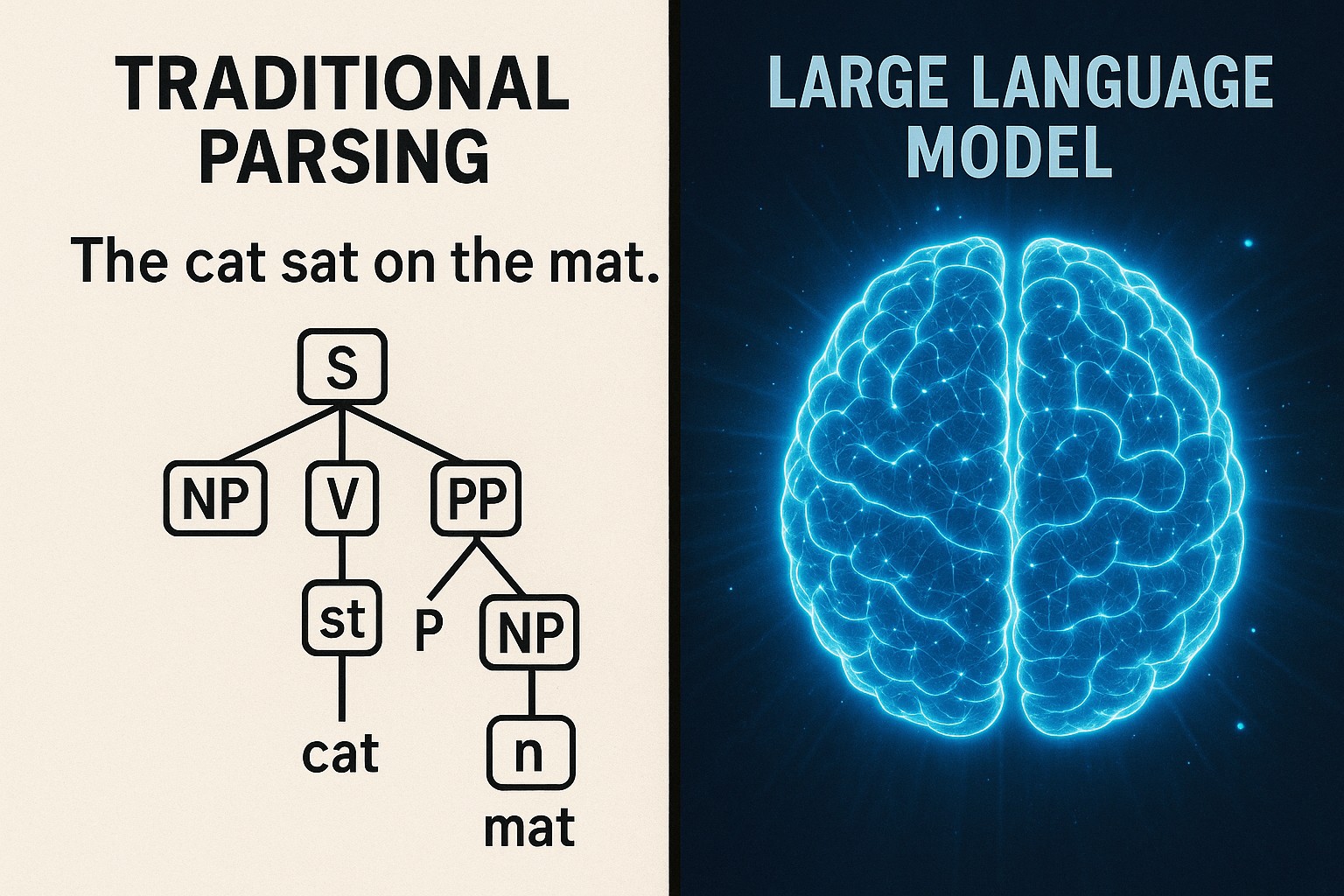

Natural language processing (NLP) and large language models (LLMs) both tackle the same fundamental problem—making sense of human language in a way that a machine can act on. Yet they originate from different design philosophies. NLP traditionally treats language as a structured signal to be analyzed, categorized, or transformed using explicit rules, statistical cues, or a blend of both. The goal is to extract deterministic signals: entities, relationships, sentiment, or syntactic structure that can be used downstream in business workflows such as policy enforcement, customer support routing, or document classification.

LLMs shift the focus from hand-crafted components to data-driven learning. These systems are trained to predict the next token (roughly, the next word or word fragment) in a sequence across massive text corpora, which gives them broad linguistic fluency and the ability to perform a variety of tasks with minimal task-specific programming. The outcome is not a pipeline of modular tools with fixed behavior, but a single model that can be prompted to summarize, translate, reason, or generate text in contexts it has never explicitly seen during training. This contrast between rule-based or feature-based analysis and end-to-end predictive generation shapes both expectations and tradeoffs for engineering teams.
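To make the next-word objective concrete, here is a deliberately tiny sketch: a bigram counter that predicts the most frequent follower of a word. It is not how an LLM is built (real models use neural networks over subword tokens), and the corpus and function names are made up for illustration. It only shows the shape of the training signal: the text itself supplies the "labels".

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for each word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Toy corpus, invented for this example.
corpus = [
    "the model predicts the next word",
    "the model learns from text",
    "the next word depends on context",
]
model = train_bigram(corpus)
print(predict_next(model, "next"))  # "word" follows "next" in both occurrences
```

No one labeled this data by hand, which is the property that lets the same objective scale to very large corpora.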

Traditional NLP: rules-based and statistical methods

Traditional NLP builds systems as pipelines of modular components. Each stage (tokenization, normalization, part-of-speech tagging, syntactic parsing, named entity recognition, coreference resolution, and sentiment analysis) implements a specific linguistic or statistical objective. Early approaches relied on handcrafted linguistic rules, while later work added statistical models such as conditional random fields (CRFs) and support vector machines (SVMs), trained on engineered features, to make data-driven judgments about text segments. The result is a collection of components that can be tuned and audited individually, with clear inputs and outputs at every stage.

These systems offer transparency, predictability, and strong performance in domains with limited vocabulary drift or where regulatory or interpretability requirements demand traceable logic. They excel when the task is well-defined and the data is domain-limited, such as processing invoices, legal documents, or customer tickets with structured formats. However, the cost of domain adaptation can be high: adding a new language, a new industry, or a new document type often requires re-engineering features, collecting labeled data, and re-tuning multiple components. In practice, teams rely on established tooling—open-source libraries and frameworks—that provide robust pipelines, governance hooks, and reproducible evaluation, but the models themselves can be brittle when language use shifts outside the training distribution.
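As a rough illustration of the modular style described above, the sketch below chains three small stages over an invoice-like string. The regular expressions, the `INV-` identifier format, and the function names are assumptions invented for this example, not a standard; a production pipeline would typically use an established NLP library for tokenization and entity recognition.

```python
import re

# Hypothetical patterns for an invoice-processing domain.
INVOICE_ID = re.compile(r"\binv-\d+\b")
AMOUNT = re.compile(r"\$\d+(?:\.\d{2})?")

def normalize(text):
    """Lowercase and collapse runs of whitespace."""
    return re.sub(r"\s+", " ", text.strip().lower())

def tokenize(text):
    """Naive whitespace tokenization; punctuation stays attached."""
    return text.split()

def extract_entities(text):
    """Rule-based extraction: each rule is a regex that can be audited."""
    return {
        "invoice_ids": INVOICE_ID.findall(text),
        "amounts": AMOUNT.findall(text),
    }

def run_pipeline(raw):
    """Run the stages in order and keep every intermediate output."""
    normalized = normalize(raw)
    return {
        "normalized": normalized,
        "tokens": tokenize(normalized),
        "entities": extract_entities(normalized),
    }

result = run_pipeline("  Invoice INV-1042   is due:  $250.00 ")
print(result["entities"])
```

Because every stage returns a plain value, each one can be unit-tested and replaced independently. The same property makes the pipeline brittle: an invoice number written in a different format simply will not match.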

LLMs in practice: transformers, pretraining, and prompting

LLMs are built on large-scale neural architectures, primarily transformers, that use attention mechanisms to model long-range dependencies in text. Pretraining is self-supervised: the model learns from raw text without manual labels, typically from corpora of billions to trillions of tokens drawn from diverse sources. The core idea is to learn statistical patterns of language so that the model can generate fluent text, complete prompts, translate, summarize, or reason about content with impressive generality. Because the model has seen a wide array of linguistic styles and domains, it can adapt to many tasks through prompting, in-context learning, or minimal fine-tuning rather than rebuilding separate components.
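For readers who want to see what "attention" computes, here is a minimal sketch of scaled dot-product attention in plain Python. It is a simplification under stated assumptions: a single head, no learned projection matrices, no masking, and tiny hand-written vectors. Real transformer layers add all of those and run on tensors.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Each query is compared with every key; the resulting weights
    mix the value vectors into one output vector per query.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs

# The query points in the same direction as the first key,
# so the first value vector receives the larger weight.
out = attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(out)
```

Every position can attend to every other position in a single step, which is what lets the architecture relate words that sit far apart in the input.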

In practice, organizations use LLMs for a spectrum of tasks, from drafting customer-facing responses to extracting insights from unstructured documents. The prompting paradigm enables rapid experimentation: you can describe the desired output, supply examples, and let the model infer the best approach. Yet this flexibility comes with caveats. Inference can be expensive and slow relative to a lightweight pipeline, and the model's outputs reflect learned correlations rather than guaranteed facts. Hallucinations (plausible but incorrect statements) are a well-known risk, as is the potential for biases embedded in the training data to surface in generated content. Responsible deployment therefore combines robust prompting strategies with safeguards, monitoring, and, where necessary, domain-specific fine-tuning or instruction tuning.
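The "describe the output and supply examples" pattern can be sketched without calling any model at all. The helper below only assembles a few-shot prompt string. The `Input:`/`Output:` layout and the function name are assumptions made for this example; what works best varies by model and provider.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, worked examples, and the new input."""
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Input: {text}")
        parts.append(f"Output: {label}")
        parts.append("")
    parts.append(f"Input: {query}")
    parts.append("Output:")  # left open for the model to complete
    return "\n".join(parts)

prompt = build_few_shot_prompt(
    instruction="Classify each support message as 'billing' or 'technical'.",
    examples=[
        ("I was charged twice this month.", "billing"),
        ("The app crashes when I log in.", "technical"),
    ],
    query="My invoice shows the wrong amount.",
)
print(prompt)
```

The resulting string would be sent to whichever model you use. Keeping prompt construction in a plain function makes it easy to version and test alongside the rest of the code.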

Where they intersect: tasks, data, and evaluation

Both NLP and LLMs can tackle many common language tasks such as classification, extraction, translation, and summarization, but they do so with different philosophies and resource footprints. For clearly bounded problems with stable terminology and a requirement for interpretability, traditional NLP pipelines often deliver reliable and reproducible results with explicit error budgets. When the goal is broad coverage, fast experimentation, or unstructured tasks that span multiple domains, LLMs offer versatility and reduced upfront engineering effort, delivering value through flexible generation and inference with minimal task-specific coding.

Data availability and governance drive the decision. If labeled data is scarce but unlabeled text is plentiful, LLMs and self-supervised approaches may be attractive because they leverage vast corpora to learn useful representations. If data labeling is feasible and the business needs precise control over behavior, a traditional pipeline can be engineered to meet policy, compliance, and audit requirements. Evaluation mirrors these tradeoffs: classic NLP emphasizes task-specific metrics like precision, recall, F1, and confusion matrices, while LLM evaluation often blends automated checks for coherence and factuality with human review, and includes safety and bias assessments as part of governance frameworks.
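The classic metrics mentioned above are simple to compute by hand, which is part of their appeal. A minimal sketch for the binary case follows; the labels are toy data made up for illustration, and in practice a library such as scikit-learn would usually handle this.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

# Toy labels: 2 true positives, 1 false positive, 1 false negative.
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)  # each is 2/3 for this toy data
```

Open-ended generation has no single reference label to count against, which is why LLM evaluation tends to lean on rubric-based checks and human review instead.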

- Approach alignment: symbolic/rule-based pipelines vs data-driven neural models

- Data regime: labeled, domain-specific data vs massive unlabeled corpora plus prompting

- Governance considerations: interpretability and auditability vs safety, bias, and factual accuracy

- Performance characteristics: deterministic behavior and stability vs flexible but sometimes inconsistent outputs

- Adaptation path: modular component updates vs prompt design and selective fine-tuning

- Operational footprint: potentially higher inference cost and latency for LLMs vs lighter pipelines

- Evaluation complexity: targeted metrics for NLP pipelines vs human-in-the-loop and multi-faceted evaluation for LLMs

- Data privacy: proprietary data exposure risk in cloud-based LLM workflows vs on-prem or controlled NLP environments

- Versioning and governance: reproducibility of engineered features vs model versioning, prompt templates, and guardrails

NLP techniques in practice

Classic NLP techniques and pipelines rely on well-understood components. Tokenization, normalization, and stemming prepare raw text for analysis by removing noise and standardizing representations. Part-of-speech tagging and parsing reveal grammatical structure, enabling downstream tasks such as question answering, machine translation, and information extraction. Named entity recognition and relation extraction transform unstructured text into structured data your systems can reason with, while sentiment analysis and topic modeling provide business intelligence signals. Feature engineering—hand-crafting linguistic cues, lexical indicators, and domain-specific heuristics—often remains central to performance in tightly scoped domains.
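Feature engineering is easier to picture with an example. The sketch below turns a support message into a small dictionary of hand-crafted features that a classifier such as logistic regression could consume. The word list, the order-number pattern, and the feature names are hypothetical, chosen only to illustrate the idea.

```python
import re

# Hypothetical domain lexicon; a real one would be curated from data.
NEGATIVE_WORDS = {"refund", "broken", "late", "cancel"}
ORDER_ID = re.compile(r"\border\s*#?\d+\b", re.IGNORECASE)

def extract_features(text):
    """Map raw text to interpretable, hand-crafted features."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return {
        "num_tokens": len(tokens),
        "num_negative_words": sum(1 for t in tokens if t in NEGATIVE_WORDS),
        "has_question_mark": "?" in text,
        "has_exclamation": "!" in text,
        "contains_order_id": bool(ORDER_ID.search(text)),
    }

features = extract_features("My order #4521 arrived broken. Can I get a refund?")
print(features)
```

Each feature has an obvious meaning, so when the downstream model makes a mistake, you can inspect exactly which cues it saw.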

Statistical models then interpret these features. Conditional random fields, logistic regression, and support vector machines have historically formed the backbone of robust NLP systems, offering interpretable parameters and principled optimization. Evaluation focuses on precision, recall, F1, and error analysis to drive iterative improvements. The operational realities of NLP—latency, throughput, and maintainability—drive decisions about model complexity, hardware requirements, and deployment architecture. For teams, the payoff is a predictable, auditable system whose behavior can be traced through individual components and parameter settings.

LLMs: capabilities, limitations, and governance

LLMs bring broad capabilities to the table, including real-time generation, multi-step reasoning, translation, and summarization across many languages. They can be prompted with examples to perform tasks without writing bespoke code or extensive feature engineering. In-context learning enables the model to infer task instructions from examples embedded in the prompt, offering a rapid experimentation loop for new use cases. However, reliance on massive corpora means outputs can be influenced by data quality and distribution, leading to hallucinations, biases, or leakage of sensitive information if not properly managed.

From a governance perspective, organizations must address safety, privacy, and compliance. Guardrails, fact-checking hooks, and post-generation filtering are often necessary, especially for customer-facing applications or regulated industries. The tradeoffs between latency, cost, and model fidelity require a careful design: choosing between hosted API access versus on-premises or private models, deciding how much to fine-tune versus rely on prompts, and implementing monitoring to detect drift, misalignment, or unsafe outputs. When used responsibly, LLMs can unlock rapid experimentation, cross-domain capability, and efficient handling of unstructured content, but they demand robust risk management and clear ownership of model behavior.
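A post-generation filter can start out very simple. The sketch below checks a generated reply against a blocklist and a length limit before it reaches a user. The blocked terms, the limit, and the function name are assumptions for illustration; real guardrails usually layer several checks, including classifiers, on top of rules like these.

```python
def check_output(text, blocked_terms, max_chars=500):
    """Return (ok, reasons) for a generated reply.

    Matching is a case-insensitive substring test, so the term
    "guarantee" also flags "guaranteed".
    """
    reasons = []
    lowered = text.lower()
    for term in blocked_terms:
        if term.lower() in lowered:
            reasons.append(f"blocked term: {term}")
    if len(text) > max_chars:
        reasons.append(f"longer than {max_chars} characters")
    return (not reasons, reasons)

# Hypothetical policy: replies must not promise outcomes.
ok, reasons = check_output(
    "We guarantee a full refund within one day.",
    blocked_terms=["guarantee", "legal advice"],
)
print(ok, reasons)
```

Returning the reasons alongside the verdict gives monitoring and human reviewers something concrete to act on.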

Key distinctions at a glance

- Approach: symbolic and rule-based pipelines paired with traditional statistical models vs end-to-end neural models trained on large corpora

- Data needs: curated labeled datasets for traditional NLP versus massive unlabeled data and prompt-driven adaptation for LLMs

- Output characteristics: deterministic, interpretable component behavior vs probabilistic generation with potential variability

- Adaptation: modular component updates and explicit feature engineering vs prompt design and limited fine-tuning

- Operational considerations: lower, more predictable inference costs in traditional NLP vs higher, often variable costs and latency in LLM deployments

- Evaluation: task-specific metrics and error analysis for NLP pipelines vs multi-faceted evaluation including factuality and safety for LLMs

- Governance: greater emphasis on auditability and interpretability in NLP pipelines vs risk management, guardrails, and privacy controls for LLMs

- Use-case fit: structured, domain-limited tasks with formal requirements vs broad, exploratory tasks that benefit from generalization

- Reliability: careful, repeatable behavior in traditional NLP vs occasional inconsistency and hallucination risk in LLMs

- Maintenance: feature-driven updates and version control in NLP projects vs model and prompt lifecycle management in LLM deployments

- Talent and tooling: a mature ecosystem of pipelines, libraries, and governance for NLP vs a fast-evolving ecosystem around LLMs, prompts, and safety frameworks

NLP techniques in practice (continued)

In real-world projects, teams often blend approaches, using traditional NLP for stable, high-precision components and LLMs for tasks that benefit from generalization or quick prototyping. For example, an enterprise search workflow might employ a robust, rule-based normalization and entity extraction layer to ensure deterministic results, while a companion LLM module handles user-facing summarization or question answering in a more flexible, conversational context. Hybrid architectures demand careful interface design, version control for prompts and templates, and clear ownership of data provenance and model behavior.
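One way to picture the interface between the two layers is sketched below: a deterministic extraction function paired with a pluggable `summarize` callable. Everything here is hypothetical, including the `TICKET-` identifier format, and `fake_summarize` is a stand-in that returns the first sentence so the example runs without any model. In a real system that callable would wrap an LLM call.

```python
import re
from typing import Callable, Dict, List

def extract_ticket_ids(text: str) -> List[str]:
    """Deterministic layer: rule-based extraction of ticket identifiers."""
    return sorted(set(re.findall(r"\bTICKET-\d+\b", text)))

def process_document(document: str, summarize: Callable[[str], str]) -> Dict:
    """Combine the deterministic layer with a generative one."""
    return {
        "ticket_ids": extract_ticket_ids(document),
        "summary": summarize(document),
    }

def fake_summarize(text: str) -> str:
    """Stand-in for an LLM call: returns the first sentence only."""
    return text.split(".")[0].strip() + "."

doc = "TICKET-12 reports a login failure. It duplicates TICKET-7."
result = process_document(doc, summarize=fake_summarize)
print(result)
```

Passing the generative step in as a parameter keeps the deterministic code testable on its own and makes it straightforward to swap models or prompt versions later.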

When planning a deployment, consider the entire lifecycle: data collection and labeling strategies, evaluation plans that incorporate both automated metrics and human reviews, monitoring for drift and safety, and governance around privacy and regulatory compliance. The goal is to align the chosen approach with business objectives, risk tolerance, and the ability to iterate quickly while maintaining predictability where it matters most.

FAQ

Which approach should a business choose for a given problem?

The choice depends on the problem scope, data availability, and governance requirements. If the task is tightly defined, domain-specific, and requires interpretable, auditable behavior, traditional NLP pipelines with well-understood components are often the safer, more cost-predictable option. If the goal is rapid experimentation across diverse domains, or if you have large volumes of unstructured text and need flexible generation or multilingual capabilities, LLMs can deliver value with less upfront feature engineering. In many cases, a hybrid approach—combining a robust NLP backbone with an LLM-supported layer for tasks like summarization or conversational interactions—offers a practical balance between control and versatility.

How should evaluation differ between NLP and LLM deployments?

Evaluation should reflect the core objectives and risk profile of the application. For NLP pipelines, standard task-specific metrics (precision, recall, F1, accuracy) and error analysis are typically sufficient, with emphasis on reproducibility and interpretability. For LLMs, evaluation often includes checks for factuality, consistency, safety, and bias, in addition to traditional quality metrics like coherence and relevance. Human-in-the-loop reviews can be essential for assessing user impact and boundary conditions. Finally, monitoring post-deployment—watching for drift in model behavior or changes in data distributions—helps maintain performance over time.

What about data privacy and security when using LLMs?

Data privacy considerations are critical when engaging with external LLM services. Any sensitive or proprietary content routed to cloud-based models can pose risk. Strategies to mitigate this include using on-premises or private deployments, masking or redacting sensitive data before submission, limiting the type of data sent to providers, and enforcing strict data governance policies. For many enterprises, a hybrid approach—processing sensitive data with in-house NLP components and reserving LLM usage for non-sensitive, high-value tasks—can offer a safer balance between capability and compliance.
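Masking before submission can be sketched with a couple of regular expressions. The patterns below cover only simple email addresses and one phone-number format, and they are assumptions for illustration. Real redaction needs broader coverage (names, account numbers, addresses) and is usually handled by a dedicated tool plus review.

```python
import re

# Simplified patterns; they will miss many real-world variants.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")

def redact(text):
    """Replace emails and phone numbers with placeholder tags."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text

safe = redact("Contact jane.doe@example.com or 555-123-4567.")
print(safe)  # Contact [EMAIL] or [PHONE].
```

Running the redaction inside your own environment means the external service only ever sees the placeholder tags.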

How can organizations reduce hallucinations and bias when using LLMs?

Reducing hallucinations and bias requires a combination of design and governance. Techniques include prompting strategies that constrain outputs, retrieval-augmented generation to ground responses in verified sources, and post-generation verification against trusted facts. Bias mitigation involves diverse and representative data, auditing outputs across demographic contexts, and implementing guardrails or safety classifiers to flag problematic content. Regular evaluation with human review and clear escalation paths for questionable results are essential as part of an ongoing risk management plan.
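Retrieval-augmented generation has two parts that can be shown without a model: picking relevant passages and building a prompt that restricts the answer to them. The sketch below uses word overlap as the relevance score, which is a stand-in for the embedding or BM25 search a real system would likely use. The documents and function names are invented for the example.

```python
import re

def words(text):
    """Lowercased set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=2):
    """Rank documents by word overlap with the query; drop zero matches."""
    query_words = words(query)
    scored = [(len(query_words & words(doc)), doc) for doc in documents]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [doc for _, doc in scored[:k]]

def build_grounded_prompt(query, passages):
    """Ask the model to answer only from the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}\nAnswer:"
    )

docs = [
    "Refunds are issued within 14 days of a return request.",
    "Standard shipping takes 3 to 5 business days.",
    "Support is available by email on weekdays.",
]
question = "How many days do refunds take?"
top = retrieve(question, docs, k=1)
prompt = build_grounded_prompt(question, top)
print(prompt)
```

Grounding does not eliminate hallucinations, but it gives the post-generation verification step a concrete set of sources to check the answer against.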

What does the future hold for NLP and LLMs in enterprise settings?

The trajectory points toward tighter integration of robust NLP pipelines with adaptive LLM capabilities, in architectures that combine the strengths of both. Expect more fine-tuning options, better prompt engineering tooling, and standardized governance frameworks that make it easier to monitor, audit, and deploy language models. As models become more capable and more transparent, organizations will migrate toward modular, scalable solutions that maintain compliance, reduce operational risk, and empower business users to extract actionable insights from language data at scale.