When to Choose NoSQL vs SQL: Use Case Guide

Overview: SQL vs NoSQL at a Glance

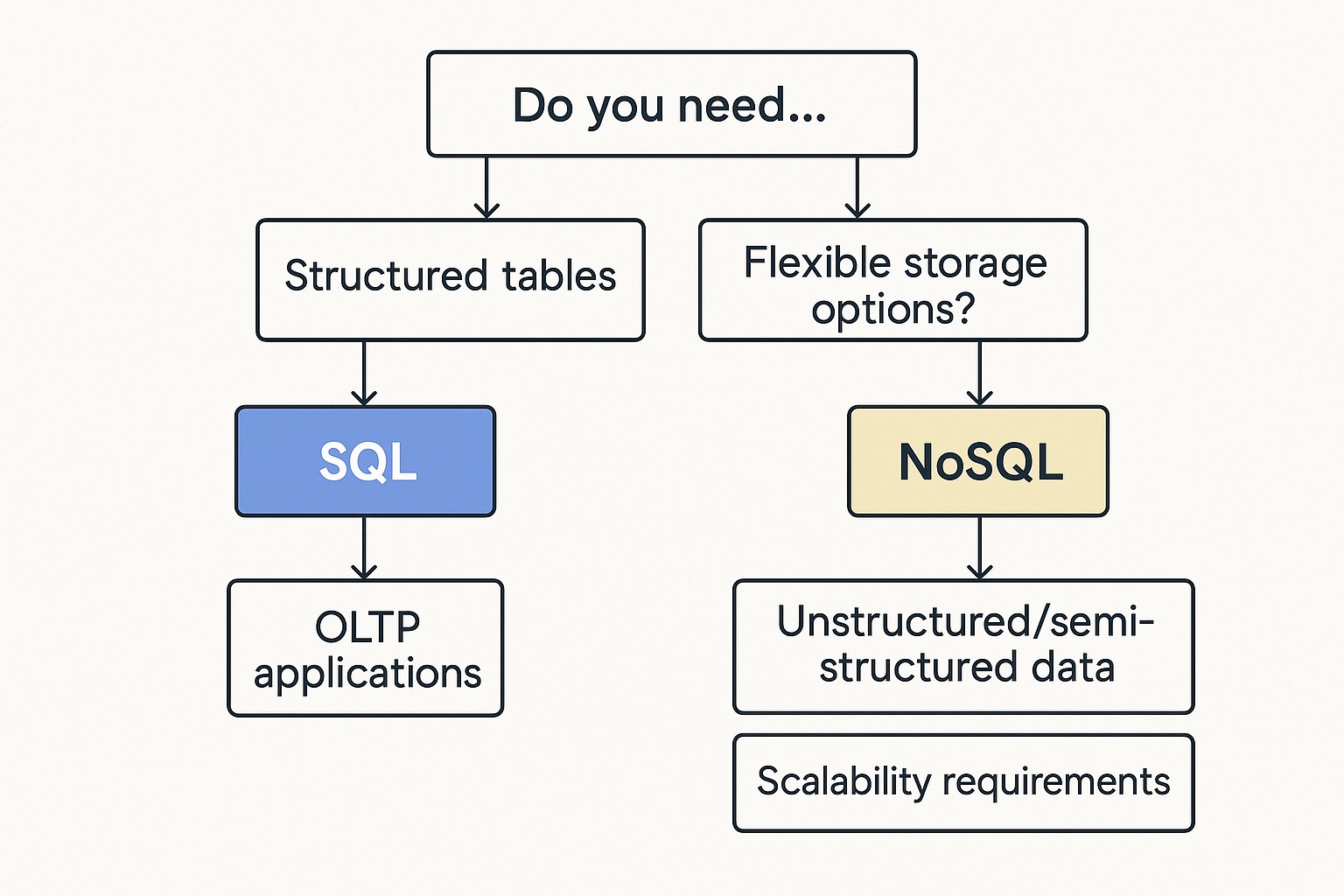

In modern data architecture, SQL databases have long stood as the default for structured data, strong consistency, and complex transactional workloads. NoSQL databases emerged to address needs around flexible schemas, rapid development, and scale across distributed environments. The choice between SQL and NoSQL is not purely technical; it hinges on data modeling, access patterns, and risk tolerance. This guide outlines how to evaluate use cases and requirements to decide which paradigm fits best in common business scenarios.

Today, many organizations deploy polyglot persistence: multiple data stores tailored to distinct workloads instead of forcing a single technology to do everything. Relational systems cover core transactional workloads with ACID guarantees, while NoSQL options excel at handling unstructured or semi-structured data, high-velocity ingestion, and horizontal scaling across regions. Understanding the strengths and trade-offs of each helps teams design architectures that are resilient, scalable, and cost-effective in real-world operations.

- Schema and data modeling: SQL enforces structured schemas and normalization; NoSQL embraces schema flexibility.

- Consistency and transactions: SQL offers strong ACID transactions; many NoSQL options relax consistency in order to stay available during network partitions (the CAP trade-off).

- Scaling approach: SQL systems typically scale vertically, by adding CPU, memory, or faster storage to a single server; many NoSQL systems scale horizontally, by adding nodes to a cluster.

- Query capabilities: SQL provides rich joins and analytics; NoSQL models vary but often optimize for specific access patterns.

- Tooling and ecosystem: SQL has mature tooling; NoSQL ecosystems offer specialized options for diverse data types.

Core SQL Use Cases

SQL databases excel when the domain requires structured data, predictable queries, and strong guarantees around accuracy. Financial ledger systems, inventory and order management, and HR systems often rely on multi-row transactions, referential integrity, and complex aggregations. In these contexts, the relational model provides a clear path to data normalization, consistent reporting, and audit trails.

The SQL ecosystem is mature, with a standardized language, broad tooling, and extensive best practices for backup, security, and governance. This predictability matters in regulated industries and in teams that rely on long-standing skill sets. However, as data grows and query needs evolve, maintaining performance at scale may require careful schema design, indexing strategies, and partitioning.

Strengths for SQL in these contexts include:

- ACID transactions and strong consistency across related records

- Powerful join and aggregation capabilities for complex queries

- Structured data modeling with referential integrity and normalization

- Established governance, auditing, and compliance tooling
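As a minimal sketch of what atomic transactions buy in a ledger-style workload, the Python example below uses the standard-library `sqlite3` module. The `accounts` table, the starting balances, and the `transfer` helper are illustrative assumptions, not part of any particular system.

```python
import sqlite3


def make_ledger() -> sqlite3.Connection:
    """Create an in-memory ledger with two accounts (illustrative data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts ("
        " id INTEGER PRIMARY KEY,"
        " balance INTEGER NOT NULL CHECK (balance >= 0))"  # no overdrafts
    )
    conn.executemany(
        "INSERT INTO accounts (id, balance) VALUES (?, ?)",
        [(1, 100), (2, 50)],
    )
    conn.commit()
    return conn


def transfer(conn: sqlite3.Connection, src: int, dst: int, amount: int) -> bool:
    """Move `amount` from src to dst atomically; return False if rolled back."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?",
                (amount, dst),
            )
            # If this debit violates the CHECK constraint, the credit above
            # is rolled back together with it.
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False


def balances(conn: sqlite3.Connection) -> dict:
    """Return {account_id: balance} for all accounts."""
    return dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))
```

Calling `transfer(conn, 1, 2, 500)` on this ledger fails the balance check, and neither account changes: the credit and the debit succeed or fail as a unit.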

Core NoSQL Use Cases

NoSQL databases shine when data is semi-structured or evolving, and when workloads demand scale and availability. Document stores are popular for content management, user profiles, and catalogs; key-value stores excel at caching and session data; column-family stores handle wide rows and time-series data; graph databases model relationships directly and are built for traversals. These patterns map naturally to real-world applications where rigid schemas slow development or where data arrives at high velocity from diverse sources.

Flexibility in schema design reduces early development friction, and horizontal scaling allows organizations to grow capacity without costly vertical upgrades. Many NoSQL systems are optimized for particular access patterns and data models, enabling low-latency reads and writes across distributed environments. The trade-offs typically involve relaxed transactional guarantees and, in some configurations, eventual consistency, accepted in exchange for simpler scale-out and resilience at scale.

Strengths for NoSQL in these contexts include:

- Schema flexibility and rapid evolution of data models

- Horizontal scaling to handle large datasets and high throughput

- Optimized data models for specific access patterns (document, key-value, column-family, graph)

- High availability and geographic distribution with simplified operational models
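To make schema flexibility and horizontal scaling concrete, here is a toy sketch in Python. It is not the API of any real NoSQL product: `ShardedDocumentStore`, its methods, and the sample keys are hypothetical, and each "shard" is just an in-memory dict standing in for a node.

```python
import hashlib
from typing import Any, Dict, List, Optional


class ShardedDocumentStore:
    """Toy document store; each shard is a dict standing in for a node."""

    def __init__(self, shard_count: int) -> None:
        self.shards: List[Dict[str, Dict[str, Any]]] = [
            {} for _ in range(shard_count)
        ]

    def shard_for(self, key: str) -> int:
        """Route a key to a shard using a stable hash of the key."""
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self.shards)

    def put(self, key: str, document: Dict[str, Any]) -> None:
        """Store a document; no schema is enforced on its fields."""
        self.shards[self.shard_for(key)][key] = document

    def get(self, key: str) -> Optional[Dict[str, Any]]:
        """Fetch a document by key, or None if it does not exist."""
        return self.shards[self.shard_for(key)].get(key)


store = ShardedDocumentStore(shard_count=4)
# Documents with different shapes coexist without a migration.
store.put("user:1", {"name": "Ada"})
store.put("product:9", {"sku": "X-9", "tags": ["new"], "dims": {"w": 2}})
```

Simple modulo routing like this remaps most keys when the shard count changes, which is why production systems generally use more careful partitioning schemes such as consistent hashing.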

How to Decide: Requirements, Data Model, and Workload

Start with a clear map of data shapes, mutation patterns, and access paths. If your domain changes frequently, or you must accommodate diverse or nested data without heavy migrations, NoSQL can be a natural fit. If you require strong multi-record ACID transactions or complex referential integrity across many entities, SQL is often the safer baseline.

Next, assess workload characteristics. Are you performing heavy analytics and reporting on operational data, or serving high-velocity transactions with strict latency budgets? If analytics is a priority, consider introducing a separate analytical store or data warehouse that can ingest from both SQL and NoSQL sources, preserving core transactional workloads while enabling robust BI capabilities.

Finally, weigh operational constraints: team expertise, budget, governance requirements, and disaster recovery. A pragmatic approach is to start with one primary store for core capabilities and add secondary stores to handle specific workloads, a pattern known as polyglot persistence that can unlock the best of both worlds while distributing risk.

- Map data shapes and mutation patterns: identify fixed schemas versus evolving structures, and determine where schemas are likely to change over time.

- Evaluate consistency requirements: decide where strong ACID is essential and where eventual consistency is acceptable for performance or availability reasons.

- Assess query patterns and analytics needs: plan for how data will be queried, joined, and reported, including cross-system data flows.

- Estimate scale, availability, and operational complexity: forecast growth, regional distribution, backups, and monitoring to guide tool selection.
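The checklist above can be sketched as a small first-pass heuristic. The field names, the unweighted signals, and the four outcomes are assumptions made for illustration; a real evaluation would weigh these factors against team expertise, budget, and governance rather than treat them as booleans.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirements:
    """Hypothetical requirement flags taken from the checklist."""
    multi_record_acid: bool           # strong ACID across related records
    complex_joins_or_reporting: bool  # joins, aggregations, reporting
    evolving_schema: bool             # data shapes change frequently
    high_velocity_ingest: bool        # sustained high write throughput
    multi_region_scale: bool          # horizontal scale across regions


def suggest_store(req: Requirements) -> str:
    """Return 'sql', 'nosql', 'polyglot', or 'either' as a starting point."""
    sql_signals = req.multi_record_acid or req.complex_joins_or_reporting
    nosql_signals = (
        req.evolving_schema
        or req.high_velocity_ingest
        or req.multi_region_scale
    )
    if sql_signals and nosql_signals:
        return "polyglot"  # different workloads pull in different directions
    if sql_signals:
        return "sql"
    if nosql_signals:
        return "nosql"
    return "either"        # no strong signal; decide on operational grounds


ledger = Requirements(True, True, False, False, False)
clickstream = Requirements(False, False, True, True, True)
```

Here `suggest_store(ledger)` returns `"sql"` and `suggest_store(clickstream)` returns `"nosql"`; a workload that sets flags on both sides returns `"polyglot"`.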

What scenarios benefit most from SQL versus NoSQL?

SQL is favored for well-defined domains with stable schemas, strict transactional requirements, and complex reporting needs. NoSQL shines in environments with evolving data models, high ingestion throughput, and the need to scale horizontally across regions or handle diverse data types with flexible access patterns.

Can a mixed environment be more effective than choosing one technology?

Yes. A mixed or polyglot approach lets you pair the strengths of SQL for transactional integrity with NoSQL for flexibility and scale. The key is clear ownership, well-defined data interfaces, and robust integration pipelines to keep data synchronized and governed across stores.
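One common shape for such a pairing is a relational system of record fronted by a key-value cache. The sketch below assumes that arrangement; `ProfileService`, the `users` table, and the plain dict standing in for a key-value store are all hypothetical, and `sqlite3` is used only because it ships with Python.

```python
import sqlite3
from typing import Dict, Optional


class ProfileService:
    """SQL is the source of truth; a dict stands in for a key-value cache."""

    def __init__(self) -> None:
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
        )
        self.cache: Dict[int, str] = {}
        self.db_reads = 0  # counts cache misses that reached the database

    def create(self, user_id: int, name: str) -> None:
        with self.db:  # commit on success, roll back on error
            self.db.execute(
                "INSERT INTO users (id, name) VALUES (?, ?)", (user_id, name)
            )

    def rename(self, user_id: int, name: str) -> None:
        with self.db:
            self.db.execute(
                "UPDATE users SET name = ? WHERE id = ?", (name, user_id)
            )
        # Invalidate after the write so the next read reloads from SQL.
        self.cache.pop(user_id, None)

    def get_name(self, user_id: int) -> Optional[str]:
        if user_id in self.cache:
            return self.cache[user_id]
        self.db_reads += 1
        row = self.db.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        if row is None:
            return None
        self.cache[user_id] = row[0]
        return row[0]
```

The design choice here is explicit ownership: writes go only to the relational store, and the cache is a disposable copy that is invalidated on every write, which keeps the synchronization rule simple to reason about.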

How should analytics be handled in a mixed environment?

Analytics in a mixed environment often relies on a separate analytical store or warehouse that ingests data from multiple sources. This approach preserves the performance of transactional systems while enabling scalable, schema-appropriate modeling for reports and dashboards.
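A minimal sketch of that idea follows, with an in-memory SQLite database standing in for the analytical store. The sample extracts, the `fact_orders` and `fact_events` tables, and both helper functions are invented for illustration; a real pipeline would add incremental loads, typing, and error handling.

```python
import sqlite3

# Hypothetical extracts: rows from a relational store, documents from a
# document store. Note that the documents do not share a fixed shape.
sql_orders = [(1, 120.0), (2, 35.5), (1, 14.5)]  # (user_id, order_total)
nosql_events = [
    {"user_id": 1, "type": "page_view"},
    {"user_id": 2, "type": "page_view", "referrer": "newsletter"},
    {"user_id": 1, "type": "search", "query": "laptops"},
]


def build_warehouse(orders, events) -> sqlite3.Connection:
    """Load both extracts into a separate analytical database."""
    wh = sqlite3.connect(":memory:")
    wh.execute("CREATE TABLE fact_orders (user_id INTEGER, total REAL)")
    wh.execute("CREATE TABLE fact_events (user_id INTEGER, type TEXT)")
    wh.executemany("INSERT INTO fact_orders VALUES (?, ?)", orders)
    # Keep only the fields the reports need from each document.
    wh.executemany(
        "INSERT INTO fact_events VALUES (?, ?)",
        [(e["user_id"], e["type"]) for e in events],
    )
    wh.commit()
    return wh


def user_report(wh: sqlite3.Connection) -> list:
    """Return (user_id, revenue, event_count) rows ordered by user_id."""
    query = """
        SELECT o.user_id, o.revenue, COALESCE(e.event_count, 0)
        FROM (SELECT user_id, SUM(total) AS revenue
              FROM fact_orders GROUP BY user_id) AS o
        LEFT JOIN (SELECT user_id, COUNT(*) AS event_count
                   FROM fact_events GROUP BY user_id) AS e
          ON e.user_id = o.user_id
        ORDER BY o.user_id
    """
    return wh.execute(query).fetchall()


report = user_report(build_warehouse(sql_orders, nosql_events))
```

Because the report runs against the analytical copy, neither source system serves the join or the aggregation.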

What governance considerations matter when using multiple data stores?

Governance considerations include data lineage, access controls, auditing, and compliance across all stores. Establish consistent metadata management, centralized authentication, and clear data ownership to maintain security and risk management as the architecture evolves.

When is polyglot persistence most advantageous?

Polyglot persistence is advantageous when different data access patterns are best served by different data stores, enabling each store to excel in its role. The trade-off is added integration complexity, operational overhead, and the need for disciplined governance and clear ownership to avoid fragmentation.